Over the past few months we’ve hit several major milestones in the development of Project North Star. At the same time, hardware hackers have built their own versions of the AR headset, with new prototypes appearing in Tokyo and New York. But the most surprising developments come from North Carolina, where a 19-year-old AR enthusiast […]

// Code

Update (6/8/17): Interaction Engine 1.0 is here! Read more on our release announcement: blog.leapmotion.com/interaction-engine

Game physics engines were never designed for human hands. In fact, when you bring your hands into VR, the results can be dramatic. Grabbing an object in your hand or squishing it against the floor, you send it flying as the physics […]

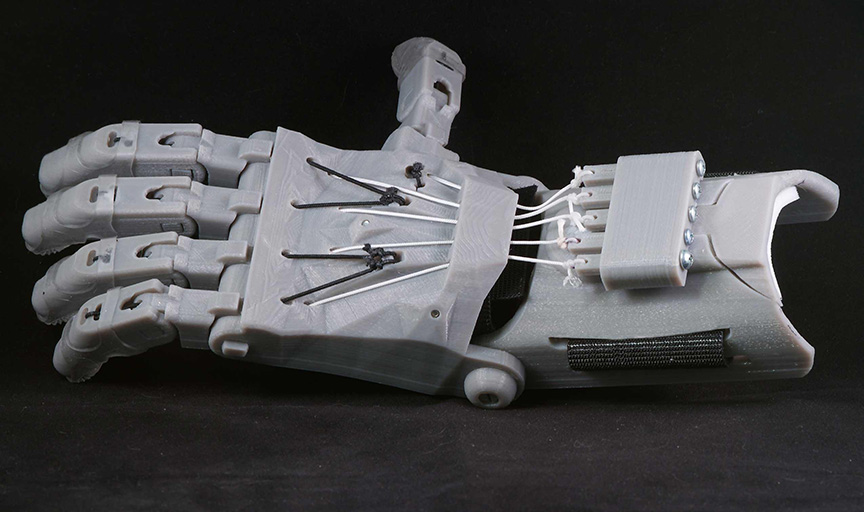

There are no limits to what you can hack together with the Leap Motion Controller – which is why this year’s Leap Motion 3D Jam includes an Open Track for desktop and Internet of Things projects! In this post, hardware hacker Syed Anwaarullah walks through his 3D-printed robotic hand project, which appeared at India’s first-ever Maker Faire. The […]

With the 3D Jam just around the corner, we thought we’d give you a head start – with a full guide to the very latest resources to bring your projects to life. In this post, we’ll cover everything you need to know about our integrations and best practices for augmented and virtual reality, desktop, and the Internet of Things. A […]

What makes a collection of pixels into a magic experience? The art of storytelling. At the latest VRLA Summer Expo, creative coder Isaac Cohen (aka Cabbibo) shared his love for the human possibilities of virtual reality, digital experiences, and the power of hugs. Isaac opens the talk by thinking about how we create the representation […]

When the Leap Motion Controller made its rounds at our office a couple of years ago, it’s safe to say we were blown away. For me at least, it was something from the future. I was able to physically interact with my computer, moving an object on the screen with the motion of my hands. And that was amazing.

Fast-forward two years, and we’ve found that PubNub has a place in the Internet of Things… a big place. To put it simply, PubNub streams data bidirectionally to control and monitor connected IoT devices. PubNub is a glue that holds any number of connected devices together – making it easy to rapidly build and scale real-time IoT, mobile, and web apps by providing the data stream infrastructure, connections, and key building blocks that developers need for real-time interactivity.

With that in mind, two of our evangelists had the idea to combine the power of Leap Motion with the brains of a Raspberry Pi to create motion-controlled servos. In a nutshell, the application enables a user to control servos using motions from their hands and fingers. Whatever motion their hand makes, the servo mirrors it. And even cooler, because we used PubNub to connect the Leap Motion to the Raspberry Pi, we can control our servos from anywhere on Earth.
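To make the "servo mirrors the hand" idea concrete, here is a minimal Python sketch of the data flow, not the evangelists' actual code. It assumes a hypothetical usable hand range of -150 to 150 mm, a servo with 0 to 180 degrees of travel, and end-stop pulse widths of 1000 and 2000 microseconds; the function names and the `"pan"` servo label are made up for illustration. The publish/subscribe step itself is left out: the JSON string is simply what would travel over the data stream between the two machines.

```python
import json

# Assumed ranges: illustrative values, not taken from the original project.
HAND_MIN_MM, HAND_MAX_MM = -150.0, 150.0     # hypothetical usable range of palm x position
SERVO_MIN_DEG, SERVO_MAX_DEG = 0.0, 180.0    # assumed servo travel
PULSE_MIN_US, PULSE_MAX_US = 1000.0, 2000.0  # assumed pulse widths at the end stops


def scale(value, in_min, in_max, out_min, out_max):
    """Linearly map value from one range to another, clamping to the input range."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def encode_hand_message(palm_x_mm):
    """Sender side (computer with the controller): hand position -> JSON payload."""
    angle = scale(palm_x_mm, HAND_MIN_MM, HAND_MAX_MM, SERVO_MIN_DEG, SERVO_MAX_DEG)
    return json.dumps({"servo": "pan", "angle": round(angle, 1)})


def decode_to_pulse_us(payload):
    """Receiver side (e.g. the Raspberry Pi): JSON payload -> servo pulse width."""
    angle = json.loads(payload)["angle"]
    return scale(angle, SERVO_MIN_DEG, SERVO_MAX_DEG, PULSE_MIN_US, PULSE_MAX_US)


if __name__ == "__main__":
    message = encode_hand_message(0.0)  # hand centered over the controller
    print(message)
    print(decode_to_pulse_us(message))
```

Clamping on the sender side means a hand that drifts out of range parks the servo at its end stop instead of asking it to move past its travel.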

Among developers, interactive designers, and digital artists, Processing is an enormously popular way to build compelling experiences with minimal coding. We’ve seen hundreds of Leap Motion experiments using Processing, from Arduino hacks to outdoor art installations, and the list grows every week.

James Britt, aka Neurogami, is the developer behind the LeapMotionP5 library, which brings together our Java API with the creative power of Processing. He’s just rolled out a major update to the library, including a new boilerplate demo and a demo designed to bridge hand input with musical output. We caught up with James to ask about the library, his latest examples, and how you can get started.

For hardware hackers, boards like Arduino and Raspberry Pi are the essential building blocks that let them mix and mash things together. But while these devices don’t have the processing power to run our core tracking software, there are many ways to bridge hand tracking input on your computer with the Internet of Things.

In this post, we’ll look at a couple of platforms that can get you started right away, along with some other open source examples. This is by no means an exhaustive list – Arduino’s website features hundreds of connective possibilities, from different communication protocols to software integrations. Whether you connect your board directly to your computer, or send signals over Wi-Fi, there’s always a way to hack it.
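Whichever link you choose, the computer usually ends up sending the board small, self-checking messages. As one possible sketch (the frame layout, the checksum, and the function names are assumptions for illustration, not part of any Leap Motion or Arduino API), here is how a servo command could be packed into a newline-terminated ASCII frame and validated on the receiving end. Writing the bytes to a serial port or socket is deliberately left out.

```python
def build_frame(servo_id, angle_deg):
    """Pack one command as ASCII: '<id>:<angle>*<checksum>' plus a newline."""
    angle = max(0, min(180, int(round(angle_deg))))  # clamp to an assumed 0-180 range
    body = f"{servo_id}:{angle:03d}"
    checksum = sum(body.encode("ascii")) % 256       # simple additive checksum
    return f"{body}*{checksum:02X}\n".encode("ascii")


def parse_frame(frame):
    """Return (servo_id, angle), or None if the frame is malformed or corrupted."""
    try:
        text = frame.decode("ascii").strip()
        body, checksum_hex = text.split("*")
        if sum(body.encode("ascii")) % 256 != int(checksum_hex, 16):
            return None
        servo_id, angle = body.split(":")
        return int(servo_id), int(angle)
    except (UnicodeDecodeError, ValueError):
        return None


if __name__ == "__main__":
    frame = build_frame(1, 90)
    print(frame)
    print(parse_frame(frame))
```

A text frame like this is easy to read in a serial monitor while debugging, and the checksum lets the receiver discard a line that was garbled in transit.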