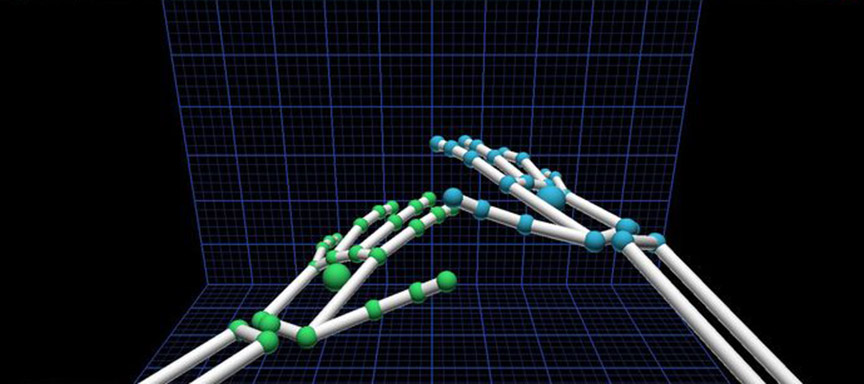

Immersion is everything in a VR experience. Since your hands don’t actually float in space, we created a new Forearm API that tracks your physical arms. With it, you can show forearms onscreen and create a more realistic experience.

// V2 Tracking

The way we interact with technology is changing, and what we see as resources – wood, water, earth – may one day include digital content. At last week’s API Night at RocketSpace, Leap Motion CTO David Holz discussed our evolution over the past year and what we’re working on. Featured speakers and v2 demos ranged from Unity and creative coding to LeapJS and JavaScript plugins.

In any 3D virtual environment, selecting objects with a mouse becomes difficult when the scene is densely populated and structures are occluded. This is a real problem with anatomy models, where there is no true empty space and organs, vessels, and nerves always sit flush with adjacent structures.