Last week, we took an in-depth look at how the Leap Motion Controller works, from sensor data to the application interface. Today, we’re digging into our API to see how developers can access the data and use it in their own applications. We’ll also review some SDK fundamentals and great resources you can use to get started.

Frames

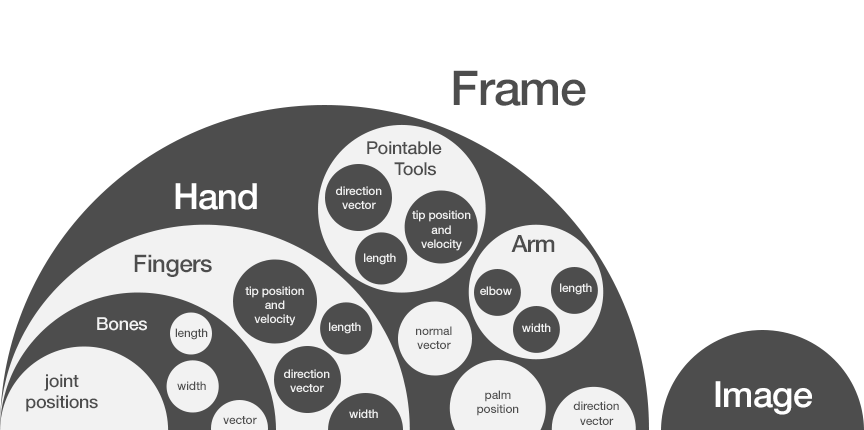

At the most basic level, the Leap Motion API returns the tracking data in the form of frames. Each Frame object contains lists of tracked entities, such as hands, fingers, and tools, as well as objects representing recognized gestures and factors describing the overall motion of hands in the scene. Our software also includes a new Image API that allows you to access the raw sensor data.
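To make the frame structure concrete, here is a hypothetical Python model of a frame that holds lists of hands, tools, and recognized gestures, plus a helper that summarizes one frame. The class and field names, and the millimetre and microsecond units, are assumptions for illustration. They are not the SDK's actual types.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical stand-ins for the SDK's Frame, Hand, Tool, and Gesture types.
Vector = Tuple[float, float, float]  # (x, y, z), assumed to be in millimetres


@dataclass
class Hand:
    id: int
    palm_position: Vector


@dataclass
class Tool:
    id: int
    tip_position: Vector


@dataclass
class Gesture:
    id: int
    kind: str  # "swipe", "circle", or "tap"


@dataclass
class Frame:
    id: int
    timestamp_us: int  # capture time in microseconds (assumed unit)
    hands: List[Hand] = field(default_factory=list)
    tools: List[Tool] = field(default_factory=list)
    gestures: List[Gesture] = field(default_factory=list)


def summarize(frame: Frame) -> str:
    """Describe what was tracked and recognized in a single frame."""
    kinds = ", ".join(g.kind for g in frame.gestures) or "none"
    return (f"frame {frame.id}: {len(frame.hands)} hand(s), "
            f"{len(frame.tools)} tool(s), gestures: {kinds}")


if __name__ == "__main__":
    frame = Frame(id=1, timestamp_us=0,
                  hands=[Hand(id=10, palm_position=(0.0, 200.0, 0.0))],
                  gestures=[Gesture(id=5, kind="swipe")])
    print(summarize(frame))  # frame 1: 1 hand(s), 0 tool(s), gestures: swipe
```

In a real app, you would receive one such frame per tracking update and read only the lists you care about.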

Positional tracking

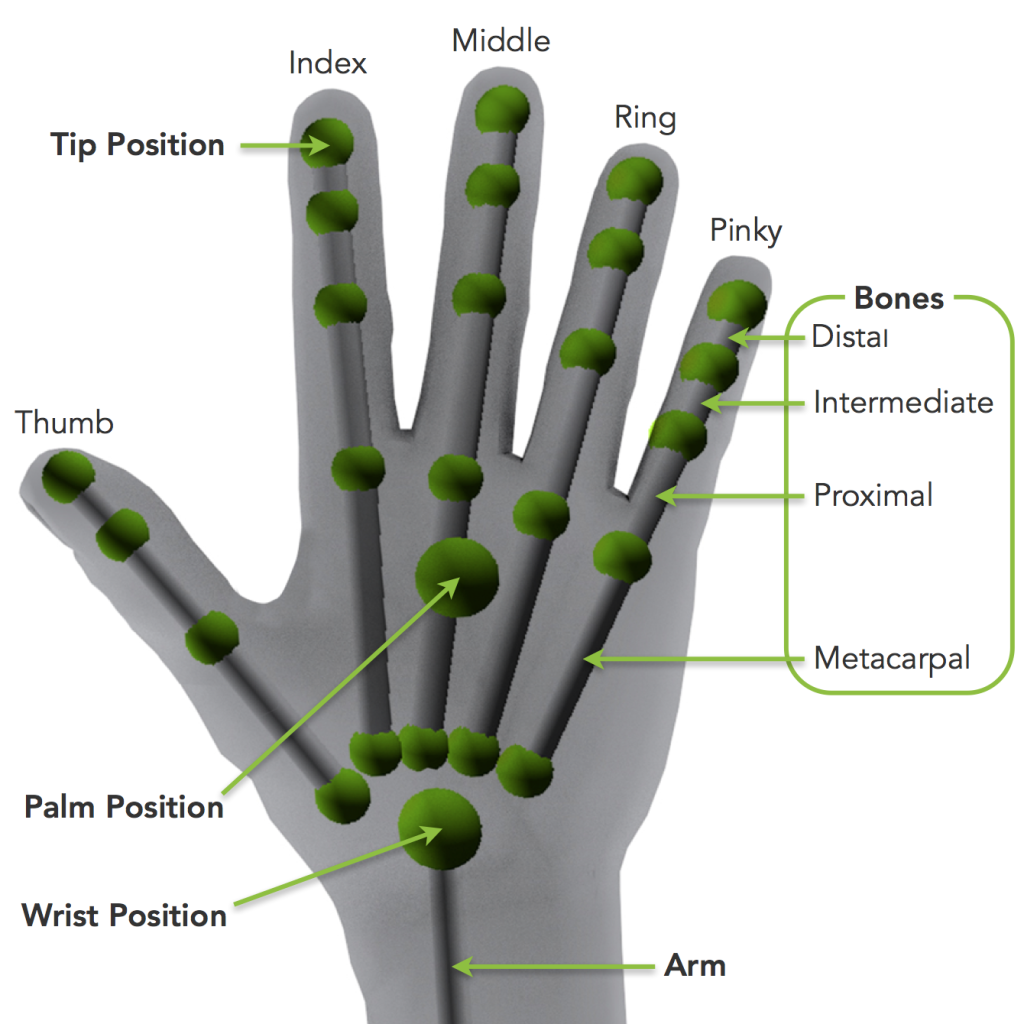

At the top of this post, you can see some of the positional tracking data available through the API, as well as how it’s organized. Every tracked entity in the Leap Motion interaction space falls within a hierarchy that starts with the hand. A Hand object includes:

- palm position and velocity

- direction and normal vectors

- orthonormal basis

- Fingers

  - tip position and velocity

  - direction vector

  - orthonormal basis

  - length and width

  - Bones

    - joint positions

    - orthonormal basis

    - length and width

- Arm

  - wrist and elbow positions

  - direction vector

  - orthonormal basis

  - length and width

- Pointable Tools

  - tip position and velocity

  - direction vector

  - orthonormal basis

  - length and width
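The hierarchy above maps naturally onto a small object model. The Python classes below are hypothetical and are not the SDK's own types. In this sketch, a bone's length is derived from its two joint positions, a finger's tip is the outer joint of its last bone, and the arm's length is the elbow-to-wrist distance. These derivations are conventions of the sketch; the SDK reports these values directly. `make_index_finger` is a hypothetical helper that builds sample data.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical model of Hand > Finger > Bone plus the Arm (not SDK classes).
Vector = Tuple[float, float, float]


@dataclass
class Bone:
    prev_joint: Vector  # joint nearer the wrist
    next_joint: Vector  # joint nearer the fingertip

    @property
    def length(self) -> float:
        # A bone's length follows directly from its two joint positions.
        return math.dist(self.prev_joint, self.next_joint)


@dataclass
class Finger:
    kind: str          # "thumb", "index", "middle", "ring", or "pinky"
    bones: List[Bone]  # ordered from wrist to tip

    @property
    def tip_position(self) -> Vector:
        # In this sketch, the tip is the outer joint of the last bone.
        return self.bones[-1].next_joint


@dataclass
class Arm:
    elbow_position: Vector
    wrist_position: Vector

    @property
    def length(self) -> float:
        return math.dist(self.elbow_position, self.wrist_position)


@dataclass
class Hand:
    palm_position: Vector
    fingers: List[Finger]
    arm: Arm


def make_index_finger() -> Finger:
    """Hypothetical sample data: a straight finger pointing along +y."""
    joints = [(0.0, 0.0, 0.0), (0.0, 30.0, 0.0), (0.0, 55.0, 0.0), (0.0, 75.0, 0.0)]
    bones = [Bone(a, b) for a, b in zip(joints, joints[1:])]
    return Finger("index", bones)


if __name__ == "__main__":
    hand = Hand((0.0, 0.0, 0.0), [make_index_finger()],
                Arm((0.0, -250.0, 0.0), (0.0, 0.0, 0.0)))
    print([b.length for b in hand.fingers[0].bones])  # [30.0, 25.0, 20.0]
    print(hand.fingers[0].tip_position)               # (0.0, 75.0, 0.0)
    print(hand.arm.length)                            # 250.0
```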

Motions and gestures

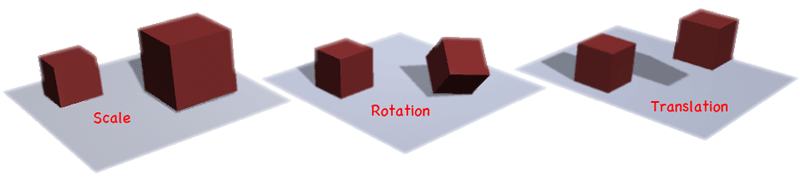

Motions are continuous hand movements: estimates of how the positions of tracked objects (hands, fingers, and tools) change over time. They consist of translation, rotation, and scale. Comparing any two frames that contain the same hand lets you compute the motion that occurred between them. By mapping motion and position data to actions in their applications, developers have controlled flying drones, conducted orchestras, and designed art installations.
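Comparing two frames can be sketched directly. Assume you already have the same hand's palm position, direction vector, and fingertip positions from two frames. The functions below compute a translation vector, the rotation angle between the two direction vectors (its magnitude only, not its axis), and a scale factor based on how far the fingertips spread from the palm. The SDK computes its own translation, rotation, and scale factors, which may be defined differently, so treat this as an illustration of the idea rather than the SDK's method.

```python
import math
from typing import Sequence, Tuple

Vector = Tuple[float, float, float]


def translation(p_old: Vector, p_new: Vector) -> Vector:
    """Displacement of a point (e.g. the palm) between two frames."""
    return (p_new[0] - p_old[0], p_new[1] - p_old[1], p_new[2] - p_old[2])


def rotation_angle(d_old: Vector, d_new: Vector) -> float:
    """Angle in radians between the hand's direction vectors in two frames."""
    dot = sum(a * b for a, b in zip(d_old, d_new))
    cos_theta = dot / (math.hypot(*d_old) * math.hypot(*d_new))
    # Clamp to guard against rounding errors just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))


def scale_factor(palm_old: Vector, tips_old: Sequence[Vector],
                 palm_new: Vector, tips_new: Sequence[Vector]) -> float:
    """Ratio of mean fingertip-to-palm distance; > 1 means the hand opened up."""
    spread_old = sum(math.dist(palm_old, t) for t in tips_old) / len(tips_old)
    spread_new = sum(math.dist(palm_new, t) for t in tips_new) / len(tips_new)
    return spread_new / spread_old


if __name__ == "__main__":
    print(translation((0.0, 200.0, 0.0), (10.0, 210.0, -5.0)))  # (10.0, 10.0, -5.0)
    print(math.degrees(rotation_angle((0.0, 0.0, -1.0), (1.0, 0.0, 0.0))))
```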

Gestures are movement patterns that can be used to trigger certain actions. The Leap Motion API includes swipe, circle, and tap gestures, which are emitted as event objects in frames when recognized. You can use gestures to swipe through recipes or twirl your finger to watch videos.
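The SDK recognizes these gestures for you, so normally you just read them from each frame. To illustrate the idea behind a swipe, here is a deliberately naive detector that fires once whenever palm velocity crosses a speed threshold along one dominant axis. The threshold, the axis convention (+x to the user's right, +y up), the units, and the function names are all assumptions, and this is not the SDK's own recognizer.

```python
from typing import Iterable, List, Optional, Tuple

Vector = Tuple[float, float, float]  # palm velocity in mm/s (assumed units)

SWIPE_MIN_SPEED = 1000.0  # hypothetical threshold in mm/s


def classify_swipe(velocity: Vector) -> Optional[str]:
    """Name the swipe direction if one axis is fast enough and dominant."""
    vx, vy, _ = velocity
    if abs(vx) >= SWIPE_MIN_SPEED and abs(vx) > abs(vy):
        return "swipe_right" if vx > 0 else "swipe_left"
    if abs(vy) >= SWIPE_MIN_SPEED and abs(vy) > abs(vx):
        return "swipe_up" if vy > 0 else "swipe_down"
    return None


def detect_swipes(velocities: Iterable[Vector]) -> List[str]:
    """Emit one event per swipe: only when the hand first becomes fast enough."""
    events: List[str] = []
    active = False
    for v in velocities:
        swipe = classify_swipe(v)
        if swipe is not None and not active:
            events.append(swipe)
        active = swipe is not None
    return events
```

A recipe app could then map `"swipe_left"` and `"swipe_right"` to the next and previous recipe. Tracking the `active` flag keeps one fast movement from triggering an event on every frame it spans.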

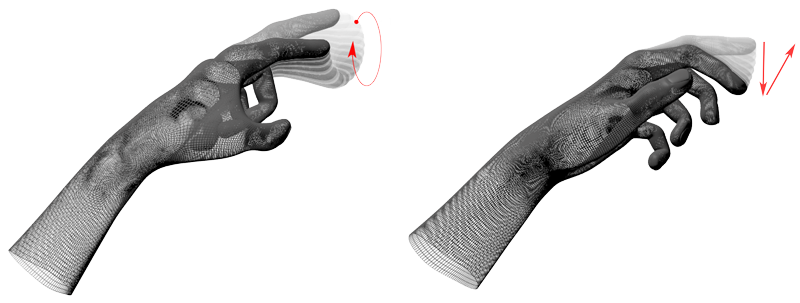

V2 tracking

With V2 tracking, our API now provides a wide range of additional tracking data, including whether a hand is left or right, tracking confidence values, and grab and pinch strength. Finger tracking is now persistent (each hand always has five fingers), digit types are identified (thumb, index, middle, ring, and pinky), and individual bones and joints are tracked.
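Grab strength is a continuous value, so an app usually needs to turn it into a discrete grab/release decision. A common approach is hysteresis: start grabbing above one threshold and release only below a lower one, so the state doesn't flicker when the value hovers near a single cutoff. The sketch below also ignores low-confidence samples. The thresholds, the 0-to-1 ranges, and the `HandSample` type are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical thresholds, chosen for illustration only.
GRAB_ON = 0.8         # start grabbing at or above this strength
GRAB_OFF = 0.6        # release at or below this strength
MIN_CONFIDENCE = 0.5  # ignore samples tracked with lower confidence


@dataclass
class HandSample:
    confidence: float     # assumed 0 (unreliable) to 1 (reliable)
    grab_strength: float  # assumed 0 (open hand) to 1 (fist)


def grab_states(samples: Iterable[HandSample]) -> List[bool]:
    """Return the grab state after each sample, using hysteresis."""
    grabbing = False
    states: List[bool] = []
    for s in samples:
        if s.confidence >= MIN_CONFIDENCE:
            if not grabbing and s.grab_strength >= GRAB_ON:
                grabbing = True
            elif grabbing and s.grab_strength <= GRAB_OFF:
                grabbing = False
        # Low-confidence samples leave the previous state unchanged.
        states.append(grabbing)
    return states
```

The same pattern works for pinch strength; only the thresholds would change.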

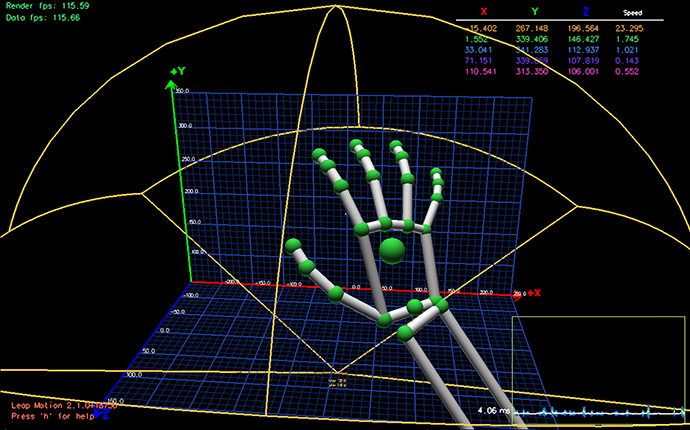

Diagnostic visualizer

Whether you’re testing an app or just want to see the tracking data in action while you wiggle your fingers, the Diagnostic Visualizer is a great resource for any developer. Toggle different settings (press ‘H’ to see all options) to get a feel for the Leap Motion tracking system.

The Leap Motion SDK

Once you download our SDK, you can start building right away with extensive libraries, examples, and documentation. It's available for Windows, Mac, and Linux in six languages.

Our APIs are also extensible to other languages, such as ActionScript 3, Flash/AIR, MATLAB, Ruby, and more. For native app development, you'll find a variety of community-contributed wrappers and libraries on our developer portal, as well as full documentation, tutorials, and examples. Be sure to check out the v2 gallery for new examples and interaction experiments.

Unity

Want to integrate Leap Motion with your Unity project? Check out our setup guide for Unity Free or Pro and our v2 Skeletal Assets, featuring:

- quick and easy Leap Motion integration for your existing projects

- an interaction engine that allows you to grab, rotate, and scale objects, as well as pass them from hand to hand

- customizable hand models and a rigged hand

- several demo scenes and interactions
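Grabbing an object with both hands to scale or rotate it is a common pattern. One simple way to derive those values from palm positions is sketched below. Scale is the ratio of the current palm separation to the separation at grab time, and yaw is the change in heading of the left-to-right vector in the horizontal x-z plane. This is a hypothetical helper, not code from the Skeletal Assets, and the axis convention (and therefore the sign of the yaw) is assumed.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]


def two_hand_transform(left_start: Vector, right_start: Vector,
                       left_now: Vector, right_now: Vector) -> Tuple[float, float]:
    """Return (scale, yaw) for an object held between two palms.

    scale: current palm separation divided by separation at grab time.
    yaw:   change in heading of the left-to-right vector in the x-z plane,
           in radians, wrapped to [-pi, pi].
    """
    scale = math.dist(left_now, right_now) / math.dist(left_start, right_start)
    heading_start = math.atan2(right_start[2] - left_start[2],
                               right_start[0] - left_start[0])
    heading_now = math.atan2(right_now[2] - left_now[2],
                             right_now[0] - left_now[0])
    delta = heading_now - heading_start
    yaw = math.atan2(math.sin(delta), math.cos(delta))  # wrap the angle
    return scale, yaw
```

Wrapping the angle keeps the yaw continuous when the heading crosses the ±π boundary, which would otherwise make the object jump by a full turn.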

LeapJS

The Leap Motion software also includes support for modern browsers through a WebSocket connection. You can create in-browser Leap Motion experiences in JavaScript and CoffeeScript. LeapJS, our JavaScript client library, is hosted on a dedicated CDN using versioned URLs to make it easier for you to develop web apps and faster for those apps to load.
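LeapJS handles the WebSocket connection for you. To show roughly what a client does with each message, the sketch below parses a single frame message with Python's `json` module and pulls out each hand's palm position. The field names (`"hands"`, `"type"`, `"palmPosition"`) are assumptions about the message schema, so check them against real messages from your installed service before relying on them.

```python
import json
from typing import Dict, Tuple

Vector = Tuple[float, float, float]


def palm_positions(message: str) -> Dict[str, Vector]:
    """Map each hand's type ("left"/"right") to its palm position.

    Assumed schema: a top-level "hands" array whose entries carry "type"
    and a three-element "palmPosition" array. Messages with no "hands"
    key produce an empty dict.
    """
    frame = json.loads(message)
    positions: Dict[str, Vector] = {}
    for hand in frame.get("hands", []):
        x, y, z = hand["palmPosition"]
        positions[hand["type"]] = (float(x), float(y), float(z))
    return positions


if __name__ == "__main__":
    sample = ('{"id": 42, "timestamp": 1000, "hands": '
              '[{"id": 7, "type": "right", "palmPosition": [10, 200, -5]}]}')
    print(palm_positions(sample))  # {'right': (10.0, 200.0, -5.0)}
```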

With LeapJS, you can access a powerful and flexible plugin framework to share common code and reduce boilerplate. In particular, the rigged hand lets you add an onscreen hand to your web app with just a few lines of code. To get started with LeapJS, check out this beginner's guide and try out our web examples.
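A plugin framework lets you write per-frame logic once and reuse it across apps. The following is a minimal sketch of that pattern in Python. `FramePipeline`, `register`, and the two example plugins are hypothetical and do not reproduce the actual LeapJS plugin API.

```python
from typing import Any, Callable, Dict, List

# Hypothetical types: a frame is a plain dict, a plugin annotates it in place.
Frame = Dict[str, Any]
Plugin = Callable[[Frame], None]


class FramePipeline:
    """Runs every registered plugin, in order, on each incoming frame."""

    def __init__(self) -> None:
        self._plugins: List[Plugin] = []

    def register(self, plugin: Plugin) -> "FramePipeline":
        self._plugins.append(plugin)
        return self  # allow chaining

    def process(self, frame: Frame) -> Frame:
        for plugin in self._plugins:
            plugin(frame)
        return frame


def count_hands(frame: Frame) -> None:
    frame["handCount"] = len(frame.get("hands", []))


def flag_right_hand(frame: Frame) -> None:
    frame["hasRightHand"] = any(h.get("type") == "right"
                                for h in frame.get("hands", []))
```

App code then reads `handCount` or `hasRightHand` from each processed frame instead of recomputing them everywhere.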

Development and design tips

When developing for a new user interface like the Leap Motion Controller, user experience design can make or break your project. Here are some key resources to help guide you:

- Introduction to Motion Control

- Designing Intuitive Applications

- Rethinking Menu Design in the Natural Interface Wild West

- Don’t Settle for Air Pokes: Thinking Outside the Mouse

- The Sensor is Always On

- How to Build Your Own Leap Motion Art Installation

In our experience, the best development process is rapid and iterative. Start by observing interaction styles in other motion-controlled applications and examples. Try to home in on the interactions that are intuitive and easy to learn, and refine these core interactions before adding complexity. An effective strategy is to build rapid prototypes (we use Unity and JavaScript with great success) and test with users early and often. Watch and listen to users, and carefully observe how their expectations differ from your intended use.

Finally, if you run into any problems, or want to share your project, we hope you’ll join us on the community forums. Happy hacking!