How Hand Tracking Works

Posted: September 3, 2020

From the earliest hardware prototypes to the latest Gemini tracking platform, Ultraleap’s hand tracking hardware and software have come a long way. Here’s a look at how raw sensor data is translated into information that developers can use in their applications.

Hand tracking hardware

From a hardware perspective, hand tracking is relatively simple. At the heart of each device are two cameras and a set of infrared LEDs. The LEDs illuminate the scene with infrared light at a wavelength of 850 nanometers, which is outside the visible spectrum, and the cameras capture that light. The LEDs pulse in sync with the camera frame rate, which significantly lowers power use and allows a higher intensity while they are on.
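To see why pulsing helps, consider the duty cycle: an LED that is on for only part of each frame draws proportionally less average power, so the same power budget can drive it harder while it is on. The sketch below works through that arithmetic. The function names and the example figures (a 1 ms pulse at 100 frames per second, a 10% duty cycle) are hypothetical and are not Ultraleap specifications.

```python
# Hypothetical figures for illustration only; not Ultraleap specifications.

def average_led_power(peak_power_w: float, pulse_s: float, frame_rate_hz: float) -> float:
    """Average power of an LED that fires once per camera frame.

    The LED is on for `pulse_s` seconds in every frame period (1 / frame_rate_hz),
    so its average draw is the peak power scaled by the duty cycle.
    """
    frame_period_s = 1.0 / frame_rate_hz
    if not 0.0 < pulse_s <= frame_period_s:
        raise ValueError("pulse must be positive and no longer than a frame")
    duty_cycle = pulse_s / frame_period_s
    return peak_power_w * duty_cycle


def max_peak_power(average_budget_w: float, pulse_s: float, frame_rate_hz: float) -> float:
    """Largest peak power that fits within a fixed average power budget."""
    duty_cycle = pulse_s * frame_rate_hz
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty cycle must be in (0, 1]")
    return average_budget_w / duty_cycle


# A 1 ms pulse at 100 frames per second is a 10% duty cycle: a 1 W peak
# averages about 0.1 W, and a 0.1 W budget allows about a 1 W peak.
avg = average_led_power(1.0, 0.001, 100.0)
peak = max_peak_power(0.1, 0.001, 100.0)
```

The lower the duty cycle, the more headroom there is for a bright, short pulse that coincides with each exposure.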

Ultraleap’s hand tracking modules, the Leap Motion Controller and the Stereo IR 170 Camera Module, work on this principle, as do Varjo’s headsets with embedded Ultraleap hand tracking and VR/AR headsets built on Qualcomm’s XR2 reference designs.

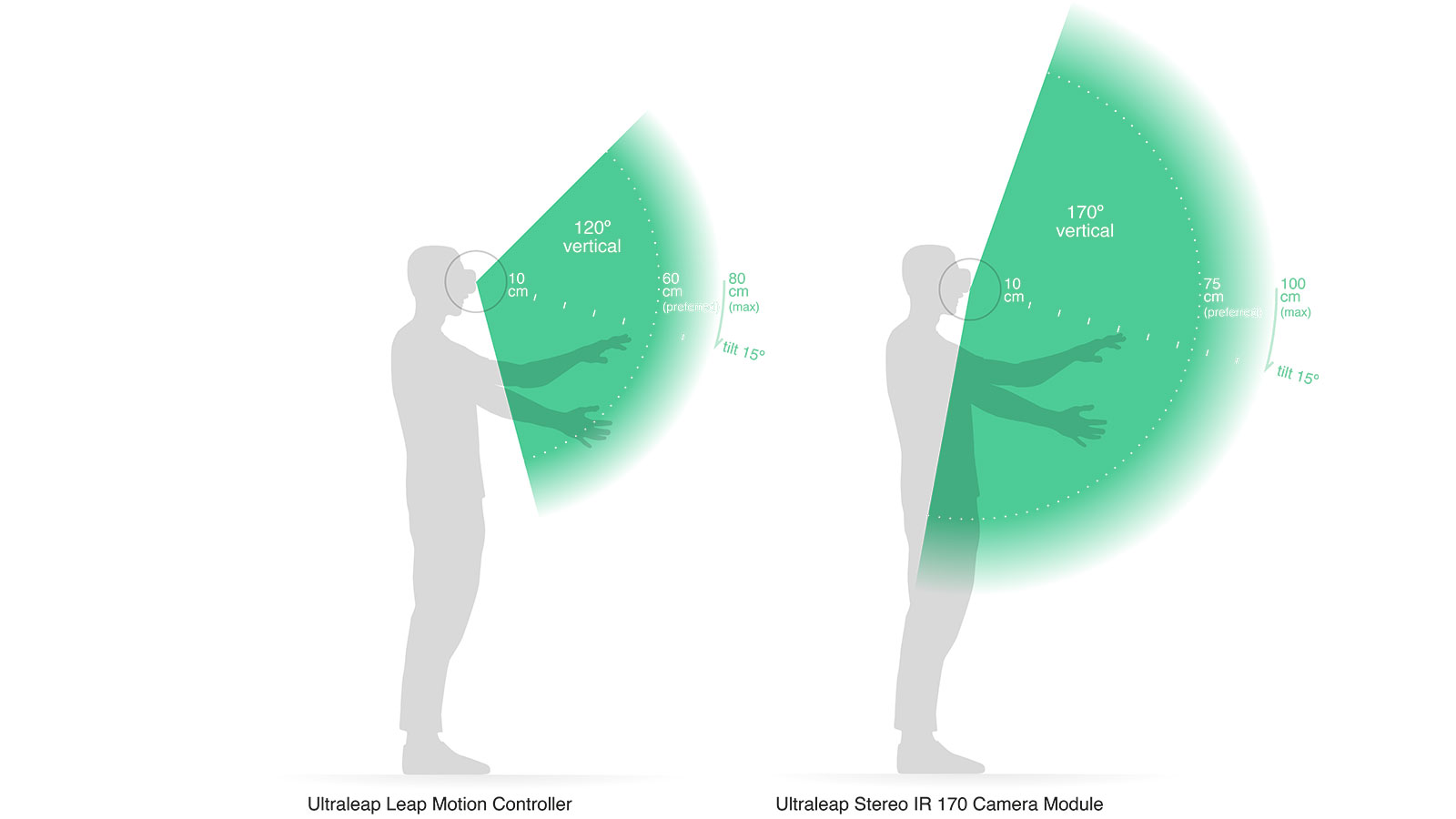

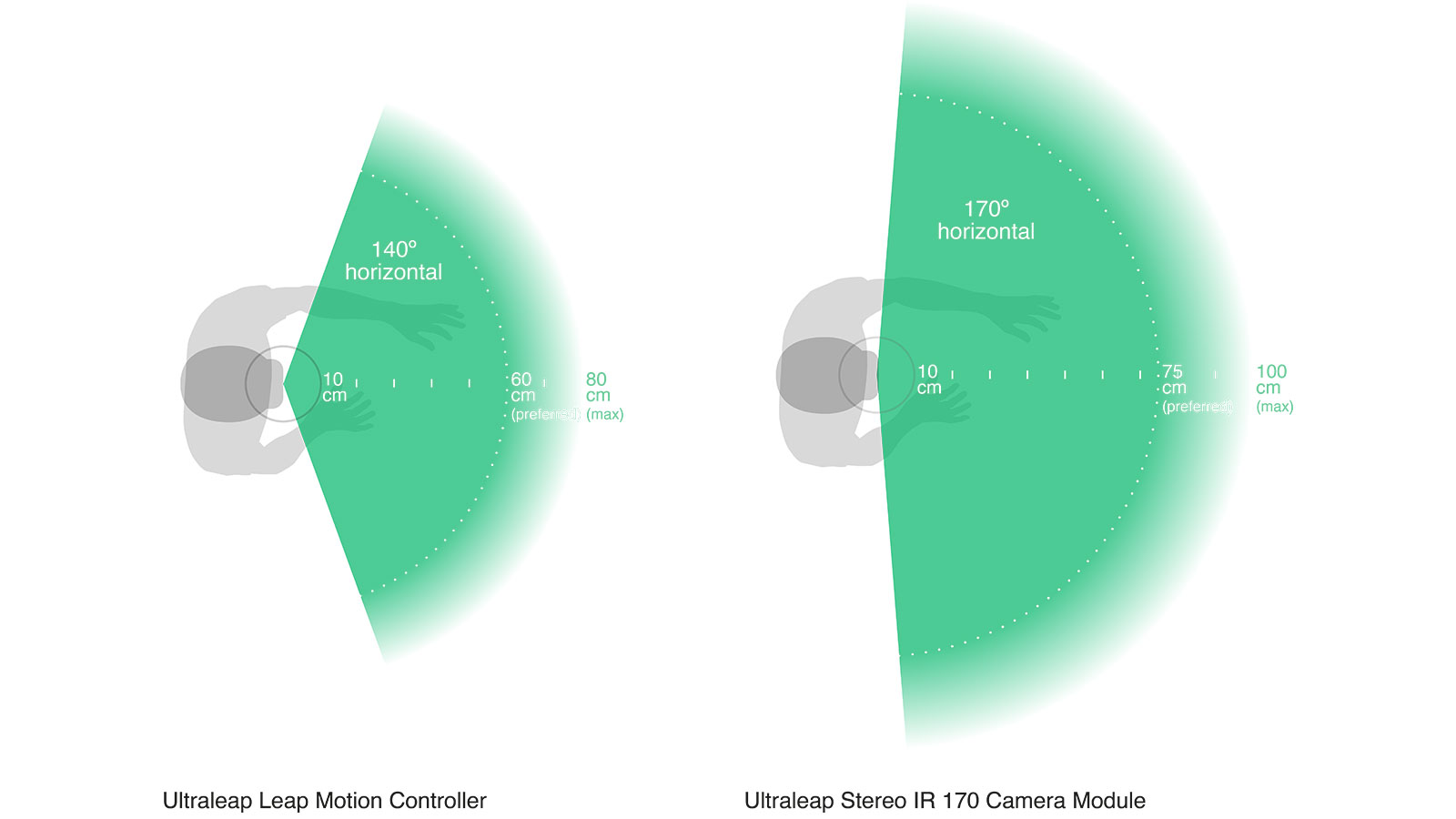

Wide-angle lenses create a large interaction zone within which a user’s hands can be detected. The Leap Motion Controller’s interaction zone extends from 10 cm to 60 cm or more from the device, with a typical field of view of 140×120°. The Stereo IR 170 Camera Module has an even larger interaction zone, extending from 10 cm to 75 cm or more, with a typical field of view of 170×170° (160×160° minimum).

The interaction zone takes the form of an inverted pyramid for the Leap Motion Controller and an inverted, cone-like shape for the Stereo IR 170. This shape is defined by the intersection of the two cameras’ fields of view.
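The pyramid shape follows from simple trigonometry: at distance d, a field of view θ spans 2·d·tan(θ/2). The sketch below uses the Leap Motion Controller figures quoted above to test whether a point lies inside an idealized zone. It assumes a perfect pyramid, treats the range as distance along the device’s central axis, and the axis conventions and function names are hypothetical.

```python
import math

# Figures quoted in the text for the Leap Motion Controller. Which angle is
# "horizontal" and the axis convention (z straight out from the device) are
# assumptions for this sketch.
H_FOV_DEG = 140.0
V_FOV_DEG = 120.0
MIN_RANGE_M = 0.10
MAX_RANGE_M = 0.60


def zone_extent(distance_m: float, fov_deg: float) -> float:
    """Width spanned by a field of view at a given distance: 2 * d * tan(fov / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def in_interaction_zone(x: float, y: float, z: float) -> bool:
    """True if the point (x, y, z), in metres, lies inside the idealized pyramid.

    z is the distance along the device's central axis; x and y are offsets
    across the horizontal and vertical fields of view.
    """
    if not MIN_RANGE_M <= z <= MAX_RANGE_M:
        return False
    half_width = zone_extent(z, H_FOV_DEG) / 2.0
    half_height = zone_extent(z, V_FOV_DEG) / 2.0
    return abs(x) <= half_width and abs(y) <= half_height
```

In this idealized model the 140° axis already spans roughly 3.3 m at a distance of 60 cm, which shows how quickly a wide field of view opens up; how far out hands can actually be tracked is a separate question of range.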

The range is limited by how far the LEDs’ light can usefully travel: illumination weakens with distance, so beyond a certain point it becomes much harder to infer your hand’s position in 3D. LED intensity, in turn, is ultimately limited by the maximum current that can be drawn over the USB connection.
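As a rough model (an assumption, not a description of the device’s optics), treat each LED as a point source whose illumination falls off with the inverse square of distance. Under that model a hand at 60 cm receives 1/36 of the light it would at 10 cm. Since LED output rises roughly with drive current, and range for a fixed brightness threshold grows only with the square root of output, doubling the power extends the range by only about 41%. The helper names below are hypothetical.

```python
import math


def relative_irradiance(distance_m: float, reference_m: float = 0.10) -> float:
    """Illumination at distance_m relative to reference_m for an ideal point source.

    Simplified model (an assumption): irradiance falls off as 1 / d**2, and
    beam shape, lenses, and skin reflectance are ignored.
    """
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return (reference_m / distance_m) ** 2


def range_scale_for_power(power_ratio: float) -> float:
    """Factor by which range grows when LED output is multiplied by power_ratio.

    Keeping the same minimum illumination at the hand, E = k * P / d**2
    gives d_max proportional to sqrt(P).
    """
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return math.sqrt(power_ratio)
```

Light scattered back from the hand weakens again on its way to the cameras, so this one-way model understates how steeply the usable signal drops with distance.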

At this point, the hand tracking device’s USB controller reads the sensor data into its own local memory and performs any necessary resolution adjustments. This data is then streamed via USB to Ultraleap’s hand tracking software.
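The text doesn’t say what form the resolution adjustment takes. One common way to reduce resolution in an image pipeline is 2×2 binning: averaging each block of four pixels into one, which cuts the data to a quarter. The sketch below illustrates that general technique on a small grayscale image; it is not Ultraleap’s firmware, and `bin_2x2` is a hypothetical name.

```python
from typing import List

Image = List[List[int]]  # rows of 8-bit grayscale pixel values


def bin_2x2(image: Image) -> Image:
    """Halve the resolution in each direction by averaging 2x2 pixel blocks.

    Illustrative only: the device's actual resolution adjustment is not
    described in the text. An odd trailing row or column is dropped.
    """
    rows = len(image) // 2 * 2
    cols = len(image[0]) // 2 * 2 if image else 0
    out: Image = []
    for r in range(0, rows, 2):
        out_row = []
        for c in range(0, cols, 2):
            total = (image[r][c] + image[r][c + 1]
                     + image[r + 1][c] + image[r + 1][c + 1])
            out_row.append(total // 4)  # integer mean keeps values in 0..255
        out.append(out_row)
    return out
```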

The data takes the form of a grayscale stereo image of the near-infrared spectrum, with separate images from the left and right cameras. Typically, the only objects you’ll see are those directly illuminated by the device’s LEDs. However, incandescent light bulbs, halogen lamps, and daylight also emit infrared and will light up the scene. You might also notice that certain materials, like cotton shirts, can appear white even though they are dark in the visible spectrum.
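The exact byte layout of the stream isn’t described here, so the sketch below assumes a hypothetical one: 8-bit grayscale pixels, with the complete left image followed by the complete right image in a single buffer. Splitting the buffer then comes down to slicing it at the image boundary.

```python
from typing import List, Tuple


def split_stereo_frame(buffer: bytes, width: int, height: int) -> Tuple[List[bytes], List[bytes]]:
    """Split one stereo buffer into left and right grayscale images.

    Assumed (hypothetical) layout: 8 bits per pixel, the full left image
    (height rows of width bytes) followed by the full right image.
    Each image is returned as a list of rows.
    """
    image_size = width * height
    if len(buffer) != 2 * image_size:
        raise ValueError("buffer size does not match 2 * width * height")

    def rows(start: int) -> List[bytes]:
        # Slice out `height` consecutive rows beginning at byte offset `start`.
        return [buffer[start + r * width: start + (r + 1) * width] for r in range(height)]

    return rows(0), rows(image_size)
```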

Hand tracking software

Once the image data is streamed to your computer, it’s time for some heavy mathematical lifting. Contrary to a popular misconception, our hand tracking platform doesn’t generate a depth map; instead, it applies advanced algorithms directly to the raw sensor data.

The Leap Motion Service is the software on your computer that processes the images. After compensating for background objects (such as heads) and ambient environmental lighting, the images are analyzed to reconstruct a 3D representation of what the device “sees”.
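The article doesn’t detail the reconstruction algorithm, and as noted above the platform doesn’t build a depth map. As a generic illustration of how two views yield 3D information, the sketch below triangulates a single feature matched in both images using textbook stereo geometry, Z = f·B/d. It assumes idealized, rectified images with wide-angle lens distortion already removed, and the `StereoRig` parameters are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StereoRig:
    """Idealized, rectified stereo camera pair (all values hypothetical)."""
    focal_px: float    # focal length, in pixels
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def triangulate(rig: StereoRig, left_xy: Tuple[float, float], right_x: float) -> Tuple[float, float, float]:
    """3D position (metres, left-camera frame) of a feature seen in both images.

    In rectified images a feature lies on the same row in both views, so only
    the horizontal disparity d = x_left - x_right is needed: Z = f * B / d.
    """
    x_left, y_px = left_xy
    disparity = x_left - right_x
    if disparity <= 0:
        raise ValueError("feature must have positive disparity")
    z = rig.focal_px * rig.baseline_m / disparity
    x = (x_left - rig.cx) * z / rig.focal_px
    y = (y_px - rig.cy) * z / rig.focal_px
    return x, y, z
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for near ones, which is another reason precision drops with range.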

Next, the tracking layer matches the data to extract tracking information, such as the positions of fingers. Our hand tracking algorithms interpret the 3D data and infer the positions of occluded objects, such as fingers hidden behind the palm. Filtering techniques are then applied to keep the data smooth and temporally coherent from frame to frame.
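The filtering method isn’t specified. A minimal stand-in is exponential smoothing, which blends each new measurement with the previous estimate; the sketch below applies it to a stream of 3D fingertip positions. The class name and the choice of filter are assumptions made for illustration.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class ExponentialSmoother:
    """Exponentially smooths a stream of 3D positions (e.g. one fingertip).

    alpha in (0, 1]: higher values follow new measurements more closely,
    lower values suppress more jitter but add more lag.
    """

    def __init__(self, alpha: float) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[Vec3] = None

    def update(self, measurement: Vec3) -> Vec3:
        if self.state is None:
            self.state = measurement  # the first sample initializes the filter
        else:
            a = self.alpha
            # Blend each coordinate: new = a * measurement + (1 - a) * previous.
            self.state = tuple(a * m + (1.0 - a) * s for m, s in zip(measurement, self.state))
        return self.state
```

A fixed `alpha` trades jitter against lag. Adaptive filters such as the 1€ (One Euro) filter vary the amount of smoothing with speed, reducing lag during fast motion while still steadying a hand held still.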

The hand tracking algorithms in Gemini (the fifth generation of our core software) are more robust than ever before. One key benefit is improved performance for two-handed interactions.

The Leap Motion Service then feeds the results – expressed as a series of frames, or snapshots, containing all of the tracking data – into a transport protocol.
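A frame can be pictured as a plain data structure holding every hand visible at one instant. The classes below are a hypothetical model for illustration; the real client libraries define their own types and fields, and the units noted in the comments are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Finger:
    name: str  # e.g. "index"
    tip: Vec3  # fingertip position, millimetres (assumed unit)


@dataclass
class Hand:
    id: int
    is_left: bool
    palm_position: Vec3  # millimetres (assumed unit)
    fingers: List[Finger] = field(default_factory=list)


@dataclass
class Frame:
    """One snapshot of tracking data at a single instant."""
    id: int
    timestamp_us: int  # microseconds (assumed unit)
    hands: List[Hand] = field(default_factory=list)

    def hand(self, left: bool) -> Optional[Hand]:
        """Return the first left (or right) hand in the frame, or None."""
        return next((h for h in self.hands if h.is_left == left), None)
```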

Through this protocol, the service communicates with the Leap Motion Control Panel, as well as with native and web client libraries, over a TCP or WebSocket connection. The client library organizes the data into an object-oriented API structure, manages frame history, and provides helper functions and classes. From there, application logic ties into the Leap Motion input, enabling motion-controlled interactive experiences in spatial computing, self-serve kiosks, digital out-of-home advertising, automotive, and many other areas.
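The frame-history part of a client library can be sketched as a bounded buffer fed by the connection’s message handler. The sketch below assumes frames arrive as JSON text messages with a hypothetical `hands` field; it does not open a real connection and does not reproduce Ultraleap’s actual protocol or API. `FrameHistory` and its methods are hypothetical names.

```python
import json
from collections import deque
from typing import Deque, Optional


class FrameHistory:
    """Keeps the most recent tracking frames, newest last.

    Frames are assumed to arrive as JSON text messages; the field names used
    here ("id", "hands") are hypothetical, not a documented protocol.
    """

    def __init__(self, max_frames: int = 60) -> None:
        self._frames: Deque[dict] = deque(maxlen=max_frames)

    def on_message(self, text: str) -> None:
        """Call with each text message received from the connection."""
        message = json.loads(text)
        if "hands" in message:  # ignore non-frame messages such as status events
            self._frames.append(message)

    def frame(self, history: int = 0) -> Optional[dict]:
        """frame(0) is the latest frame, frame(1) the one before it, and so on."""
        if history < 0 or history >= len(self._frames):
            return None
        return self._frames[-1 - history]

    def __len__(self) -> int:
        return len(self._frames)
```

Using `deque(maxlen=...)` means the oldest frame is discarded automatically once the buffer is full, so memory use stays constant however long the application runs.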