Imagine being able to reach out and tweak virtual strings with your hands to create massive waves of light and sound. Last year, my colleague Alejandro Franco and I brought that idea to life at Mexico City’s Digital Cultural Center with Resortes, an interactive installation controlled in real time by participants’ hand gestures.

In this installation, Newton’s laws of motion, which govern the movement we see in everyday life, are simulated to build several elastic strings out of sets of particles, or nodes. The strings behave independently of one another and are set in motion either by each participant’s hand gestures or by real-time audio analysis.
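The post doesn’t show the simulation code, but the idea of a string built from particles can be sketched as a mass-spring chain. The Java sketch below is an illustration under stated assumptions, not the Resortes source: each interior node moves only vertically, its two neighbours pull it like linearised Hooke’s-law springs, the endpoints are fixed, and positions are advanced with semi-implicit Euler integration. The class name `ElasticString` and the stiffness, mass and damping values are hypothetical.

```java
// Hypothetical sketch of one elastic string as a chain of particles (not the Resortes source).
class ElasticString {
    final double[] y;       // vertical displacement of each node
    final double[] v;       // vertical velocity of each node
    final double k;         // spring stiffness between neighbouring nodes (assumed)
    final double mass;      // mass of each node (assumed)
    final double damping;   // velocity multiplier per step, e.g. 0.99 (assumed)

    ElasticString(int nodes, double k, double mass, double damping) {
        this.y = new double[nodes];
        this.v = new double[nodes];
        this.k = k;
        this.mass = mass;
        this.damping = damping;
    }

    // Displace an interior node, as a hand gesture might; the two endpoints stay fixed.
    void pluck(int i, double amount) {
        if (i > 0 && i < y.length - 1) {
            y[i] += amount;
        }
    }

    // Advance the string by dt seconds with semi-implicit Euler integration.
    void step(double dt) {
        double[] force = new double[y.length];
        for (int i = 1; i < y.length - 1; i++) {
            // Linearised Hooke's law: each neighbour pulls node i toward its own height.
            force[i] = k * (y[i - 1] - y[i]) + k * (y[i + 1] - y[i]);
        }
        for (int i = 1; i < y.length - 1; i++) {
            v[i] += force[i] / mass * dt; // Newton's second law: a = F / m
            v[i] *= damping;              // remove a little energy every step
            y[i] += v[i] * dt;            // move using the updated velocity
        }
    }

    public static void main(String[] args) {
        ElasticString string = new ElasticString(20, 50.0, 1.0, 0.995);
        string.pluck(10, 30.0);
        for (int frame = 0; frame < 120; frame++) {
            string.step(1.0 / 60.0); // two seconds at 60 frames per second
        }
        System.out.printf("displacement of node 10 after 2 s: %.3f%n", string.y[10]);
    }
}
```

Several independent `ElasticString` instances would correspond to the independent strings described above. Semi-implicit Euler is used here because, unlike plain explicit Euler, it does not steadily gain energy on an oscillating system at a fixed frame-rate time step; lowering `damping` further below 1 makes a plucked string settle faster.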

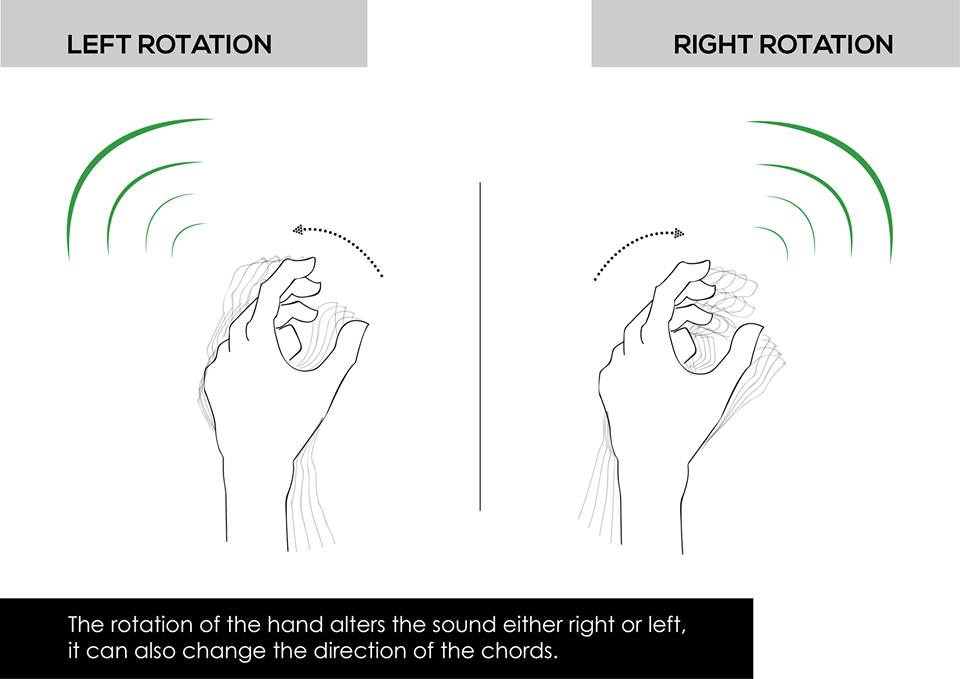

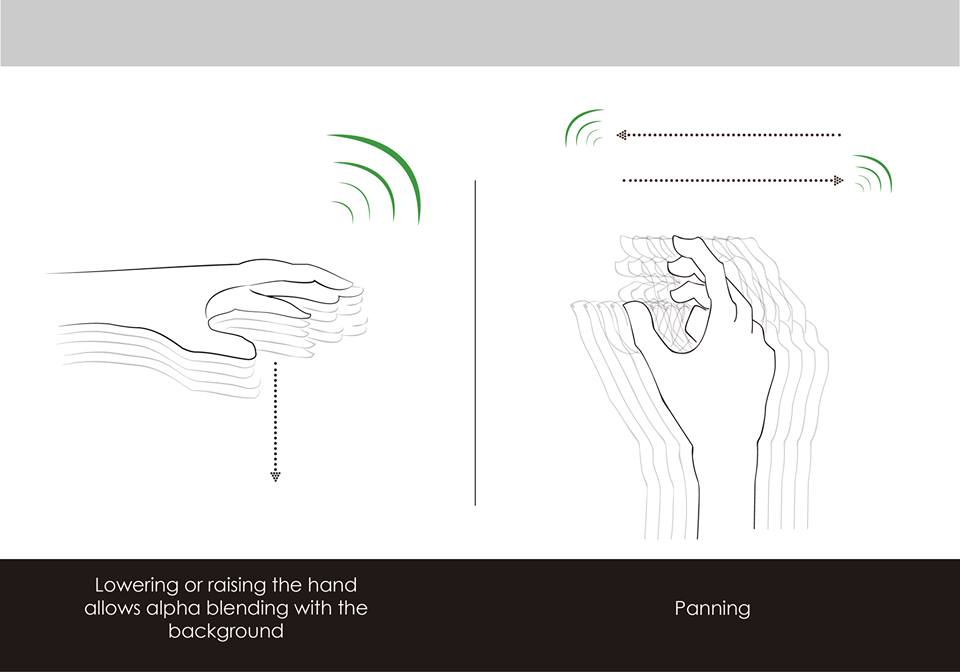

To make Resortes interactive, we placed two Leap Motion Controllers on either side of the projection. Each sensor has a different effect on the musical composition, and at the same time the viewer’s hand movements alter the physics of the strings, much like stretching and plucking elastic strings with your bare hands. When both Leap Motion Controllers are in use at once, the visuals and sounds generated by each sensor are combined into a single composition.
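To make the hand-to-string link more concrete, here is a hypothetical mapping from a tracked palm to string parameters. It assumes the palm position arrives in millimetres relative to the sensor; the ranges used (-200 to 200 mm horizontally, 100 to 400 mm vertically) are illustrative guesses, not values from Resortes or documented Leap Motion limits. The helper `remap` mirrors what Processing’s built-in `map()` function does, and all names here are made up for the example.

```java
// Hypothetical mapping from a tracked palm position to string parameters.
class HandMapping {
    // Linear re-mapping from [inMin, inMax] to [outMin, outMax], like Processing's map().
    static double remap(double value, double inMin, double inMax, double outMin, double outMax) {
        return outMin + (value - inMin) * (outMax - outMin) / (inMax - inMin);
    }

    static double clamp(double value, double lo, double hi) {
        return Math.max(lo, Math.min(hi, value));
    }

    // Assumed horizontal range of -200..200 mm selects an interior node (endpoints stay fixed).
    static int nodeForPalmX(double palmXmm, int nodes) {
        double t = clamp(remap(palmXmm, -200, 200, 1, nodes - 2), 1, nodes - 2);
        return (int) Math.round(t);
    }

    // Assumed height range of 100..400 mm sets how far that node is pulled.
    static double pullForPalmY(double palmYmm, double maxPull) {
        return clamp(remap(palmYmm, 100, 400, 0, maxPull), 0, maxPull);
    }

    public static void main(String[] args) {
        int node = nodeForPalmX(0.0, 20);        // palm centred over the sensor
        double pull = pullForPalmY(250.0, 40.0); // palm at mid height
        System.out.println("pull node " + node + " by " + pull);
    }
}
```

Horizontal position chooses where along the string the pull happens and height sets how strong it is; a second sensor could feed a different parameter in the same way, in the spirit of each controller having its own effect on the piece.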

The piece is divided into two stages: interactive and generative. While neither Leap Motion Controller detects a gesture, the visuals and sound stay inactive and there is only silence. As soon as either device detects a gesture, a sound explosion is triggered and the installation springs to life.

During the interactive stage, visitors can manipulate the audio synths and the physics of the responsive visuals. When the visitor’s hand is no longer detected, another sound explosion takes place and the generative stage begins: an audiovisual sequence lasting between 6 and 8 minutes, depending on various feedback values collected during the interactive stage. Here’s an example of the generative stage in action:

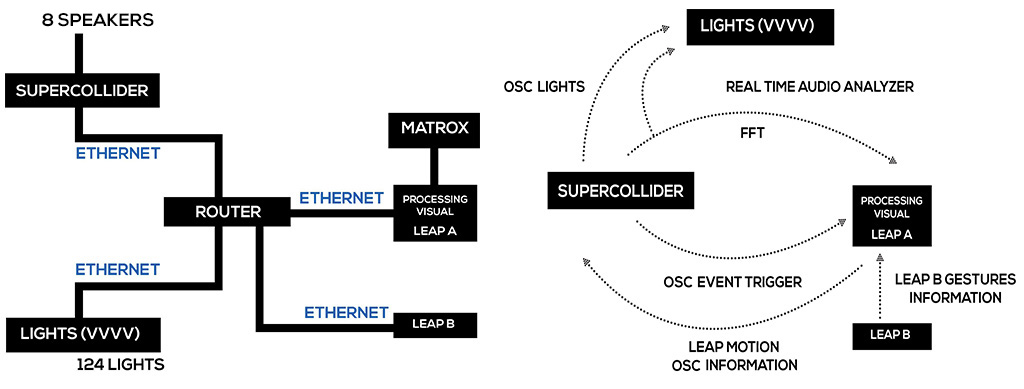
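The silence, interactive and generative phases behave like a small state machine. The sketch below expresses it under assumptions the post doesn’t confirm: a hypothetical `activity` value stands in for the feedback values, grows while a hand is tracked and stretches the generative stage from 6 to 8 minutes; gestures during the generative stage are ignored; and the piece returns to silence when that stage ends. `explode()` only counts where the sound explosions would be triggered.

```java
// Hypothetical state machine for the three phases of the piece.
class InstallationState {
    enum Stage { SILENCE, INTERACTIVE, GENERATIVE }

    Stage stage = Stage.SILENCE;
    double activity = 0.0;              // hypothetical feedback value in [0, 1]
    double generativeSecondsLeft = 0.0; // remaining length of the generative stage
    int explosions = 0;                 // how many sound explosions have been triggered

    // Call once per frame with whether any sensor currently sees a hand.
    void update(boolean handDetected, double dt) {
        switch (stage) {
            case SILENCE:
                if (handDetected) {
                    explode();
                    activity = 0.0;
                    stage = Stage.INTERACTIVE;
                }
                break;
            case INTERACTIVE:
                if (handDetected) {
                    // Assumption: a full minute of interaction saturates the feedback value.
                    activity = Math.min(1.0, activity + dt / 60.0);
                } else {
                    explode();
                    // Map activity in [0, 1] to a generative stage of 6 to 8 minutes.
                    generativeSecondsLeft = 60.0 * (6.0 + 2.0 * activity);
                    stage = Stage.GENERATIVE;
                }
                break;
            case GENERATIVE:
                // Assumption: gestures are ignored until the generative stage finishes.
                generativeSecondsLeft -= dt;
                if (generativeSecondsLeft <= 0.0) {
                    stage = Stage.SILENCE;
                }
                break;
        }
    }

    // Placeholder for triggering the sound explosion; here it only counts them.
    void explode() {
        explosions++;
    }

    public static void main(String[] args) {
        InstallationState piece = new InstallationState();
        piece.update(true, 1.0 / 60.0);  // a hand appears
        piece.update(true, 30.0);        // the visitor plays for 30 s
        piece.update(false, 1.0 / 60.0); // the hand leaves
        System.out.println(piece.stage + ", " + piece.generativeSecondsLeft + " s left");
    }
}
```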

To create Resortes, we set up a local network connecting four computers, with a constant flow of information between the applications running on them. For the visuals, we used Processing 2.0 with OpenGL and GLSL, along with libraries such as oscP5 and the Leap Motion library for Processing.
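Much of that traffic travels as OSC messages; on the Processing side, oscP5 handles encoding and sending them. The hand-written encoder below is only meant to show what goes over the wire, following the OSC 1.0 layout: an address string, a type-tag string starting with a comma, then big-endian 32-bit float arguments, with every string null-terminated and zero-padded to a multiple of four bytes. The address `/resortes/onset` and port 12000 are hypothetical, not the installation’s actual configuration.

```java
import java.io.ByteArrayOutputStream;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Minimal OSC 1.0 message encoder for float arguments (illustration only).
class OscEncoder {
    // OSC-string: ASCII bytes, at least one null terminator, padded to a multiple of 4 bytes.
    static void writeString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        int padded = (bytes.length + 4) & ~3;
        for (int i = bytes.length; i < padded; i++) {
            out.write(0);
        }
    }

    // Encode an address plus float arguments (type tag 'f' for each one).
    static byte[] encode(String address, float... values) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, address);
        StringBuilder tags = new StringBuilder(",");
        for (int i = 0; i < values.length; i++) {
            tags.append('f');
        }
        writeString(out, tags.toString());
        for (float value : values) {
            // ByteBuffer is big-endian by default, as OSC requires.
            out.write(ByteBuffer.allocate(4).putFloat(value).array(), 0, 4);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) throws Exception {
        byte[] packet = encode("/resortes/onset", 0.8f); // hypothetical address
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(packet, packet.length,
                    InetAddress.getLoopbackAddress(), 12000)); // hypothetical port
        }
    }
}
```

Because OSC is usually carried over UDP, each message is a single self-contained datagram, which suits a stream of frequent, small real-time updates between machines on a local network.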

The audio was made in SuperCollider with an open-source library called MIDetectorOSC, which sends musical information to other applications as OSC messages. This keeps the audio and video tightly synchronized in real time. Finally, we used VVVV to control the lights, and two computers ran the two Leap Motion Controllers and shared their data. Here’s a breakdown of the setup:

With Resortes, we wanted the audience to feel that they could control a string with their bare hands and manipulate the physics of the environment. The Leap Motion Controller gave us a form of expression that otherwise wouldn’t have been possible. What do you think about the artistic possibilities of a touchless interface? Post your thoughts in the comments section below.

Visuals and Interaction: Thomas Sanchez Lengeling

Audio: Alejandro Franco Briones

Lights: Salvador Chávez

Camera: Edgardo Dander

Illustration: Ana Karen G Barajas

Production: COCOLAB

Held at the Mexican Mutek Festival 2013

An earlier version of this post appeared at codigogenerativo.com.