One of the core design philosophies at Leap Motion is that the most intuitive and natural interactions are direct and physical. Manipulating objects with our bare hands lets us leverage a lifetime of physical experience, minimizing the learning curve for users. But there are times when virtual objects will be farther away than arm’s reach, beyond the user’s range of direct manipulation. We can force users to walk over to access those objects – or we could give them superpowers!

For our latest interaction design sprint, we prototyped three ways of summoning distant objects to bring them within arm’s reach. The first is a simple animated summoning technique, well-suited to interacting with single objects. The second gives you telekinetic powers, while the third virtually augments your body’s capabilities.

Experiment #1: Animated Summoning

Our first experiment looked at creating an efficient way to select a single static distant object, then summon it directly into your hand. After inspecting or interacting with it, you could dismiss it, sending it back to its original position. The use case here would be something like selecting and summoning an object from a shelf then having it return automatically – useful for gaming, data visualization, and educational sims.

This approach involved four distinct stages of interaction: selection, summoning, holding/interacting, and returning.

1. Selection

One of the pitfalls that many VR developers fall into is thinking of our hands as analogous to controllers, and designing interactions that way. Selecting an object at a distance is a pointing task and well-suited to raycasting. However, holding your finger or even your whole hand steady in midair to point accurately is quite difficult, especially if you need to introduce a trigger action.

Rather than simply casting a ray directly from a transform on your hand, we used the head/HMD position as a reference transform, added an offset to approximate a shoulder position, and then projected a ray from the shoulder through the palm position and out toward a target. (Veteran developers will recognize this as the experimental approach first tried with the UI Input Module.)

This allowed for a much more stable projective raycast, which we visualized with a line renderer and a highlight circle that appears around an object when the raycast hits its collider.
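The shoulder-through-palm ray described above can be sketched in a few lines. This is a minimal illustration rather than the project's actual code: the offset values, the axis convention, and the function name `shoulder_ray` are all assumptions, and the offset is applied in world axes instead of being rotated with the head.

```python
import math

# Hypothetical head-to-shoulder offset in metres (x right, y up, z forward).
# Assumption: applied in world axes; a real rig would rotate it with the head.
RIGHT_SHOULDER_OFFSET = (0.15, -0.2, 0.0)


def shoulder_ray(head_pos, palm_pos, shoulder_offset=RIGHT_SHOULDER_OFFSET):
    """Return (origin, unit_direction) of a ray from the estimated shoulder through the palm."""
    shoulder = tuple(h + o for h, o in zip(head_pos, shoulder_offset))
    to_palm = tuple(p - s for p, s in zip(palm_pos, shoulder))
    length = math.sqrt(sum(c * c for c in to_palm))
    if length == 0.0:
        raise ValueError("palm coincides with the estimated shoulder")
    return shoulder, tuple(c / length for c in to_palm)


origin, direction = shoulder_ray(head_pos=(0.0, 1.7, 0.0), palm_pos=(0.15, 1.5, 0.5))
print(origin, direction)
```

Because the ray is defined by two positions that sit roughly an arm's length apart, a small tremor in the palm changes the direction far less than the same tremor would if the ray followed the hand's own orientation.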

In addition to the stabilization by projective raycasting, we added larger proxy colliders to the distant objects. This means we have larger targets that are easier to hit. We also added some logic to the larger proxy colliders so that if the targeting raycast hits a distant object’s proxy collider, we bend the line renderer to end at that object’s center point. The result is a kind of snapping of the line renderer between zones around each target object, which again makes them much easier to select accurately.
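As a rough sketch of the proxy-collider idea, the snippet below treats each distant object as a sphere, tests the ray against an enlarged copy of it, and returns the object's center as the snapped end point for the line. The sphere shape, the `proxy_scale` factor, and the function names are illustrative assumptions, not details from the prototype.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a unit-length ray to the first sphere hit, or None if it misses."""
    to_center = tuple(c - o for c, o in zip(center, origin))
    t_closest = sum(a * b for a, b in zip(to_center, direction))
    if t_closest < 0.0:
        return None  # sphere is behind the ray origin
    dist_sq = sum(a * a for a in to_center) - t_closest * t_closest
    if dist_sq > radius * radius:
        return None
    return max(0.0, t_closest - math.sqrt(radius * radius - dist_sq))


def pick_target(origin, direction, objects, proxy_scale=3.0):
    """objects: iterable of (name, center, radius). Returns (name, snapped_end_point) or None."""
    best = None
    for name, center, radius in objects:
        t = ray_sphere_hit(origin, direction, center, radius * proxy_scale)
        if t is not None and (best is None or t < best[0]):
            best = (t, name, center)
    if best is None:
        return None
    # Snap the end of the line to the object's center, not the raw hit point.
    return best[1], best[2]


shelf = [("cube", (0.2, 0.0, 5.0), 0.1), ("ball", (2.0, 0.0, 5.0), 0.1)]
print(pick_target((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), shelf))
```

In this example the ray passes 20 cm from the cube's center, so it would miss the 10 cm object itself but still lands inside the 30 cm proxy and snaps to the cube.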

After deciding how selection would work, we needed to decide when this ability would activate – since once the object was brought within reach, you would want to switch out of ‘selection mode’ and go back to regular direct manipulation.

Since shooting a ray out of your hand to target something out of reach is quite an abstract interaction, we thought about related physical metaphors or biases that could anchor this gesture. When a child wants something out of their immediate vicinity, their natural instinct is to reach out for it, extending their open hands with outstretched fingers.

We decided to use this action as a basis for activating the selection mode. When your hand is outstretched beyond a certain distance from your head, and your fingers are extended, we begin raycasting for potential selection targets.

To complete the selection interaction, we needed a confirmation action – something to mark that the hovered object is the one we want to select. Continuing with the concept of a child reaching for something beyond their grasp, curling your fingers into a grab pose while hovering an object will select it. As your fingers curl, the hovered object and the highlight circle around it scale down slightly, mimicking a squeeze. When you’ve fully curled your fingers the object pops back to its original scale and the highlight circle changes color to signal a confirmed selection.
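The activation and confirmation heuristics could look something like the sketch below. It assumes each finger reports a curl value between 0 (fully extended) and 1 (fully curled); every threshold and name here is a hypothetical placeholder that would need tuning in practice.

```python
import math

# All thresholds are hypothetical and would need tuning per application.
REACH_THRESHOLD = 0.45    # metres from head to palm before raycasting starts
EXTENDED_CURL_MAX = 0.2   # a finger counts as extended at or below this curl
GRAB_CURL_MIN = 0.9       # average curl that confirms the selection
SQUEEZE_AMOUNT = 0.15     # how much the hovered object shrinks at most


def is_reaching(head_pos, palm_pos, finger_curls):
    """True when the hand is outstretched and every finger is extended."""
    far_enough = math.dist(head_pos, palm_pos) > REACH_THRESHOLD
    return far_enough and all(c <= EXTENDED_CURL_MAX for c in finger_curls)


def hover_feedback(finger_curls):
    """Return (object_scale, confirmed) for the currently hovered object."""
    curl = sum(finger_curls) / len(finger_curls)
    if curl >= GRAB_CURL_MIN:
        return 1.0, True  # pop back to full size and confirm
    return 1.0 - SQUEEZE_AMOUNT * curl, False


print(is_reaching((0.0, 1.7, 0.0), (0.0, 1.5, 0.6), [0.0] * 5))
print(hover_feedback([0.5] * 5))
print(hover_feedback([1.0] * 5))
```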

2. Summoning

Now that we’ve selected our distant object, we want to summon it into direct manipulation range. Again we thought about existing gestures used in the real world. A common everyday action to signal that we want to bring something closer begins with a flat palm facing upwards followed by curling the fingers quickly.

At the end of the selection action, we have our arm extended, palm facing away toward the distant object, with our fingers curled into a grasp pose. So we defined our heuristics for the summon action as first checking that the palm is facing upward (within a tolerance range). Once that’s happened, we check the curl of the fingers, using how far they’re curled to drive the animation of the object along a path toward the hand. When your fingers are fully curled, the object will have animated all the way into your hand, where it becomes grasped.
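A minimal sketch of these summon heuristics follows. It assumes a y-up coordinate system, a curl value between 0 and 1, and a straight-line path from the anchor to the hand; the angle tolerance and function names are made up for illustration, and the real path may well have been curved.

```python
import math

PALM_UP_MAX_ANGLE_DEG = 40.0  # hypothetical tolerance around "straight up"


def palm_faces_up(palm_normal, max_angle_deg=PALM_UP_MAX_ANGLE_DEG):
    """True when the palm normal is within max_angle_deg of world up (+y)."""
    length = math.sqrt(sum(c * c for c in palm_normal))
    if length == 0.0:
        return False
    return palm_normal[1] / length >= math.cos(math.radians(max_angle_deg))


def summon_position(anchor_pos, hand_pos, palm_normal, curl):
    """Object position along a straight anchor-to-hand path, driven by finger curl."""
    if not palm_faces_up(palm_normal):
        return tuple(anchor_pos)  # the gate has not been passed yet
    t = min(max(curl, 0.0), 1.0)
    return tuple(a + (h - a) * t for a, h in zip(anchor_pos, hand_pos))


print(summon_position((0.0, 1.0, 5.0), (0.0, 1.0, 0.0), (0.0, 1.0, 0.0), 0.5))
```

Note that the curl value is only read once the palm-up check passes, so a hand that is already closed when it turns upward jumps straight to the end of the path.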

During the testing phase we found that after selecting an object – with arm extended, palm facing toward the distant object, and fingers curled into a grasp pose – many users simply flicked their wrists and turned their closed hand toward themselves, as if yanking the object closer. Given our heuristics for summoning (palm facing up, then degree of finger curl driving animation), this action summoned the object all the way into the user’s hand immediately.

This single-motion action to select and summon was more efficient than two discrete motions, though the discrete motions offered more control. Since our heuristics were flexible enough to allow both approaches, we left them unchanged and allowed users to choose how they wanted to interact.

3. Holding and Interacting

Once the object arrives in your hand, all of the extra summoning-specific logic deactivates. Now you have a regular InteractionObject! It can be passed from hand to hand, placed in the world, and interacted with (if it has UI components). As long as the object remains within arm’s reach of the user, it’s not selectable for summoning.

4. Returning

You’re done with this thing – now what? If you grab the object and hold it out at arm’s length (beyond a set radius from your head position) a line renderer appears showing the path the object will take to return to its start position. If you release the object while this path is visible, the object automatically animates back to its anchor position.
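The return logic comes down to one distance check, evaluated while the object is held and again at the moment of release. The sketch below is an assumption-laden illustration: the radius value and the function and state names are hypothetical.

```python
import math

RETURN_RADIUS = 0.55  # hypothetical "arm's length" radius around the head, in metres


def return_path_visible(head_pos, object_pos, is_grasped, radius=RETURN_RADIUS):
    """Show the return path only while the object is held out beyond the radius."""
    return is_grasped and math.dist(head_pos, object_pos) > radius


def on_release(head_pos, object_pos, radius=RETURN_RADIUS):
    """Decide what a released object does: fly back to its anchor or stay put."""
    if math.dist(head_pos, object_pos) > radius:
        return "return_to_anchor"
    return "stay_in_place"


head = (0.0, 1.7, 0.0)
print(return_path_visible(head, (0.0, 1.5, 0.7), is_grasped=True))
print(on_release(head, (0.0, 1.5, 0.3)))
```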

Overall, this execution felt accurate and low effort. It easily enables the simplest version of summoning – selecting, summoning and returning a single static object from an anchor position. However, it doesn’t feel very physical, relying heavily on gestures and with objects animating along predetermined paths between two defined positions.

For this reason it might be best used for summoning non-physical objects like UI, or in an application where the user is seated with limited physical mobility where accurate point-to-point summoning would be preferred.

Experiment #2: Telekinetic Powers

While the first experiment handled summoning and dismissing one static object along a predetermined path, we also wanted to explore summoning dynamic physics-enabled objects. What if we could launch the object towards the user, having it land either in their hand or simply within their direct manipulation range? This execution drew inspiration from Force pulling, Magneto yanking guns out of his enemies’ hands, wizards disarming each other, and many others.

In this experiment, the summonable objects were physics-enabled. This means that instead of sitting up at eye level, like on a shelf, they were most likely resting on the ground. To make selecting them a more low-effort task, we decided to change the selection mode hand pose from an overhanded, open palm-facing-the-target pose to a more relaxed, open palm-facing-up pose with fingers pointed toward the target.

To allow for a quicker, more dynamic summoning, we decided to condense hovering and selecting into one action. Keeping the same underlying raycast selection method, we simply removed the need to make a selection gesture. Keeping the same finger-curling summon gesture meant you could quickly select and summon an object by pointing toward it with an open, upward-facing palm, then curling your fingers.

Originally, we used your hand as the target for the ballistics calculation that launched a summoned object toward you. This felt interesting, but having the object always land perfectly on your hand felt less physics-based and more like the animated summon. To counter this, we changed the target from your hand to an offset in front of you, and added a slight random torque to the object to simulate an explosive launch. Adding a small shockwave and a point light at the launch point, as well as having each object’s current speed drive its emission, completed the explosive effect.
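One common way to do such a ballistics calculation is to fix a flight time and solve the constant-gravity projectile equation for the launch velocity. The sketch below takes that approach as an assumption; the post does not say which formulation was used, and the offset distance, torque range, and function names are illustrative.

```python
import random

GRAVITY = (0.0, -9.81, 0.0)  # m/s^2, assuming y is up


def landing_target(user_pos, forward, distance=0.4):
    """A point a short distance in front of the user (forward assumed unit length)."""
    return tuple(p + f * distance for p, f in zip(user_pos, forward))


def launch_velocity(start, target, flight_time):
    """Initial velocity that carries a projectile from start to target in flight_time seconds."""
    return tuple((t - s) / flight_time - 0.5 * g * flight_time
                 for s, t, g in zip(start, target, GRAVITY))


def position_at(start, velocity, t):
    """Projectile position after t seconds under constant gravity."""
    return tuple(s + v * t + 0.5 * g * t * t for s, v, g in zip(start, velocity, GRAVITY))


def random_torque(max_magnitude, rng=random):
    """A small random torque vector to make each launch tumble differently."""
    return tuple(rng.uniform(-max_magnitude, max_magnitude) for _ in range(3))


start = (0.0, 0.0, 5.0)
target = landing_target(user_pos=(0.0, 1.2, 0.0), forward=(0.0, 0.0, 1.0))
velocity = launch_velocity(start, target, flight_time=1.0)
print(velocity, position_at(start, velocity, 1.0), random_torque(0.5))
```

Fixing the flight time keeps every summon feeling equally snappy regardless of distance; the trade-off is that farther objects launch faster.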

Since the interaction had been condensed so much, it was possible to summon one object after another in quick succession before the first had even landed!

Once you got the hang of it you could even summon objects again, in mid-air, while they were already flying toward you.

This experiment was successful in feeling far more dynamic and physics-based than the animated summon. Condensing the stages of interaction made it feel more casual, and the added variation provided by enabling physics made it more playful and fun. One byproduct of this variation was that objects would occasionally land and still be out of reach, but simply summoning them again would bring them close enough. Although we were still using a gesture to summon, this method felt much more physically based than the previous one.

Experiment #3: Extendo Hands!

For our third and final experiment, we wondered if we could flip the problem. Rather than simply gesturing to command a distant object to come within reach, we could instead give ourselves longer arms and grab the faraway object with our fingers.

The idea was to project the underlying InteractionHands out to the distant object to allow an Interaction Engine grab. Then, once the object was held, the user would be in full control of it, able to pull it back to within their normal reach or even relocate and release it.

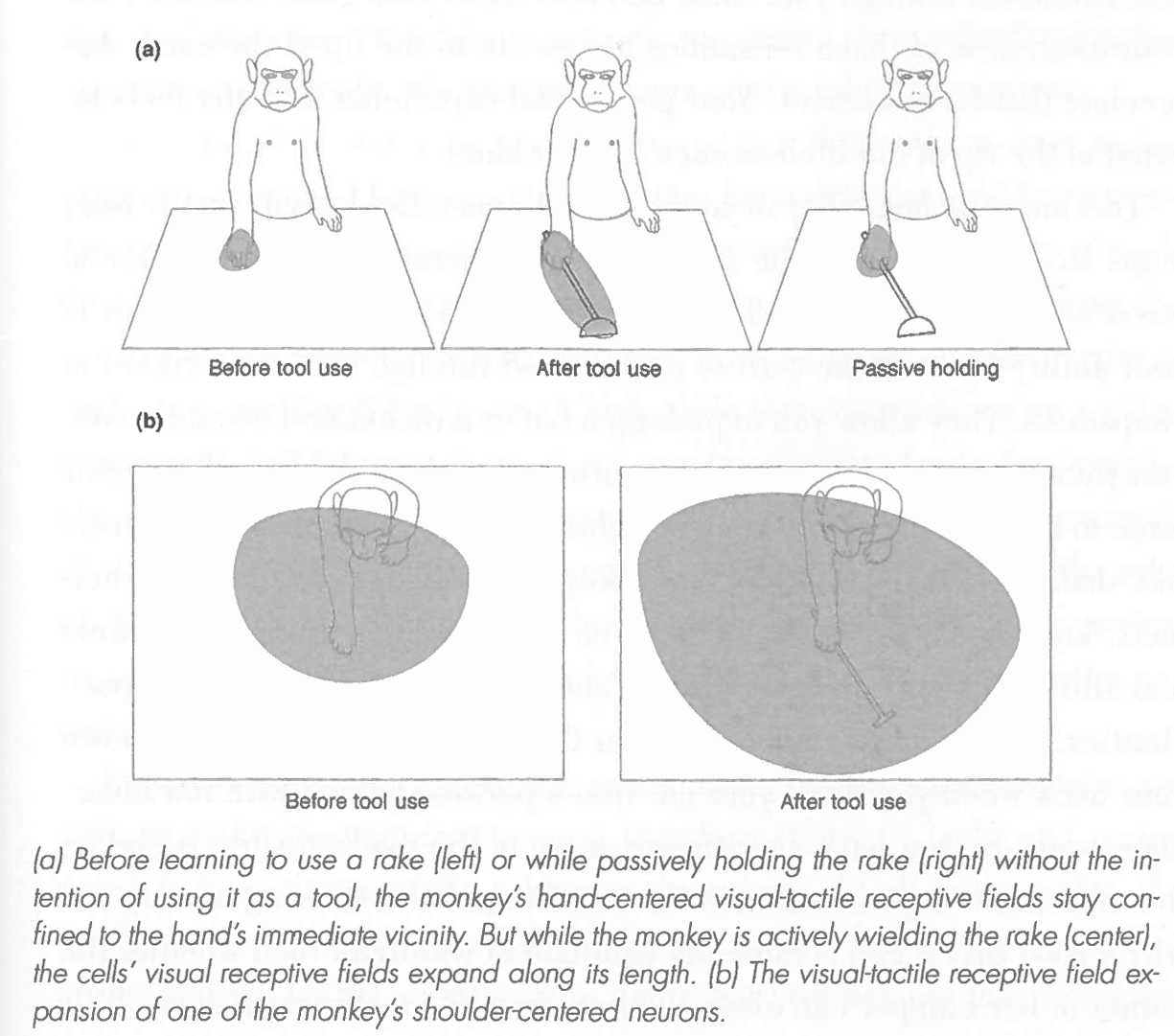

This approach touches on some interesting neuroscience concepts, such as body schema and peripersonal space. Our brains are constantly using incoming sensory information to model both the position of our bodies and their various parts in space, as well as the empty space around us in which we can take immediate action. When we use tools, our body schema expands to encompass the tool and our peripersonal space grows to match our reach when using the tool. When we use a rake or drive a car, those tools literally become part of our body, as far as our brain is concerned.

Diagram from The Body Has a Mind of Its Own by Sandra Blakeslee and Matthew Blakeslee.

Our body schema is a highly adaptable mental model evolved to adopt physical tools. It seems likely that extending our body schema through virtual means would feel almost as natural.

For this experiment, our approach centered around the idea of remapping the space we could physically reach onto a scaled-up projective space, effectively lengthening our arms out to a distant object. Again, the overall set of interactions could be described in stages: selecting/hovering, grabbing, and holding.

1. Selecting/Hovering

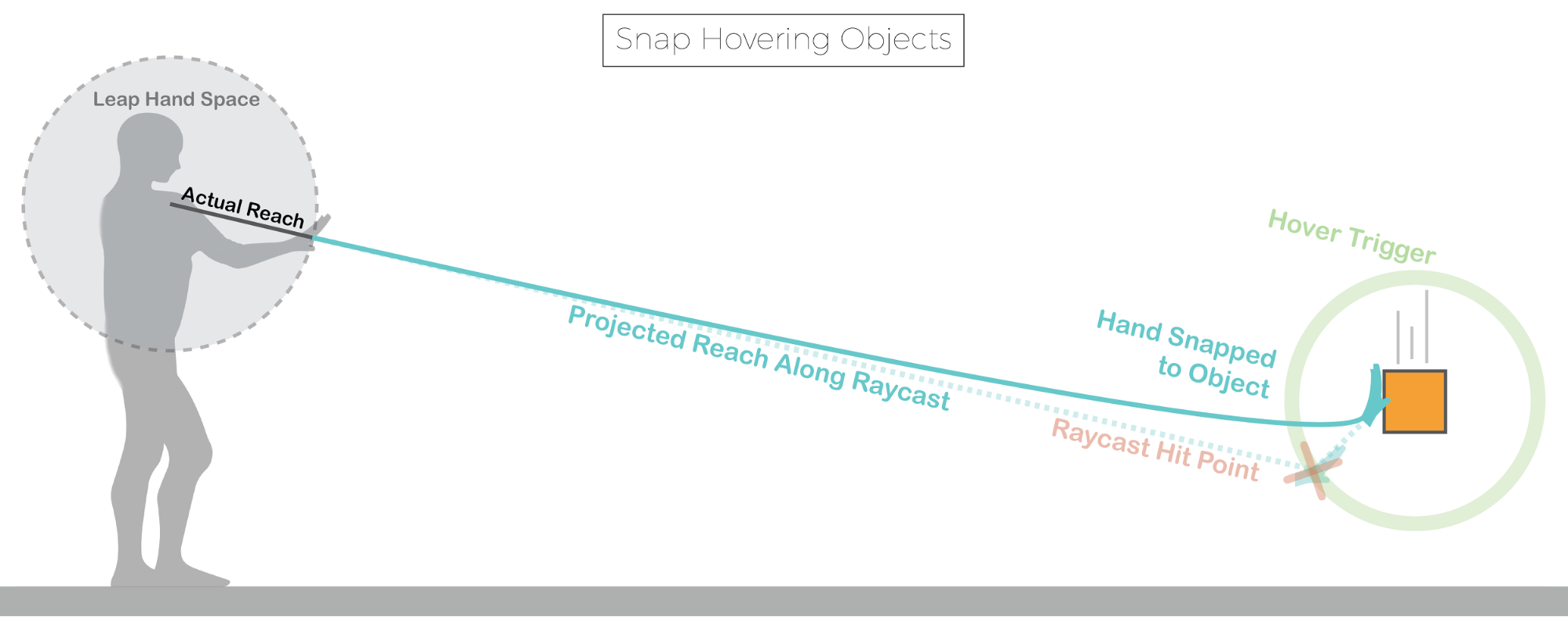

To select an object, we were able to reuse much of the logic from the previous executions, raycasting through the shoulder and palm to hit a larger proxy collider around distant objects. Once the raycast hit an object’s proxy collider, we projected a new blue graphic hand out to the object’s location, along with the underlying InteractionHand, which contains the physics colliders that do the actual grabbing. We used snapping logic similar to that of the previous executions so that when an object was hovered, the blue projected hand snapped to a position slightly in front of the object, ready to grab it.

Raycasting against a distant object’s proxy collider, sending a projected hand out and snapping it to a position directly in front of the object ready to grab it.

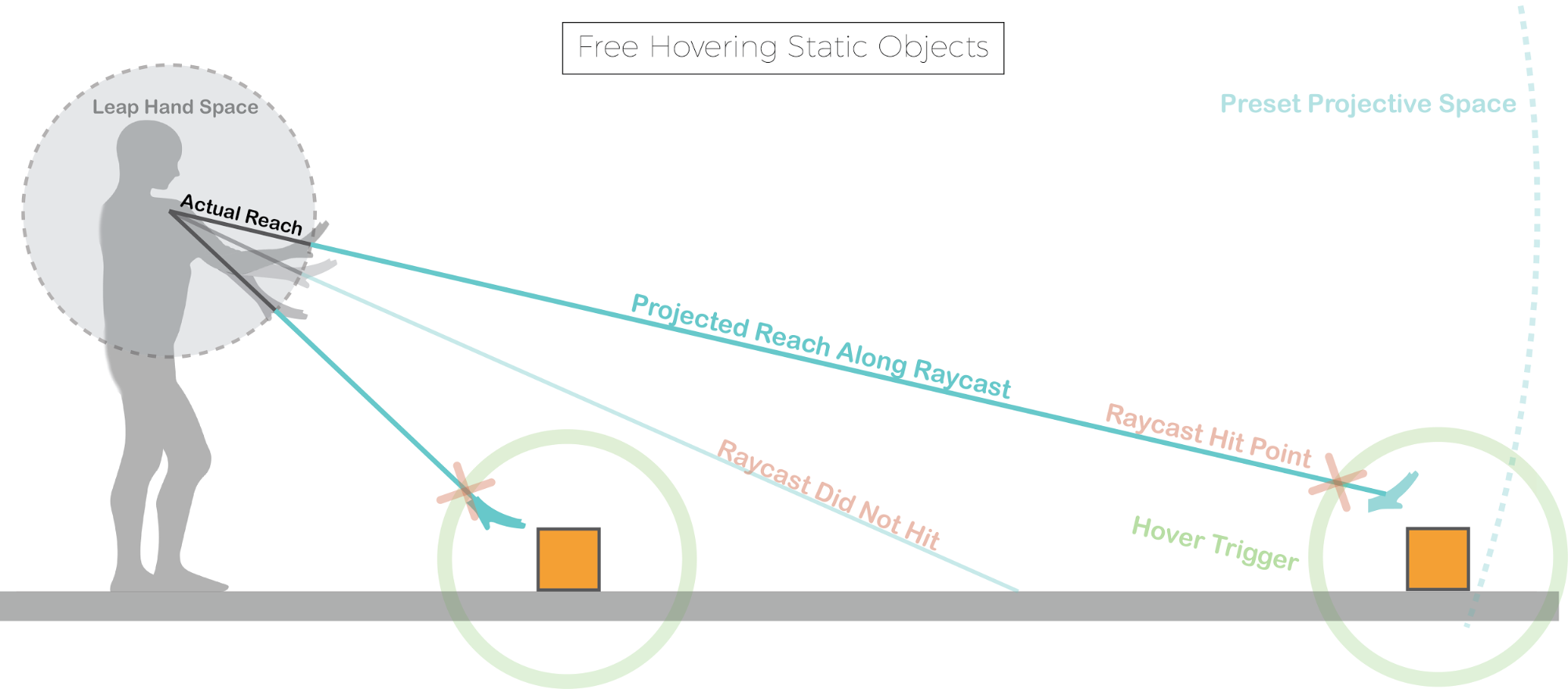

As an alternative, we looked at an approach without snapping where we would hover over a distant object and project out a green hand, but let the user retain full control of their hand. The idea was to allow the full freedom of Interaction Engine manipulation, including soft contact, for distant objects.

To do this we remapped the reachable range of your hands onto a preset projective space that was large enough to encompass the farthest object. Then whenever your raycast hit an object’s proxy collider, we would simply send out the projected hand to its corresponding position within the projective space, letting it move freely so long as your raycast was still hitting the proxy collider. This created a small bubble around each object where free hovering with a projected hand was possible.

Free hovering distant objects to allow soft contact for distant objects
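The remapping used for free hovering can be approximated as a uniform scale of the hand's offset from the shoulder. The sketch below assumes that formulation, with hypothetical values for arm reach and the size of the projective space; the prototype's actual mapping is not specified in this post.

```python
def project_hand(shoulder, hand, arm_reach, projective_radius):
    """Scale the shoulder-to-hand offset so that full arm reach maps to projective_radius."""
    scale = projective_radius / arm_reach
    return tuple(s + (h - s) * scale for s, h in zip(shoulder, hand))


shoulder = (0.0, 1.5, 0.0)
hand = (0.0, 1.5, 0.35)  # hand held half of a 0.7 m reach in front of the shoulder
print(project_hand(shoulder, hand, arm_reach=0.7, projective_radius=6.0))
```

With these example numbers the scale factor is about 8.6, so a 1 cm movement of the real hand moves the projected hand roughly 8.6 cm. That amplification is worth keeping in mind when judging how controllable the projected hand feels.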

This action felt interesting but ended up being quite difficult to control. Regular soft contact interaction with virtual objects takes a bit of getting used to – since from the perspective of a game engine, your hands are immovable objects with unstoppable force. This effect is multiplied when operating in a projectively scaled-up space. A small movement of your real hand is magnified by how far away your projective hand is. Often, when trying to roll our hand over a distant object, we instead ended up forcefully slapping it away. After some tests we removed free hover and stuck with snap hovering.

2. Grabbing

Grabbing in this execution was as simple as grabbing any other virtual object using the Interaction Engine. Since we’d snapped the blue projected hand to a convenient transform directly in front of the distant object, all we had to do was grab it. Once the object was held we moved into the projective space held behaviour that was the real core of this execution.

3. Holding

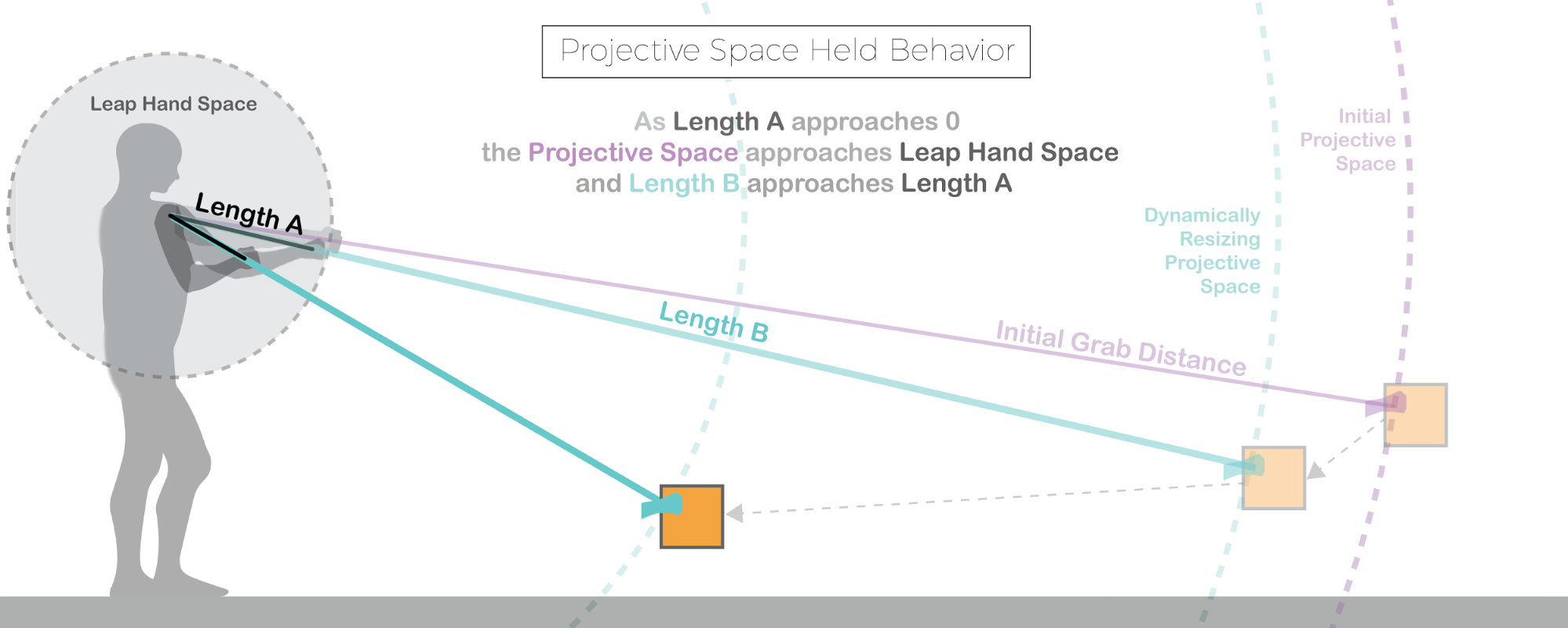

At the moment you grab a distant object, the distance to that object is used to create a projective space, onto which your actual reach is remapped. This projective space means that moving your hand in an arc will move the projected hand along the same arc within the projected space.

However, as you bring your hand toward yourself the projective space dynamically resizes. As the distance between your actual hand and a reference shoulder position approaches zero, the projective space approaches your actual reach space.

Then, once your hand is almost as far back as you can bring it and the projective space is almost equal to your actual reach space, your projected hand fuses into your actual hand and you are left holding the object directly.

The logic behind this dynamically resizing projective space held behaviour sounds quite complicated, but in practice feels like a very natural extension of your hands.
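One simple way to get this behaviour is to interpolate the scale factor linearly between its value at grab time and 1 as the hand approaches the shoulder. The sketch below does exactly that; the linear blend, the fuse distance, and all names are assumptions made for illustration, and the prototype may use a different curve.

```python
FUSE_DISTANCE = 0.2  # hypothetical hand-to-shoulder distance at which the hands fuse


def grab_scale(object_distance, hand_distance_at_grab):
    """Scale that places the projected hand on the object at the moment of grabbing."""
    return object_distance / hand_distance_at_grab


def current_scale(initial_scale, hand_distance, hand_distance_at_grab):
    """Blend from initial_scale (hand at grab distance) down to 1 (hand at the shoulder)."""
    t = min(max(hand_distance / hand_distance_at_grab, 0.0), 1.0)
    return 1.0 + (initial_scale - 1.0) * t


def projected_distance(initial_scale, hand_distance, hand_distance_at_grab):
    """How far from the shoulder the projected hand, and the held object, currently is."""
    return hand_distance * current_scale(initial_scale, hand_distance, hand_distance_at_grab)


def is_fused(hand_distance):
    """Once the real hand is pulled in this close, drop the projection entirely."""
    return hand_distance <= FUSE_DISTANCE


s0 = grab_scale(object_distance=6.0, hand_distance_at_grab=0.6)
for d in (0.6, 0.4, 0.2):
    print(d, projected_distance(s0, d, 0.6), is_fused(d))
```

Because both the hand distance and the scale shrink together, the held object approaches faster than the hand retracts, and the projected and real hands coincide by the time the fuse distance is reached only approximately; a real implementation would likely smooth that final hand-off.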

After a bit of practice these extendo hands begin to feel almost like your regular hands just with a longer reach. They make distant throwing, catching, and relocation possible and fun!

Extendo arms feel quite magical. After a few minutes adjusting to the activation heuristics, you could really feel your sense of peripersonal space expand to encompass the whole environment. Objects previously out of reach were now merely delayed by the extra second it took for your projected hand to travel over to them. More so than the other two executions, this one felt like a true virtual augmentation of your body’s capabilities. We look forward to seeing more work in this area as our body schemas are stretched to their limits and infused with superpowers.

Photo credits: Leap Motion, picturesbymom.com. An abridged version of this post was originally published on RoadtoVR. A Chinese version is also available.