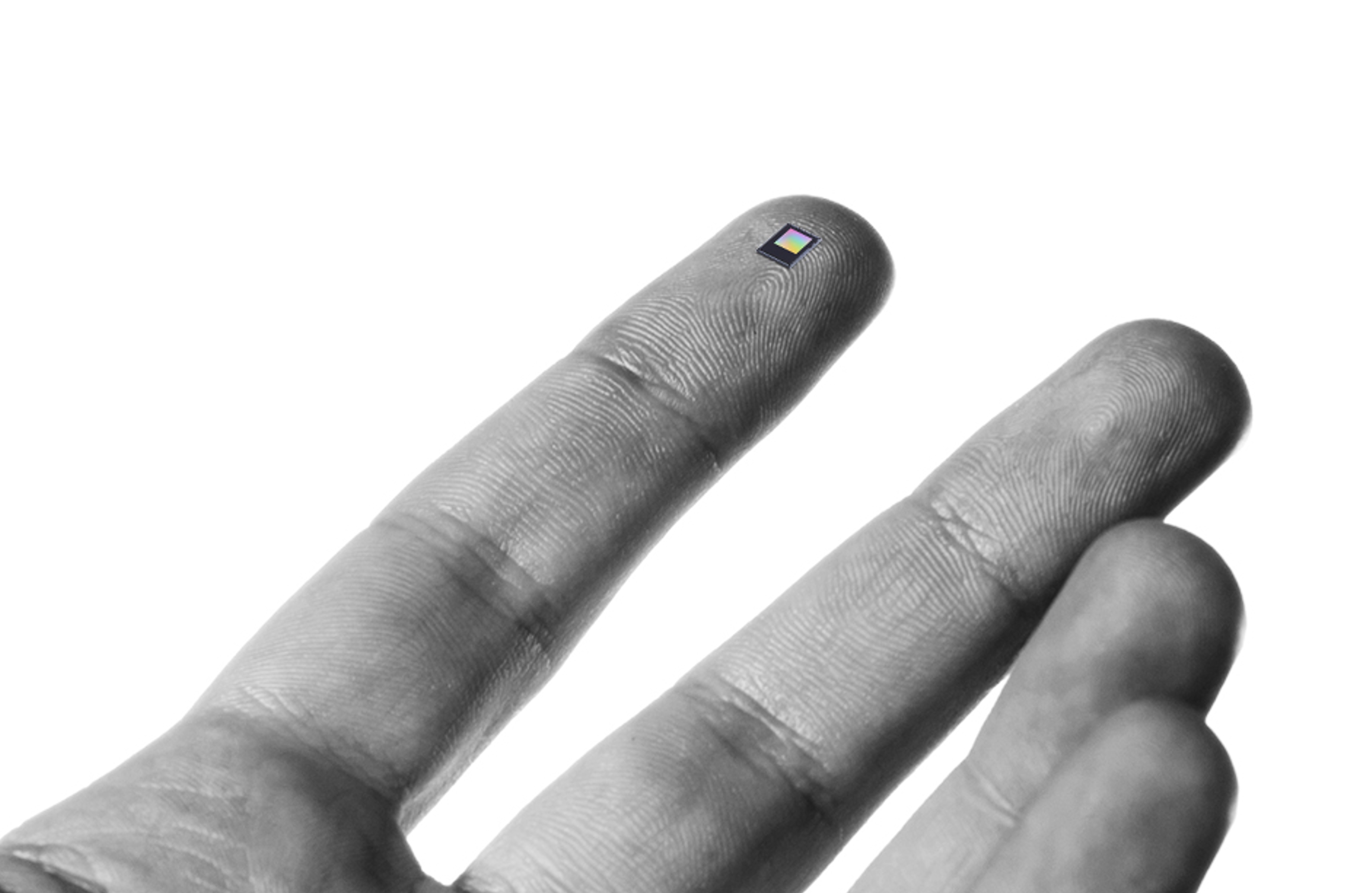

Say hello to the second generation of our iconic hand tracking camera

We remove boundaries between physical and digital worlds - for anyone, anywhere.

Say goodbye to hardware… Say hello to human technology.

Our hand tracking and haptics are powering the next wave of human potential. No controllers. No wearables. No touchscreens. Just natural interaction.

Say goodbye to hardware… Say hello to human technology.

Our hand tracking and haptics are powering the next wave of human potential. No controllers. No wearables. No touchscreens. Just natural interaction.

WORLD'S BEST HAND TRACKING

Leap Motion Controller 2

Say hello to the second generation of Ultraleap's iconic hand tracking camera and the future of natural interaction. Say goodbye to clunky controllers. Secure your Leap Motion Controller 2 today.

Touchless interaction

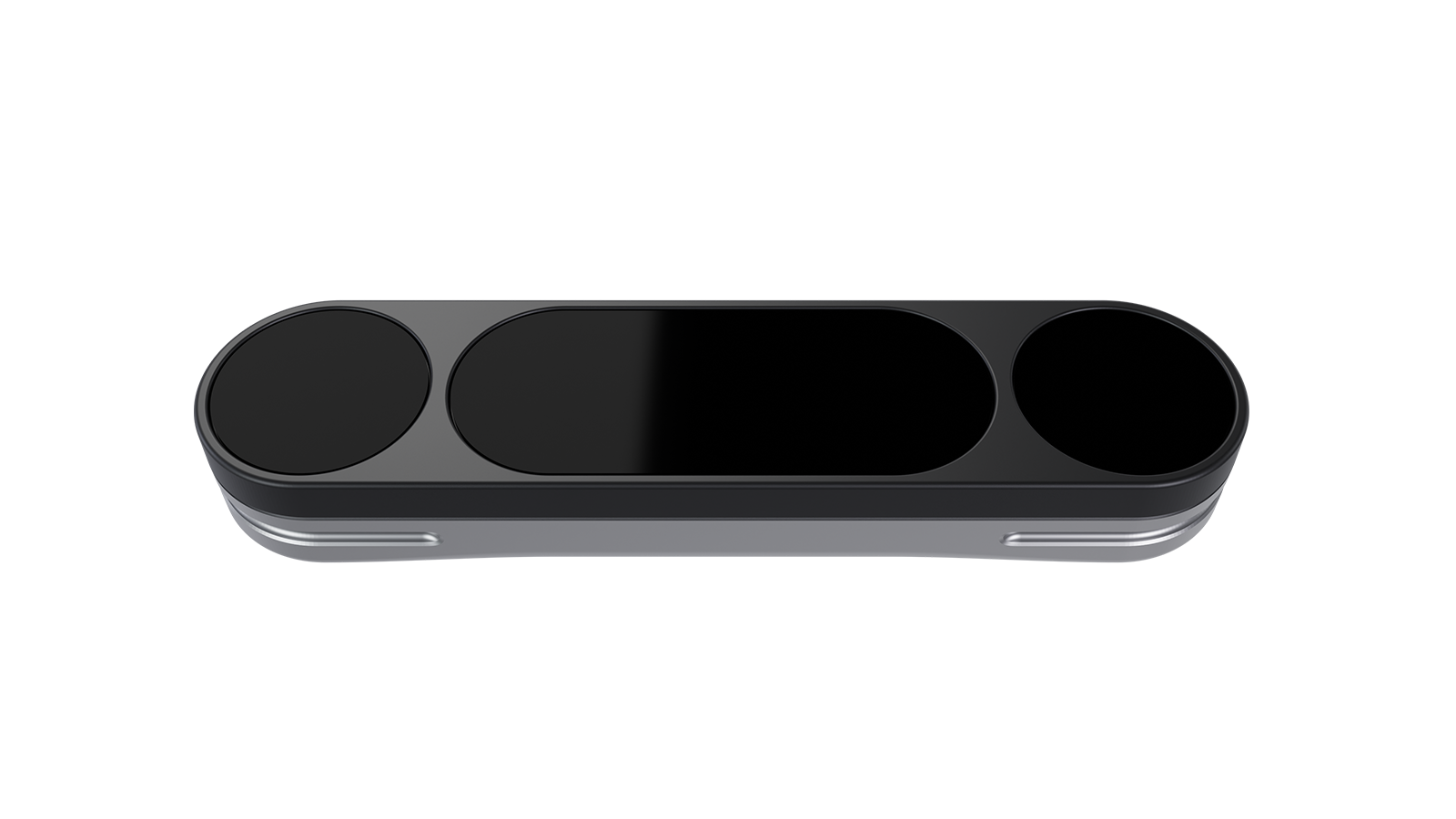

Ultraleap 3Di

The Ultraleap 3Di is a ruggedized hand tracking camera designed for connection to public interactive screens. DOOH, retail, museums, theme parks, and more.

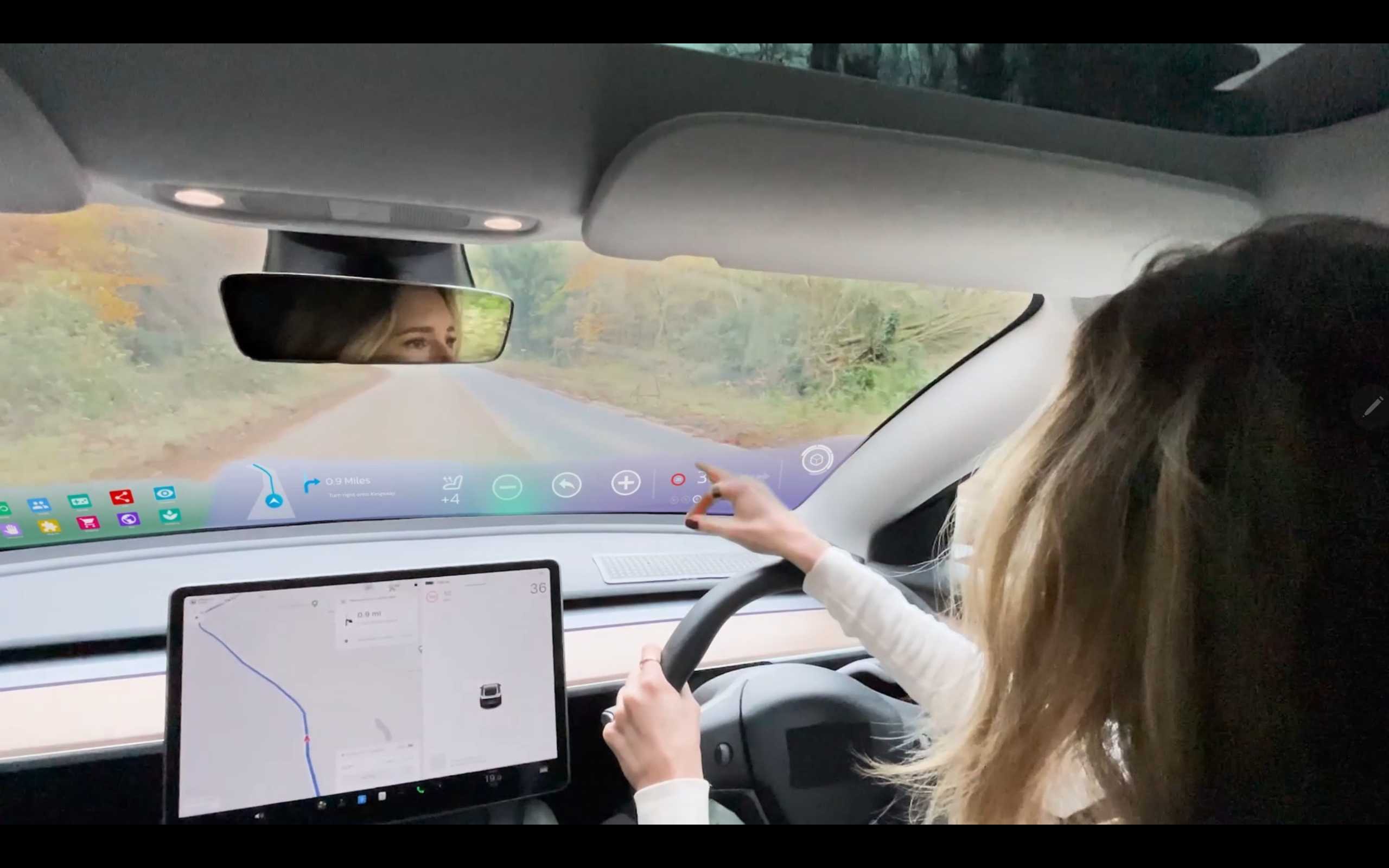

XR HARDWARE, SOFTWARE & DEVELOPER TOOLING

Reach beyond controllers

To fully realize its potential, XR needs to remove the last barrier to entry: the user interface. Our advanced hand tracking solutions open up a bigger, more diverse community of users.

Making headlines.

See all our latest news, stories, and case studies.

TOUCHLESS INTERACTIVE KIOSKS

TouchFree: From easy retrofits to new touchless interfaces

Turn any existing touchscreen touchless with our TouchFree Application. Or, use TouchFree Tooling to integrate gesture control into applications as a native feature.

Be the first to know

Get the latest curated news on haptics, tracking, and next-generation interfaces – straight to your inbox.

By signing up, you are agreeing to our privacy policy.