Grasp the true potential of XR

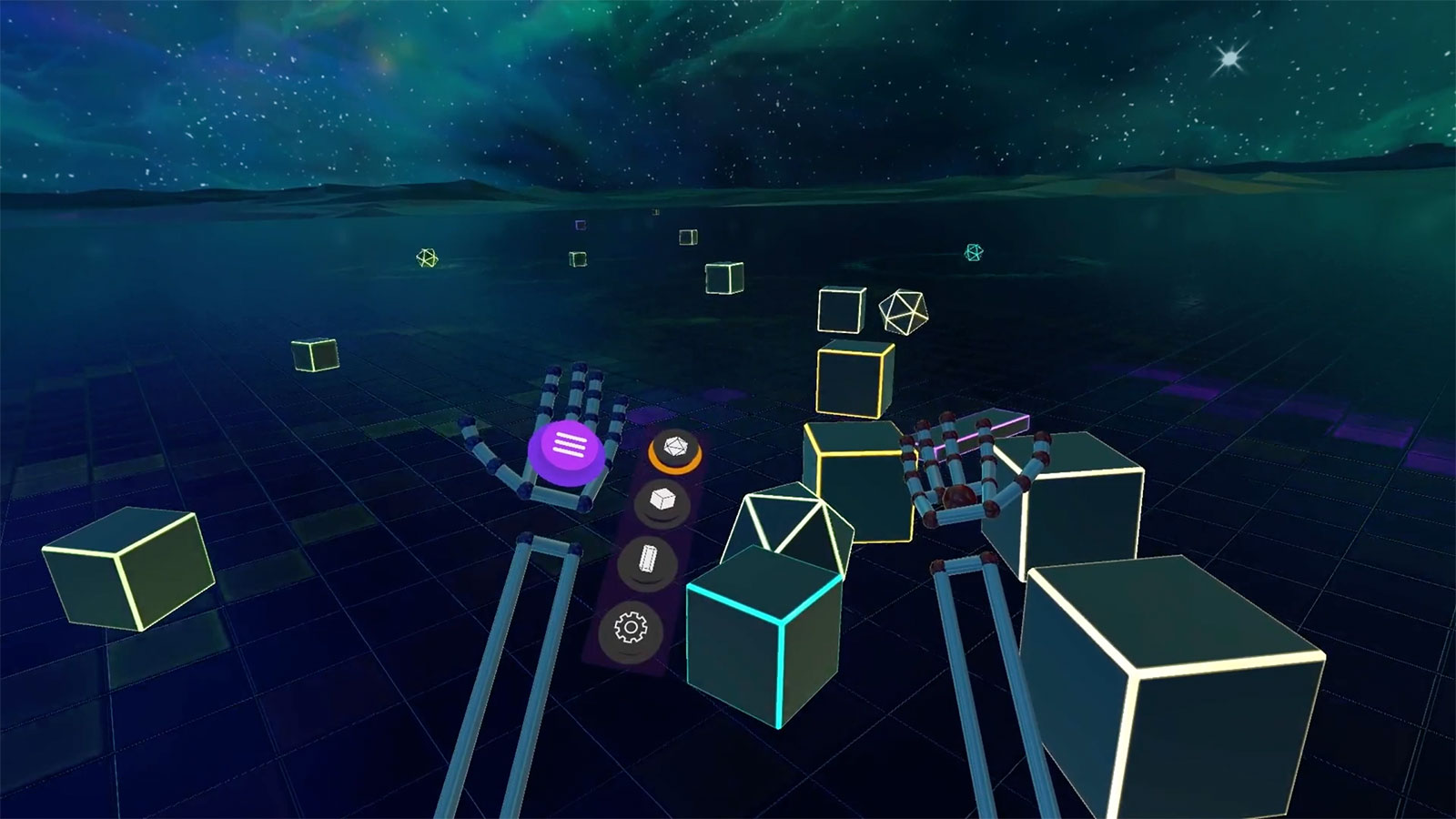

Ultraleap Gemini is the best hand tracking ever, giving users everywhere the power to reach out and make XR work for them.

OEMs

Most people have never tried VR, AR, or MR – hand tracking opens up a bigger, more diverse community of users.

Software Developers

Users and markets of all sorts are looking beyond controllers. Hand tracking is key to XR realizing its potential.

VR for Training

We learn better by doing – hand tracking empowers all staff to feel truly present in VR training, enhancing on-the-job learning.

VR Arcades

VR arcades attract casual users looking for instant excitement. Hand tracking makes even those new to immersive worlds feel at home.

Ultraleap Gemini

Superior pose accuracy

Virtual hands that match your real ones more closely than ever before.

Flexible for multiple platforms

Immerse seamlessly, whether you're developing for PC VR or all-in-one headsets.

Unity, Unreal, OpenXR integrations

Whatever your platform, the future of interaction is just a fingertip away.

Hardware

Our purpose-built infrared cameras push the boundaries of what can be experienced in XR.

Stereo IR 170

Our widest field of view and longest tracking range. Suitable for evaluation and integration.

Qualcomm® Snapdragon™ XR2

Ultraleap hand tracking is pre-integrated and optimized on the XR2 reference design.

Product Catalogue

Explore our range of hand tracking solutions to help you realize the potential of XR, including hardware, software, developer tooling options, and design guidelines.

Tracking Technical Introduction

Learn about the fundamentals of how Ultraleap’s world-leading hand tracking works.

Developers

Essential software and tools for harnessing hand tracking, and our lively developer community.

XR Design Guidelines

Reach beyond the limits of controllers with our user experience design best practices.

“It is to cut a long story short, perfect... Simply put, there has never been hand tracking of this quality...”

“Ultraleap has become a leader in hand tracking, including solutions for PCs and standalone VR headsets.”

“…the solidity of Gemini (V5) is pretty astounding…”

Speak to one of our experts

Find out more about how the world's best hand tracking can transform your projects.

Contact Us