Last time, we looked at how an interactive VR sculpture could be created with the Leap Motion Graphic Renderer as part of an experiment in interaction design. With the sculpture’s shapes rendering, we can now craft and code the layout and control of this 3D shape pool and the reactive behaviors of the individual objects.

By adding the Interaction Engine to our scene and InteractionBehavior components to each object, we have the basis for grasping, touching and other interactions. But for our VR sculpture, we can also use the Interaction Engine’s robust and performant awareness of hand proximity. With this foundation, we can experiment quickly with different reactions to hand presence, pinching, and touching specific objects. Let’s dive in!

Getting Started with the Interaction Engine

To get started, we create two main scripts for the sculpture:

- SculptureLayout: One instance of this script in the scene acts as the sculpture manager, controlling the sculpture and exposing parameters to the user to create variations at runtime. It handles enabling and disabling objects as needed, as well as sending parameter changes to the group via events.

- SculptureInteraction: On each object, this script receives messages from the SculptureLayout script and communicates with its LeapMeshGraphic and its Interaction Engine InteractionBehavior components. Using the callbacks provided by the Interaction Engine gives us a path towards our reactivity.

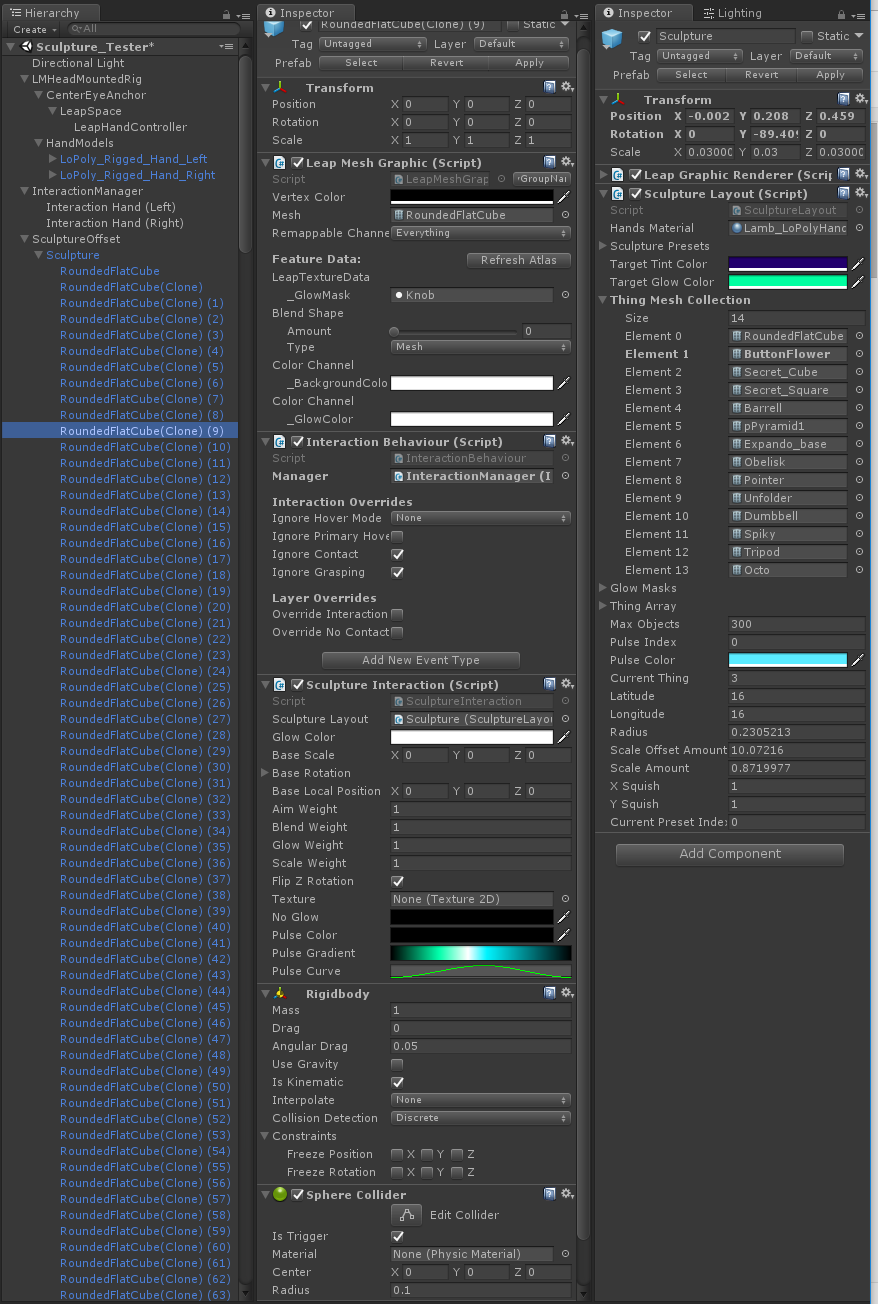

In the Unity scene hierarchy, each sculpture object needs to have a collection of components. Besides the LeapMeshGraphic, the InteractionBehavior component makes an object interactable with hands. In turn, it requires the presence of a Unity physics Rigidbody and a Collider component. Finally, each object receives our SculptureInteraction behavior script. This collection of components allows us to craft a reasonably sophisticated dynamic behavior for each object.

Left column: Scene Hierarchy. Middle column: Sculpture object setup. Right column: Sculpture parent setup

The sculpture’s parent transform holds the Graphic Renderer component and the SculptureLayout script. The sculpture’s object pool is parented to this transform, since Graphic Renderer objects must be parented under a Graphic Renderer component. For Leap Motion rigged hand models, hand tracking, and Interaction Engine setup, it’s quick and easy to begin with one of the scene examples from the Interaction Engine module folder.

The Interaction Engine setup includes an InteractionManager component and InteractionHand assets. The InteractionHands are invisible hands that are constructed at runtime from colliders, rigidbodies, joints, and Leap ContactBone scripts. The InteractionManager provides awareness of interactive objects in our scene that have InteractionBehaviors attached.

Several of the example scenes include simple slider and button widget examples. These can be used to quickly experiment with parameters as they are exposed in code. The widgets have events that are exposed with standard Unity event Inspector UIs. This means you can wire the sliders up to methods and parameters in your scripts without any further coding! Then, when it’s time for the UI work, they also provide ready examples of how the widget hierarchies and components are set up.

VR Sculpture Layout and Control

To perform one of its main purposes, the SculptureLayout script’s ResetLayout() method takes in a number of user-modified parameters – particularly latitude and longitude counts – and builds the sculpture from the object pool. Objects are enabled and disabled as needed (again by adding or removing from the render group) depending on these counts. Its simple layout algorithm loops through the object pool and assigns each object a position based on one of a collection of layout functions.

The initial function was a spherical layout function. Tinkering with this function easily led to variants that form a bell, a twisting sheet, a mirrored funnel, and others.
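To make the idea of a layout function concrete, here is a minimal sketch of a latitude-longitude sphere layout. It is written in plain Python rather than Unity C# so that it stays self-contained, and the function name, the `radius` parameter, and the half-step offset at the poles are our own assumptions rather than the sculpture’s actual code.

```python
import math

def sphere_layout(lat_count, lon_count, radius=1.0):
    """Return one (x, y, z) position per object on a latitude-longitude grid.

    Hypothetical sketch: the names and the pole offset are assumptions.
    """
    positions = []
    for i in range(lat_count):
        # Polar angle, offset by half a step so no ring collapses onto a pole.
        theta = math.pi * (i + 0.5) / lat_count
        for j in range(lon_count):
            # Azimuth angle around the vertical (y) axis.
            phi = 2.0 * math.pi * j / lon_count
            x = radius * math.sin(theta) * math.cos(phi)
            y = radius * math.cos(theta)
            z = radius * math.sin(theta) * math.sin(phi)
            positions.append((x, y, z))
    return positions
```

Swapping the three coordinate expressions for other functions of `theta` and `phi` is the kind of tinkering that could produce the variant shapes, with the loop over the pool left unchanged.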

The other purpose of the SculptureLayout script is to expose controls to the user so they can customize the sculpture at runtime. These are simply constructed as public parameters that trigger Unity events with their setter methods. This way it becomes easy to create UIs that only need to change these parameters for the sculpture to update. The SculptureInteraction scripts on the objects subscribe to these events when they are enabled and unsubscribe when they are disabled. They in turn handle these parameter changes only when they occur.
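The setter-plus-event pattern described here can be sketched as follows. This is an engine-agnostic Python illustration, not the Unity implementation: the class name `LayoutParams`, the `object_scale` parameter, and the subscribe and unsubscribe helpers are all hypothetical stand-ins for Unity events.

```python
class LayoutParams:
    """Hypothetical manager exposing one parameter whose setter fires an event."""

    def __init__(self, object_scale=1.0):
        self._object_scale = object_scale
        self._scale_listeners = []

    def subscribe_scale(self, callback):
        # Called by an object when it is enabled.
        self._scale_listeners.append(callback)

    def unsubscribe_scale(self, callback):
        # Called by an object when it is disabled.
        self._scale_listeners.remove(callback)

    @property
    def object_scale(self):
        return self._object_scale

    @object_scale.setter
    def object_scale(self, value):
        if value == self._object_scale:
            return  # No change, so no event and no work for the listeners.
        self._object_scale = value
        # Iterate over a copy so listeners may unsubscribe while being notified.
        for callback in list(self._scale_listeners):
            callback(value)
```

A UI slider then only has to assign `params.object_scale = 1.5`, and only the currently subscribed objects react.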

The SculptureLayout script includes parameters and events for updating a set of the sculpture’s features:

- Latitude-longitude totals, which define how many objects are in the horizontal and vertical rows of the sphere and in the rows and columns of the other layouts

- Scale of individual objects

- Layout function

- Shape mesh

- Distortion, which applies a scale modifier based on the object’s height within the sculpture

- Hover scale, which defines the size to which the objects will scale when a hand approaches

- Hover aim weight, which defines how far the objects will rotate toward a hand when it approaches

- Object base color

- Object glow color

This set of features provides enough combinations to have fun playing with the sculpture at length without exhausting its possibilities. The SculptureLayout script then has methods for saving and loading specific settings for this list of parameters. This collection of presets can be created to instantly transform the sculpture and show interesting configurations.
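As a rough illustration of saving and loading presets, the sketch below round-trips a settings object through JSON. The field names, default values, and the choice of JSON are assumptions made for this example; the article does not say how the sculpture stores its presets.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class SculpturePreset:
    # Hypothetical fields mirroring the parameter list above.
    lat_count: int = 12
    lon_count: int = 24
    object_scale: float = 1.0
    layout: str = "sphere"
    hover_scale: float = 1.5
    hover_aim_weight: float = 0.5
    base_color: tuple = (1.0, 1.0, 1.0)
    glow_color: tuple = (0.0, 0.5, 1.0)

def save_preset(preset):
    """Serialize a preset to a JSON string."""
    return json.dumps(asdict(preset))

def load_preset(text):
    """Rebuild a preset from JSON, turning color lists back into tuples."""
    data = json.loads(text)
    data["base_color"] = tuple(data["base_color"])
    data["glow_color"] = tuple(data["glow_color"])
    return SculpturePreset(**data)
```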

SculptureInteraction: Using Interaction Engine Callbacks

Crafting the SculptureInteraction behavior class starts with using the callbacks exposed by the Interaction Engine and other methods from the Leap Motion Unity Modules’ bindings to the Leap Motion tracking data. The SculptureInteraction class keeps a reference to the specific InteractionBehavior and LeapMeshGraphic instances that live on its transform – allowing it to use information about the hand to control its visual features.

The Interaction Engine’s OnHover() callback is triggered when an InteractionHand comes within a globally set threshold of the specific InteractionBehavior. By subscribing to this callback, the SculptureInteraction class can run its behavior methods, consisting mostly of simple vector math, when a hand is near. First it calculates the distance from the palm to the object. Then it can use this distance to weight its other math functions:

- Aim the object’s rotation at the hovering hand

- Scale the object’s transform as the hand approaches

- Blend the weight of the LeapMeshGraphic’s _BlendShape value to animate the shape’s morph target

- Change the weight of the LeapMeshGraphic’s custom _GlowColor value to make the object glow
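The distance weighting behind these reactions can be sketched with a few lines of vector math. The example below is plain Python with hypothetical function names; the linear falloff and the simple normalized blend used for aiming are assumptions, and the real script may weight or interpolate differently.

```python
import math

def lerp(a, b, t):
    return a + (b - a) * t

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0.0 else v

def hover_weight(palm, obj, hover_radius):
    """1.0 when the palm is at the object, falling linearly to 0.0 at hover_radius."""
    distance = math.dist(palm, obj)
    return max(0.0, 1.0 - distance / hover_radius)

def hovered_scale(base_scale, hover_scale, weight):
    """Grow from the base scale toward the hover scale as the hand approaches."""
    return lerp(base_scale, hover_scale, weight)

def aimed_forward(forward, palm, obj, weight, aim_weight):
    """Blend the object's forward vector toward the direction of the palm."""
    to_hand = normalize(tuple(p - o for p, o in zip(palm, obj)))
    t = weight * aim_weight
    return normalize(tuple(lerp(f, h, t) for f, h in zip(forward, to_hand)))
```

The same `weight` value could equally drive the blend shape and glow values, since each is just another interpolation between a resting and a fully hovered state.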

Within the SculptureInteraction’s OnHover() handler method, we can use the Leap Motion C# API’s public attribute of PinchStrength to detect whether the hand is also pinching. Then a little more math causes the object to move towards the pinch point. This creates a “taffy pull” behavior, allowing the sculpture to be stretched apart.
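A minimal sketch of that “little more math” might look like this. Pinch strength is treated as a value between 0 and 1; the `threshold` and `pull_speed` parameters and the function name are assumptions for illustration only.

```python
def pinch_pull(obj_pos, pinch_point, pinch_strength, threshold=0.8, pull_speed=0.1):
    """Move an object a fraction of the way toward the pinch point each frame.

    Hypothetical sketch: threshold and pull_speed are illustrative values.
    """
    if pinch_strength < threshold:
        return obj_pos  # Not pinching firmly enough, so leave the object alone.
    return tuple(p + (q - p) * pull_speed for p, q in zip(obj_pos, pinch_point))
```

Called once per frame while the hand hovers, this drags nearby objects toward the fingertips a little at a time, which is what gives the stretch its elastic feel.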

For a chain reaction pulse behavior, another script is created – the PulsePoseDetector.cs script, which sits on the InteractionHand transform. It uses the API method hand.finger[x].isExtended() to see if the index finger and thumb are extended while the others are not. This becomes a simple pose detector that we can use in combination with the Interaction Engine’s Primary Hover callback.
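The pose check itself is a small boolean test. The sketch below takes the five finger states as a list ordered thumb, index, middle, ring, pinky, and also counts how many consecutive frames the pose has been held, so that a brief misdetection does not count. The class shape and the `hold_frames` default are assumptions, not the contents of PulsePoseDetector.cs.

```python
class PulsePoseDetector:
    """Hypothetical detector for 'thumb and index extended, other fingers curled'."""

    def __init__(self, hold_frames=10):
        self.hold_frames = hold_frames
        self._held = 0

    def update(self, extended):
        """Feed one frame of finger states; return True once the pose is held.

        extended: five booleans ordered thumb, index, middle, ring, pinky.
        """
        thumb, index = extended[0], extended[1]
        pose = thumb and index and not any(extended[2:])
        # Count consecutive frames in the pose; any other frame resets the count.
        self._held = self._held + 1 if pose else 0
        return self._held >= self.hold_frames
```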

By first checking to see if our pose has been detected and held for some number of frames, we’ll know if the hand is ready. Then, using the Interaction Engine’s OnContactBegin() callback combined with PrimaryHover, we can call the public NewPulse() method in the touched object’s SculptureInteraction class when the posed index finger touches an object. This calls a Propagate method, which checks for its neighbors and in turn triggers their pulse propagation methods for our chain reaction.
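The chain reaction can be pictured as a breadth-first walk over the latitude-longitude grid. In this sketch each object is identified by its (lat, lon) index, neighbors wrap around in longitude, and a dictionary of visited cells keeps the pulse from bouncing back. The grid-neighbor rule and the function name are assumptions; the article does not specify how neighbors are found.

```python
from collections import deque

def propagate_pulse(start, lat_count, lon_count):
    """Return {cell: hops} giving how many hops each cell is from the touched one."""
    steps = {start: 0}
    queue = deque([start])
    while queue:
        lat, lon = queue.popleft()
        neighbors = [
            (lat - 1, lon),
            (lat + 1, lon),
            (lat, (lon - 1) % lon_count),  # Longitude wraps around the sculpture.
            (lat, (lon + 1) % lon_count),
        ]
        for cell in neighbors:
            # Skip rows past the top or bottom and cells already pulsed.
            if 0 <= cell[0] < lat_count and cell not in steps:
                steps[cell] = steps[(lat, lon)] + 1
                queue.append(cell)
    return steps
```

The hop count can then be used as a delay, so that each ring of neighbors starts its pulse slightly after the one before it.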

The pulse itself consists of a coroutine which lerps the object’s color through a gradient that is stored in the SculptureInteraction class. This gives us control over the pulse color and also lets that color change over the pulse’s lifespan.
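As a sketch of the idea, the Python generator below stands in for the coroutine: it yields one color per frame, sampled from a gradient by the fraction of the pulse’s lifespan that has elapsed. The gradient representation (a sorted list of position and color stops) and all names are assumptions made for this example.

```python
def sample_gradient(stops, t):
    """Linearly interpolate a color from stops: [(position, (r, g, b)), ...].

    Positions are assumed to be sorted and to lie in the range 0 to 1.
    """
    t = min(max(t, 0.0), 1.0)
    if t <= stops[0][0]:
        return stops[0][1]
    for (p0, c0), (p1, c1) in zip(stops, stops[1:]):
        if t <= p1:
            u = (t - p0) / (p1 - p0)
            return tuple(a + (b - a) * u for a, b in zip(c0, c1))
    return stops[-1][1]

def pulse_colors(stops, duration, frame_dt):
    """Yield one color per frame over the pulse's lifespan, ending on the last stop."""
    elapsed = 0.0
    while elapsed < duration:
        yield sample_gradient(stops, elapsed / duration)
        elapsed += frame_dt
    yield sample_gradient(stops, 1.0)
```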

The Stress Test and Optimization Loop

As noted in our previous post, this sculpture is deliberately meant to find and exceed the limits of the Graphic Renderer and the Interaction Engine. This helps us zero in on performance bottlenecks and bugs, and to highlight possible use cases. Ramping up the number of visible, active shapes in the sculpture and running Unity’s Profiler provides a lot of rich information on which parts of the code are taking the most time per frame and whether we’re seeing garbage collection.

Additionally, we can begin to identify best practices for using the tools and ways to optimize the project-specific code of the sculpture. There are of course endless other optimizations for any VR project – whether desktop or mobile VR – but here are a few sculpture-specific optimizations to illustrate some typical performance tuning.

For example, we checked “Ignore Grasping” for each of our individual InteractionBehaviors. Since we’re not grabbing the shapes in the sculpture, we can turn off the calculations for grab detection in all the shapes. Likewise, while the math that runs in each SculptureInteraction script is computationally trivial for a single shape, we sometimes have hundreds of these shapes being hovered at once, so it can still add up to significant calculation time per frame. So in the SculptureInteraction script we optimized by calculating the reactions to the hand only every fourth frame. These results are then fed into continuous lerps (linear interpolations) for the position and rotation of the object. The change to the behavior of the shapes as your hand approaches is nearly imperceptible, and this provides yet another small performance optimization.
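The every-fourth-frame idea can be sketched like this: the expensive target is recomputed only on some frames, while a cheap interpolation toward the latest target runs every frame. The class name, the single scalar value, and the `smoothing` factor are assumptions; the sculpture applies the same approach to position and rotation.

```python
class ThrottledHover:
    """Hypothetical helper: recompute the target rarely, ease toward it every frame."""

    def __init__(self, interval=4, smoothing=0.25):
        self.interval = interval
        self.smoothing = smoothing
        self.target = 0.0
        self.current = 0.0
        self.evaluations = 0

    def update(self, frame, compute_target):
        # Only run the reaction math on every interval-th frame.
        if frame % self.interval == 0:
            self.target = compute_target()
            self.evaluations += 1
        # The lerp toward the most recent target still runs every frame,
        # which hides the lower update rate of the target itself.
        self.current += (self.target - self.current) * self.smoothing
        return self.current
```

Over 16 frames the reaction math runs four times instead of sixteen, while the displayed value still moves smoothly on every frame.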

With these behavior examples, we’ve illustrated how a combination of the Leap Motion tracking API and our Unity Modules can enable many forms of interaction explorations with a few lines of code for each. For the next part of the VR sculpture project, we can begin exercising the Interaction Engine’s reactive widgets. At this point, many of the sculpture’s parameters are exposed in code, so they can be easily wired up to be controlled by Interaction Engine UIs. We’ll dive into creating these UIs to make a UX playground in the next post in our series.