Creating a sense of space is one of the most powerful tools in a VR developer’s arsenal. In our Exploration on World Design, we looked at how to create moods and experiences through imaginary environments. In this Exploration, we’ll cover some key spatial relationships in VR, and how you can build on human expectations to create a sense of depth and distance.


Controller Position and Rotation

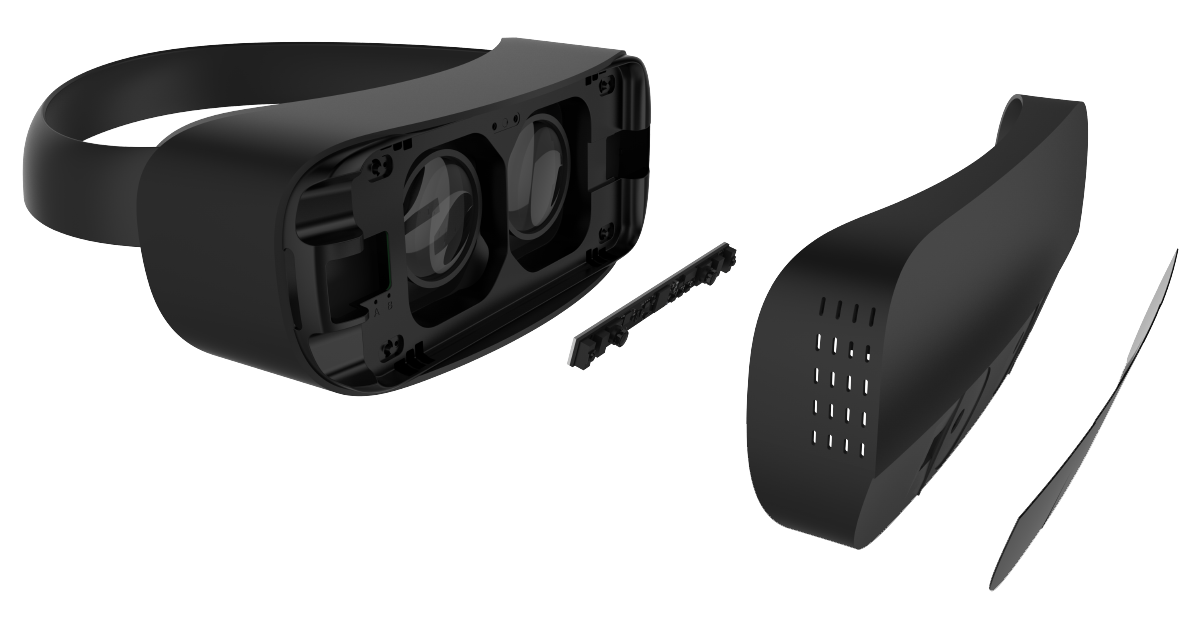

To bring Leap Motion tracking into a VR experience, you’ll need a virtual controller within the scene attached to your VR headset. Our Unity Core Assets and the Leap Motion Unreal Engine 4 plugin both handle position and scale out-of-the-box for the Oculus Rift and HTC Vive.

For a virtual reality project, the virtual controller should be placed between the “eyes” of the user within the scene, which are represented by a pair of cameras. From there, apply a further forward offset along the Z axis to compensate for the fact that the controller is mounted on the outside of the headset, in front of the user’s eyes.

This offset depends on the headset design: for the Oculus Rift it is 80 mm, while on the HTC Vive it’s closer to 90 mm. Our Mobile VR Platform is designed to be embedded directly within headsets, so the sensor can sit even closer to the user’s eyes.
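
The Unity Core Assets and the UE4 plugin already do this for you. The plain-Python sketch below only illustrates the geometry described above. It places the controller at the midpoint of the two eye cameras and then pushes it forward along the head’s forward axis by the headset-specific offset (80 mm or 90 mm, as stated above). It assumes a Y-up, Z-forward coordinate system like Unity’s. `Vec3`, `HEADSET_Z_OFFSET_M` and `controller_position` are hypothetical names, not part of either SDK. Assuming the controller is rigidly mounted facing forward, its rotation simply follows the head’s, so only position is computed.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scaled(self, s: float) -> "Vec3":
        return Vec3(self.x * s, self.y * s, self.z * s)

    def normalized(self) -> "Vec3":
        length = math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)
        return self.scaled(1.0 / length)


# Hypothetical lookup table; the values are the forward (Z) offsets from the text, in metres.
HEADSET_Z_OFFSET_M = {"oculus_rift": 0.080, "htc_vive": 0.090}


def controller_position(left_eye: Vec3, right_eye: Vec3, head_forward: Vec3, headset: str) -> Vec3:
    """Place the virtual controller midway between the eye cameras, then push it forward
    along the head's forward (local Z) axis by the headset's mounting offset."""
    between_eyes = (left_eye + right_eye).scaled(0.5)
    return between_eyes + head_forward.normalized().scaled(HEADSET_Z_OFFSET_M[headset])


# Eyes 64 mm apart at a height of 1.6 m, user looking down world +Z.
pos = controller_position(Vec3(-0.032, 1.6, 0.0), Vec3(0.032, 1.6, 0.0),
                          Vec3(0.0, 0.0, 1.0), "oculus_rift")
```

In an engine you would normally get the same result by parenting the controller object to the camera rig with a fixed local Z offset, rather than recomputing it by hand every frame.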

Depth Cues

Whether on a standard monitor or in a VR headset, the depth of nearby objects can be difficult to judge. In the real world, your eyes dynamically adjust to the depth of nearby objects, flexing and reshaping their lenses depending on how near or far the object is in space. With headsets like the Oculus Rift and HTC Vive, the user’s eye lenses stay focused at a single fixed distance set by the headset’s optics, no matter how near or far a virtual object appears, so this cue is lost.

To create and reinforce a sense of depth, you can use the same 3D cinematic tricks as filmmakers:

- objects in the distance lose contrast

- distant objects appear fuzzy and blue/gray (or transparent)

- nearby objects appear sharp, in full color and contrast

- the hand casts shadows onto objects, especially drop shadows

- the hand reflects nearby objects

- sound reinforces a sense of depth
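
The first two cues (loss of contrast, and a blue/gray tint with distance) are often approximated with exponential fog. In exponential fog a surface color is blended toward a haze color by a factor that decays with distance. The sketch below assumes linear RGB values in [0, 1]. The haze color, the fog density and the crude `contrast` measure are hypothetical illustration values, not recommendations. It does not attempt blur, shadows, reflections or sound.

```python
import math
from typing import Tuple

Color = Tuple[float, float, float]  # linear RGB, each channel in [0, 1]

# Hypothetical values chosen for illustration only.
HAZE_COLOR: Color = (0.60, 0.65, 0.75)  # blue-gray
FOG_DENSITY_PER_M = 0.05


def fog_factor(distance_m: float, density: float = FOG_DENSITY_PER_M) -> float:
    """Exponential fog: 1.0 keeps the surface color fully, 0.0 is pure haze."""
    return math.exp(-density * max(distance_m, 0.0))


def aerial_perspective(color: Color, distance_m: float,
                       density: float = FOG_DENSITY_PER_M, haze: Color = HAZE_COLOR) -> Color:
    """Blend a surface color toward the haze color as distance grows."""
    f = fog_factor(distance_m, density)
    r, g, b = (f * c + (1.0 - f) * h for c, h in zip(color, haze))
    return (r, g, b)


def contrast(a: Color, b: Color) -> float:
    """Crude contrast measure: mean absolute channel difference between two colors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / 3.0


WHITE: Color = (1.0, 1.0, 1.0)
BLACK: Color = (0.0, 0.0, 0.0)
near_contrast = contrast(aerial_perspective(WHITE, 1.0), aerial_perspective(BLACK, 1.0))
far_contrast = contrast(aerial_perspective(WHITE, 40.0), aerial_perspective(BLACK, 40.0))
```

Both colors drift toward the same haze, so their difference shrinks by the fog factor. That is why distant objects lose contrast and tint toward the haze color at the same time, while nearby objects keep their full color.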

Rendering Distance

While the distance at which objects should be rendered for extended viewing depends on the optics of the VR headset being used, it typically lies well beyond comfortable reaching distance. Elements that don’t require extended viewing, like quick menus, can be rendered closer without causing eyestrain. You can also experiment with making interactive objects appear within reach, or respond to reaching at a distance (e.g., see Projective Interaction Mode in the User Interface Design Exploration).

Virtual Safety Goggles

As human beings, we’ve evolved very strong fear responses to protect ourselves from objects flying at our eyes. Along with rendering interactive objects no closer than the minimum recommended distance, you should ensure that objects never get too close to the viewer’s eyes. In effect, this creates an invisible shield around the user’s head that pushes all movable objects away.

Multiple Frames Of Reference

Within any game engine, interactive elements are typically stationary with respect to some frame of reference. Depending on the context, you have many different options:

World frame of reference. The object is stationary in the world. This is often the best way to position objects, because they exist independently of where you’re viewing them from or which direction you’re looking. This also makes an object’s physical interactions, including its velocity and acceleration, much easier to compute.

Body frame of reference. The object moves with the user, but does not follow head or hands. It’s important to note that head tracking moves the user’s head, but not the user’s virtual body. For this reason, an object mapped to the body frame of reference will not follow the head movement, and may disappear from the field of view when the user turns around.

Head frame of reference. The object keeps its position in the user’s field of view, like a classic HUD.

Hand frame of reference. The object is held in the user’s hand.

Keep these frames of reference in mind when planning how the scene, different interactive elements, and the user’s hands all relate to each other.
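
Each frame of reference amounts to choosing which pose an object’s local offset is attached to. The sketch below resolves a local offset to a world position for each of the four frames. It also reproduces the pitfall described for the body frame: head tracking rotates the head pose but not the body pose. For brevity it assumes yaw-only rotation about a vertical Y axis. `Frame`, `Pose` and `world_position` are hypothetical names for illustration; an engine would express the same idea by parenting the object to the corresponding transform.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


class Frame(Enum):
    WORLD = "world"
    BODY = "body"
    HEAD = "head"
    HAND = "hand"


@dataclass(frozen=True)
class Pose:
    """Position plus yaw about the vertical (Y) axis; pitch and roll omitted for brevity."""
    position: Vec3
    yaw_deg: float = 0.0

    def to_world(self, local: Vec3) -> Vec3:
        # Rotate the local offset by the yaw, then translate by the pose position.
        a = math.radians(self.yaw_deg)
        x, y, z = local
        rx = x * math.cos(a) + z * math.sin(a)
        rz = -x * math.sin(a) + z * math.cos(a)
        px, py, pz = self.position
        return (px + rx, py + y, pz + rz)


WORLD_ORIGIN = Pose((0.0, 0.0, 0.0))


def world_position(local: Vec3, frame: Frame, tracked: Dict[Frame, Pose]) -> Vec3:
    """Resolve an object's local offset in `frame` to world space.

    `tracked` holds the current BODY, HEAD and HAND poses for this update.
    """
    parent = WORLD_ORIGIN if frame is Frame.WORLD else tracked[frame]
    return parent.to_world(local)


# The user has turned their head 180 degrees; head tracking does not rotate the body.
poses = {
    Frame.BODY: Pose((0.0, 1.0, 0.0), yaw_deg=0.0),
    Frame.HEAD: Pose((0.0, 1.6, 0.0), yaw_deg=180.0),
    Frame.HAND: Pose((0.3, 1.2, 0.4), yaw_deg=0.0),
}
hud = world_position((0.0, 0.0, 1.0), Frame.HEAD, poses)        # follows the head: now at z = -1
belt_menu = world_position((0.0, 0.0, 0.5), Frame.BODY, poses)  # ignores head yaw: still at z = +0.5, behind the user
held = world_position((0.0, 0.0, 0.0), Frame.HAND, poses)       # sits exactly at the hand
```

The HUD stays in view after the turn, while the body-anchored menu is now behind the user. This is the disappearing-object case described for the body frame of reference.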

Parallax, Lighting, and Texture

Real-world perceptual cues are always useful in helping users orient themselves and navigate their environment. Lighting, shadows, texture, parallax (the way objects appear to move relative to each other when the user moves), and other visual features are crucial in conveying depth and space.
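
With head tracking driving the cameras, parallax comes for free. A quick calculation still shows why it is such a strong depth cue. When the head moves sideways, an object straight ahead appears to swing through an angle of about atan(head shift / distance). Nearby objects therefore slide much further than distant ones. The function names and the 10 cm lean below are hypothetical illustration choices.

```python
import math


def parallax_shift_deg(head_shift_m: float, distance_m: float) -> float:
    """Angle (degrees) through which an object straight ahead at distance_m appears to move
    when the viewer's head translates sideways by head_shift_m."""
    return math.degrees(math.atan2(head_shift_m, distance_m))


def relative_parallax_deg(head_shift_m: float, near_m: float, far_m: float) -> float:
    """How far a near object appears to slide against a far one for the same head movement."""
    return parallax_shift_deg(head_shift_m, near_m) - parallax_shift_deg(head_shift_m, far_m)


# A 10 cm sideways lean: nearby objects sweep through much larger angles than distant ones.
for d in (0.5, 2.0, 10.0, 100.0):
    print(f"object at {d:>5.1f} m shifts {parallax_shift_deg(0.10, d):5.2f} degrees")
```

The relative shift between a near and a far object is what the user perceives as one moving "in relation to" the other. This is why scenes with objects at several depths read as more three-dimensional than a single distant backdrop.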

Of course, you also have to balance this against performance! Always experiment with less resource-intensive solutions, and be willing to sacrifice elements that you love if it proves necessary. This is especially true if you’re aiming for a mobile VR release down the road.


So far we’ve focused a lot on the worlds that our users will inhabit and how they relate to each other. But what about how they move through those worlds? Next week: Locomotion.