When virtual reality and musical interface design collide, entire universes can be musical instruments. Created by artists Rob Hamilton and Chris Platz for the Stanford Laptop Orchestra, Carillon is a networked VR instrument that brings you inside a massive virtual bell tower. By reaching into the inner workings and playing with the gears, you can create hauntingly beautiful, complex music.

The Orchestra recently performed Carillon live onstage at Stanford’s Bing Concert Hall with a multiplayer build of the experience. Now the demo is available on Windows for everyone to try (after a brief setup process).

This week, we caught up with Rob and Chris to talk about the inspiration behind Carillon and the bleeding edge of digital music.

What’s the idea behind the Stanford Laptop Orchestra?

Rob: The Stanford Laptop Orchestra (SLOrk) is really two things in one. On the one hand, it’s a live performance ensemble that uses technology to explore new ways to create and perform music. On the other, it’s a course taught every Spring quarter at Stanford’s Center for Computer Research in Music and Acoustics (CCRMA, pronounced “karma”) by Dr. Ge Wang.

In order for students to think about how to create pieces of music and pieces of software, we enable groups to get together and make music as an ensemble. There’s a lot to learn – you have to learn how to compose, orchestrate music for an ensemble, interaction design, etc. Students in the class make up the ensemble and in the span of 9-10 weeks learn how to program, compose music and build innovative interactive performance works.

Sonically, one of the chief ideas behind SLOrk is to move away from a “stereo” mindset for electronic and electroacoustic music performance – where sound is fed to a set of speakers flanking the stage – and instead to embrace a distributed sound experience, where each performer’s sound comes from their own physical location. Just like in a traditional orchestra, where sound emanates from each performer’s instrument, SLOrk performers play sound from custom-built 6-channel hemispherical speakers that sit at their feet.

What does VR add to the musician’s repertoire?

Rob: Computer interfaces for creating and performing music have come a long way from early technologies like Max Mathews’ GROOVE system. Touchscreens, accelerometers, and a bevy of easy-to-use external controllers have given performers many options in how to control and shape sound and music. The addition of VR to that arsenal has been extremely eye-opening, as the ability to build virtual instruments and experiences that leverage depth and space – literally allowing musicians to move inside a virtual instrument and interact with it while retaining a sense of place – plays off of musicians’ learned abilities to coax sound out of objects.

Using technology like the Leap Motion Controller allows us to use our hands to directly engage virtual instruments, from the plucking of a virtual string to the casting of a sonic fireball. The impossibilities of VR are extremely exciting as anything in virtual space can be controlled by the performer and used to create sound and music.

In some ways, though, this is an ongoing trend. The people who created instruments were always trying to create something new. Instruments like the saxophone didn’t exist throughout all time – someone had to create them. They came up with these new ways to control sound. The whole idea behind our department is that we want to put technology in the hands of musicians and show composers how they can use technology to further the science and artistry of music.

Where will the next “saxophone” come from?

Rob: I think the next saxophone could exist within VR. One thing that’s really exciting about VR is that it takes us from engaging technology on a flat plane and brings it into three dimensions in a way that we as humans are very used to. We’re used to interacting with objects, we’re used to using our hands, we’re used to looking at objects from different angles.

As soon as you strap on a pair of VR goggles and put your hands into the scene, all of a sudden things change. You really feel a strong connection with these virtual objects, which our brains know don’t really exist, but they definitely exist in this shared mental space – in the matrix, or Metaverse from Snow Crash. The more we bring those closer to the paradigms that we humans are used to in the real world, the more real these objects feel to us.

How do the different parts of the Carillon fit together?

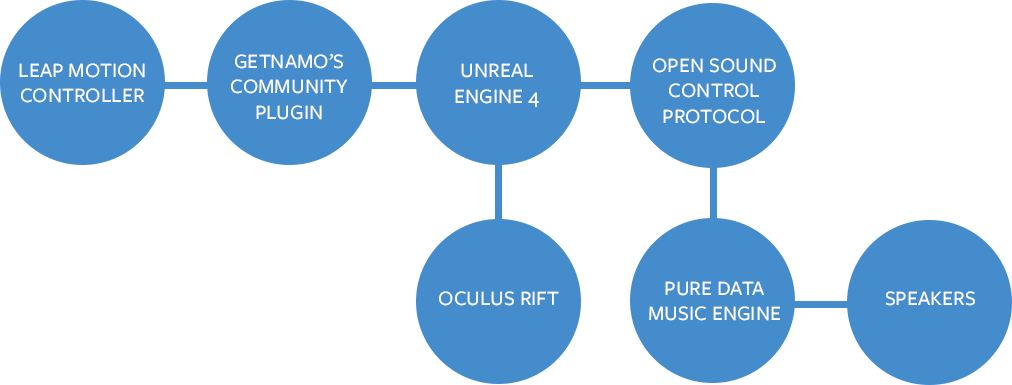

Rob: Carillon was built within the Unreal Engine and uses the Open Sound Control protocol to connect motion and actions in the game environment with a music engine built within the Pure Data computer music system. Carillon was really built for VR: the Oculus Rift and the Leap Motion Controller are key components in the work. The Leap Motion integration to Unreal uses getnamo’s most excellent event-driven plugin to expose the Leap Motion API to Unreal Blueprint and slave each performer’s avatar arms and hands to the controller. All the programming for Carillon was done using Unreal’s Blueprint visual scripting language. The environment is built to be performed across a network, with multiple performers all controlling aspects of the same Carillon.
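To make the Open Sound Control link concrete, here is a minimal Python sketch of the kind of message a game environment could send to a music engine. It is not the Carillon implementation (which was built in Blueprint); the address pattern `/carillon/ring/<n>/speed`, the host, and the port are hypothetical names chosen for illustration. The encoding follows the OSC 1.0 layout: a padded address string, a padded type-tag string, then big-endian 32-bit floats.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    padding = (4 - len(raw) % 4) % 4
    return raw + b"\x00" * padding


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + payload


def send_ring_speed(sock, host, port, ring, x, y, z):
    """Send one ring's rotation speed on three axes as a single UDP datagram.

    The address pattern is an assumption for this sketch, not Carillon's real one.
    """
    packet = osc_message(f"/carillon/ring/{ring}/speed", x, y, z)
    sock.sendto(packet, (host, port))
    return packet


if __name__ == "__main__":
    # Hypothetical target: a music engine listening for OSC on localhost:9000.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as udp:
        send_ring_speed(udp, "127.0.0.1", 9000, ring=1, x=0.5, y=0.0, z=-1.25)
```

Because OSC usually travels over UDP, each performer's machine could send updates like this every frame without waiting for a reply, which suits a live, networked setup.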

The core interaction in Carillon is the control of a set of spinning gears at the center of the Carillon itself. By interacting with a set of gears floating in their rendered HUD – grabbing, swiping, etc. – performers speed up, slow down, and rotate each set of rings in three dimensions. The speed and motion of the gears are used to drive musical sounds in Pure Data, turning the virtual physical interactions made by the performers into musical gestures. Each performer generates sound from their own machine, and in concert with the SLOrk, that sound is sent to a six-channel hemispherical speaker sitting at their feet. Additional sounds made by the Carillon, including bells and machine-like sounds, are also sent to SLOrk speakers.
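One way to picture how gear speed can drive sound is a simple mapping from rotation speed to pitch. The sketch below is purely illustrative: the interview does not describe the actual mapping used in the Pure Data patch, so the speed range, the note range, and the function names are all assumptions. It scales the absolute rotation speed linearly onto a range of MIDI note numbers and converts the result to a frequency using the standard equal-temperament formula.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    value = max(in_lo, min(in_hi, value))
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


def midi_to_hz(note):
    """Convert a (possibly fractional) MIDI note number to Hz, with A4 = note 69 = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69.0) / 12.0)


def ring_speed_to_hz(speed_deg_per_s, max_speed=360.0, low_note=48.0, high_note=84.0):
    """Map a ring's rotation speed to a pitch; direction is ignored in this sketch.

    All default ranges are hypothetical values picked for illustration.
    """
    note = scale(abs(speed_deg_per_s), 0.0, max_speed, low_note, high_note)
    return midi_to_hz(note)
```

With these assumed defaults, a stationary ring sits at the bottom of the note range and a ring spinning at one full turn per second (or faster) sits at the top, so speeding a gear up raises the pitch continuously.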

For this performance, we ran three instances of the Carillon across the network using fairly beefy gaming laptops with 2 or 3GB video cards. However, to get optimal framerates for the Oculus Rift, this work really should be performed using faster video cards. Nonetheless, the tracking of the Leap Motion Controller has been really nice for us, as the gestures remain intact even at these lowered framerates.

What was the design and testing process like?

Rob: Chris and I have been designing and building iterations of the Carillon for a while now, as the work is actually one piece in a large work that is also made up of earlier works of ours: ECHO::Canyon (2014) and Tele-harmonium (2010). The idea of a mechanical instrument that responds to performers’ motions is something we really enjoy, and we have been exploring different ways to make human gestures and machine-driven virtual interactions “make sense” for both performers/players and audiences.

Testing with the Oculus Rift and the Leap Motion Controller within Unreal has been pretty fun. Where the DK1 was pretty painful to develop for (physically painful), the DK2 is much more forgiving of the lower framerates we’re using on laptops. The Leap Motion integration has been extremely fun, especially in HMD mode where the fun really starts. The experience of reaching out into our interface while immersed in the Rift and tracking our avatar arms is really magical and compelling.

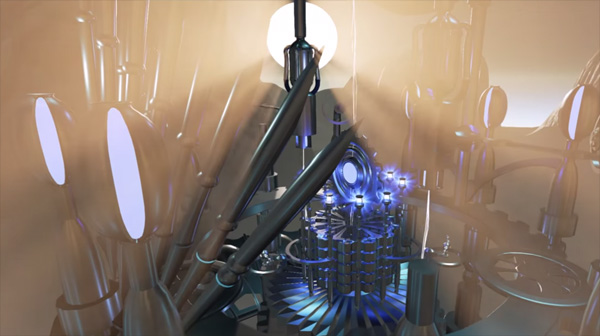

Chris: On the graphics side, most game designers (especially world builders) get greedy in making this large-scale area. We always really like to push that, and I think it paid off because we know how large we can go. One of the most exciting things about stereoscopy is really feeling like you’re standing on the edge of a 200-foot object rather than this little TV screen on this little box. I think I’m going to repurpose some of these environments and have some smaller playable areas that will perform really well.

What’s the experience like for the person inside the belltower?

Rob: Each performer’s avatar stands on a platform within the Carillon, looking up at the giant spinning rings in the center. Directly in front of them is a smaller set of rings with which they can interact – holding a hand over a ring or set of rings selects them, and swiping gestures (left/right, up/down, away/toward) start the rings spinning. That spinning on each axis controls sound from the Pure Data patch. Across the network, each performer is working with the same set of rings, so collaboration is needed to create something truly musical, just like in traditional performance. Under the performer’s feet is a set of bells being struck by the Carillon itself, playing a melody that shapes the piece.

The Leap Motion Controller was a key component to this piece. Being able to reach out and select a ring, then to “physically” start it spinning with your hand is a visceral experience with which simply tapping a key on the keyboard or pressing a button on a gamepad can’t compare. The controller really creates a sense of embodiment with the environment, as your own physical motion really drives the machine and the music it creates.

How did the audience react to the performance?

Rob: People are really just starting to understand what kinds of things these great pieces of technology like the Leap can allow artists and creatives to build. Most people in the audience are really curious to know what’s going on beneath the Rifts; what the performers are really doing and how the whole system works. They hear what we’re doing and see how the Carillon itself is moving but they want to know how it works. The image of us onstage swiping our hands back and forth isn’t what most concert-goers are used to or were really expecting when they came to the show.

How will people be creating and experiencing music in 2020?

Rob: We’re at such a great time to be building creative musical technology-based experiences. We can move sound across networks bringing together performers from other sides of the world in real time, interacting with one another in virtual space. In five years I think the steady march of technology will continue. As we all become more comfortable with technology in our daily lives, it will seem less and less strange to see it blending with traditional musical performance practice, instrument design and concert performance. The idea of mobile musical instruments is really powerful, and you can flip that into more traditional video gaming terms, where the ability for these networked spaces to connect people is phenomenal.

About the Creators

Rob Hamilton is a composer and researcher who spends his time obsessing about the intersections between interactive media, virtual reality, music and games. As a creative systems designer and software developer, he’s explored massively-multiplayer networked mobile music systems at Smule, received his PhD in Computer-based Music Theory and Acoustics from Stanford University’s Center for Computer Research in Music and Acoustics (CCRMA) and this fall will join the faculty at Rensselaer Polytechnic Institute (RPI) in New York as an Assistant Professor of Music and Media.

Chris Platz is a virtual world builder, game designer, entrepreneur, and artist who creates interactive multimedia experiences with both traditional tabletop and computer-based game systems. He has worked in the industry with innovators Smule and Zynga, and created his own games for the iOS, Facebook, and Origins Game Fair. His real claim to fame is making interactive stories and worlds for Dungeons and Dragons for over 30 years. He holds a BA in Business & Biotechnology Management from Menlo College, and an MFA in Computer Animation from Art Institute of CA San Francisco. From 2007-2010 Chris served as an Artist in Residence at Stanford University in Computer Graphics and he is currently an Assistant Professor of Animation at California College of the Arts.

Together Rob and Chris teach the CCRMA “Designing Musical Games::Gaming Musical Design” Summer workshop at Stanford, where students learn how to explore cutting-edge techniques for building interactive sound and music systems for games and 2D/3D rendered environments. To better understand the link between virtual space and sound, students learn the basics of 3D art and modelling, game programming, interactive sound synthesis and computer networking using Open Sound Control. Learn more about the Designing Musical Games Summer Workshop at https://ccrma.stanford.edu/
