We’ve seen how hardware, software, and graphics constraints all contribute to latency. Now it’s time to put them together and ask what we can take away from this analysis.

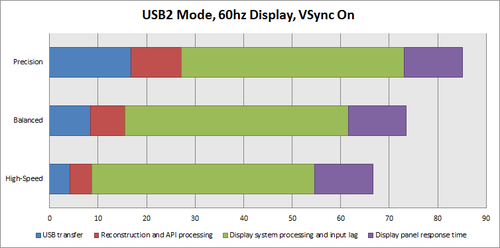

The best way to illustrate the impact of these factors is to compare their contributions under different scenarios. These measurements are averages across a few different machines, so your own machine may perform better or worse than these numbers.

We’ll start with the worst-case scenario, which is also frighteningly typical:

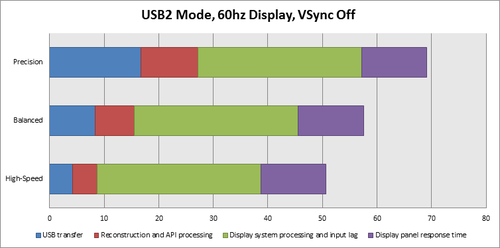

This is incredibly frustrating to us here at Leap Motion, since the vast majority of the perceived latency is outside our control. However, let’s see what happens when we disable vertical synchronization:

Disabling VSync saves anywhere from 16ms to over 30ms, depending on the particular graphics system. The downside, as we discussed in part one, is screen tearing.

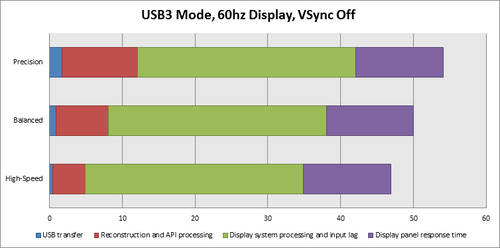
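Because VSync holds a finished frame until the display’s next refresh, its cost is naturally measured in refresh intervals. The minimal sketch below converts the 16ms and 30ms savings quoted above into frames, assuming a 60 Hz display (the refresh rate behind these measurements isn’t stated here, so treat that as an assumption). At 60 Hz one interval is 1000 / 60 ≈ 16.7ms, so the savings correspond to roughly one to two frames.

```python
def frame_period_ms(refresh_hz: float) -> float:
    """Duration of one display refresh interval, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def savings_in_frames(saved_ms: float, refresh_hz: float) -> float:
    """Express a latency saving as a number of refresh intervals."""
    return saved_ms / frame_period_ms(refresh_hz)


if __name__ == "__main__":
    # 16 ms and 30 ms come from the text; the 60 Hz refresh rate is an assumption.
    for saved in (16.0, 30.0):
        frames = savings_in_frames(saved, 60.0)
        print(f"{saved:.0f} ms saved = {frames:.2f} frames at 60 Hz")
    # The same arithmetic for a 120 Hz panel.
    print(f"One frame at 120 Hz = {frame_period_ms(120.0):.2f} ms")
```

The same function gives about 8.3ms per interval at 120 Hz, so each frame of VSync delay costs half as much on a 120 Hz monitor.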

If you have a USB3 cable and port, you can dramatically reduce data transfer time between the device and your computer, as we showed earlier. Here’s how that reduction compares to the other sources of latency:

In this setup, in High-Speed mode, our hardware and software together contribute only a little over 10% of the overall perceived latency. (Did I mention we were frustrated?)

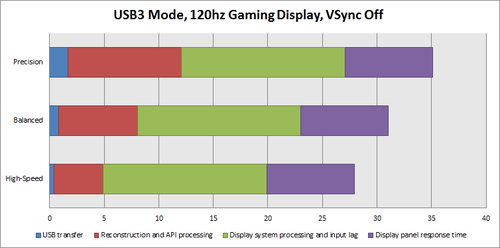
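The “a little over 10%” figure is a ratio: the device-side stages divided by the end-to-end total. This minimal sketch models the pipeline as a mapping from stage name to milliseconds. Treating USB transfer and reconstruction + API processing as the device-side stages follows the breakdown given in the comments below; the stage names, the `device_share` helper, and the millisecond values in the example are hypothetical illustrations, not the measurements behind the charts.

```python
from typing import Iterable, Mapping

# Hypothetical stage names for the parts of the pipeline the device and its
# software control (USB transfer, then reconstruction + API processing).
DEVICE_STAGES = {"USB transfer", "Reconstruction + API processing"}


def device_share(
    stages: Mapping[str, float],
    device_stages: Iterable[str] = DEVICE_STAGES,
) -> float:
    """Fraction of total perceived latency contributed by device-side stages.

    The stages run in sequence, so the end-to-end latency is their sum.
    """
    total = sum(stages.values())
    if total <= 0:
        raise ValueError("total latency must be positive")
    names = set(device_stages)
    device = sum(ms for name, ms in stages.items() if name in names)
    return device / total


if __name__ == "__main__":
    # Placeholder values (ms) invented to exercise the function; not measurements.
    example = {
        "USB transfer": 2.0,
        "Reconstruction + API processing": 3.0,
        "Rendering": 10.0,
        "Display input lag": 20.0,
        "Panel response": 10.0,
    }
    print(f"device-side share: {device_share(example):.0%}")
```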

High-performance “gaming” monitors are fairly common these days, offering reduced input lag and panel response time. Let’s take a look at the numbers for the best overall configuration:

For the first time, in High-Speed mode, we’ve dropped below the 30-millisecond threshold mentioned earlier. The hope is that over time you won’t need to jump through as many hoops to reach that level of responsiveness, and that it will instead be taken for granted.
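The walkthrough above changes one thing at a time and watches the total fall until it drops below 30 milliseconds. The hypothetical sketch below replays that process: `walk_configurations` (an illustrative name) applies each change on top of the previous ones and reports whether the total is under the threshold. The per-stage values are placeholders invented to exercise the code, not the charted measurements.

```python
from typing import Iterator, Mapping, Sequence, Tuple

THRESHOLD_MS = 30.0  # the perceived-latency threshold mentioned earlier in the series


def walk_configurations(
    base: Mapping[str, float],
    changes: Sequence[Tuple[str, Mapping[str, float]]],
    threshold_ms: float = THRESHOLD_MS,
) -> Iterator[Tuple[str, float, bool]]:
    """Apply each change on top of the previous ones.

    Yields (label, total_ms, below_threshold) for the baseline and after each change.
    """
    current = dict(base)  # copy so the caller's baseline is not modified
    total = sum(current.values())
    yield "baseline", total, total < threshold_ms
    for label, overrides in changes:
        current.update(overrides)  # replace the latencies of the affected stages
        total = sum(current.values())
        yield label, total, total < threshold_ms


if __name__ == "__main__":
    # Placeholder values (ms) chosen only to exercise the code; not measurements.
    baseline = {
        "USB transfer": 8.0,
        "Reconstruction + API processing": 4.0,
        "Rendering": 8.0,
        "VSync wait": 25.0,
        "Display input lag + panel response": 30.0,
    }
    steps = [
        ("VSync off", {"VSync wait": 0.0}),
        ("USB3", {"USB transfer": 2.0}),
        ("Gaming monitor", {"Display input lag + panel response": 12.0}),
    ]
    for label, total, ok in walk_configurations(baseline, steps):
        print(f"{label:>14}: {total:5.1f} ms {'(below threshold)' if ok else ''}")
```

When the changes touch different stages, their order affects only the intermediate totals, not the final one.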

Takeaways

We are continuously improving our software performance in order to reduce CPU load and increase responsiveness. However, the data tells us that the overall perceived latency depends on a lot of factors that are outside our control. So, in the meantime, here’s what you can do to optimize your experience:

- Use the device with a USB3 cable and port so that faster transfer rates are used.

- Use a 120 Hz monitor with a low response time. Many “gaming” monitors nowadays will work well, but you will sacrifice wide viewing angles and color accuracy as a result.

- In your graphics driver settings, disable vertical synchronization (VSync). If you want to leave VSync on, enable triple buffering to compensate for some of the additional delay. With a 120 Hz monitor, you might find that VSync with triple buffering gives the best experience.

- If you need to squeeze out maximum responsiveness, run the device in High-Speed mode, which trades tracking quality for speed and frame rate.

- As a last resort, use a CRT display. These bulky analog displays have no input lag, higher refresh rates, and effectively zero panel response time. However, it’s 2013 and these may be hard to find.

What do you think about the problem of latency? Post your thoughts in the comments.

UPDATE: June 2014. We’ve been continually refining and optimizing our tracking in the year since this post was published. Latency will always be a challenge, so long as the speed of light and the laws of thermodynamics still apply, but these are issues that we’re excited to work on, “not because they are easy, but because they are hard.” The current software uses only USB 2.0 data speeds, but future versions will also take advantage of USB 3.0 speeds.

Is it possible to get data BEFORE the “green part” (display)? I am working on a music-oriented app where low latency is essential, but graphics CAN lag a bit if the audio is on time. I only need the middle-of-palm point in space for both hands, but I need it with the lowest latency possible. According to your text, should this data be accessible in under 10ms? Am I right?

February 5, 2014 at 4:43 am

The app has the data before display, which is just the final step in the whole process, and happens after the app has processed everything. The delay until the app receives data is the sum of the USB transfer time and reconstruction + API processing.

February 5, 2014 at 12:14 pm

What about the latency of various API state changes? Do you have exact or approximate timing for palm center point coordinate updates? Is it the first to be produced?

February 5, 2014 at 1:10 pm

Unfortunately I don’t have that data for you, though we’ve had lots of devs working on musical projects with no apparent latency (i.e. while latency exists, it’s beneath the human perception threshold). Depending on the specs of the app you want to create, you may want to take your question to the forums and find out what other devs’ experiences have been with musical latency 🙂

February 5, 2014 at 4:18 pm

[…] We looked at how to take a real-time 3D scanner and sync that up to a virtual environment that a person can physically interact with in a visceral way. To make this work we had to figure out how to scan someone’s position in real-time, export that to a particle engine, emit particles at that point in time, and render that to the display frame. We also know that the latency needs to be less than 30 ms to effectively trick the brain into thinking what it is seeing is real-time. There is a great blog post by the developers of Leap Motion on this subject. [2. https://www.leapmotion.com/blog/understanding-latency-part-1/] [3. https://www.leapmotion.com/blog/understanding-latency-part-2/] […]

April 19, 2014 at 12:49 pm

Hey, just wondering how these diagrams compare to the current Balance, Robust, and Low Resource modes? Does V2 utilize USB3 transfer speeds yet?

February 20, 2015 at 8:15 pm

V2 does not yet use USB3 transfer speeds.

February 23, 2015 at 6:11 am

When accessing the Leap Motion data in code (hand position, fingertip position, etc.), is it only subject to the “USB transfer” and “reconstruction and API processing” latency? Do these latency calculations describe only the delays you see when visualizing the data, in the diagnostic visualizer or by other means?

July 10, 2015 at 10:55 am

What are the numbers displayed under “processing latency” in the diagnostic visualizer “tracking information”? Is this the “reconstruction and API processing latency” only? And how can this information be accessed in code?

July 10, 2015 at 10:58 am

Processing latency is just the amount of time it takes for the frames to be handled by the system. It doesn’t include latency due to the USB stack or, I believe, time to transfer frames to the client API.

July 10, 2015 at 3:06 pm

Does the latency change depending on the “Main Display Refresh Rate”?

If my system also has a sub display, which display does the latency depend on?

May 22, 2017 at 7:49 pm