Explorations in VR Design is a journey through the bleeding edge of VR design – from architecting a space, to designing groundbreaking interactions, to making users feel powerful.

In the world of design, intuition is a dangerous word. In reality, no two people have the same intuitions. Instead, we’re trained by our physical experiences and culture to have triggered responses based on our expectations. The most reliable “intuitive actions” are ones where we guide users into doing the right thing through familiarity and affordance.

Explore our latest XR guidelines →

This means any new interface must build on simple interactions that become habit over time. These habits create a commonly shared set of ingrained expectations that can be built upon for much more powerful interfaces. Today, we’ll look at broad design principles around VR interaction design, including the three types of VR interactions, the nature of flow, and an in-depth analysis of the interaction design in Blocks.

3 Kinds of Interactions

Designing for hands in VR starts with thinking about the real world and our expectations. In the real world, we never think twice about using our hands to control objects. We instinctively know how. The “physical” design of UI elements in VR should build on these expectations and guide the user in using the interface.

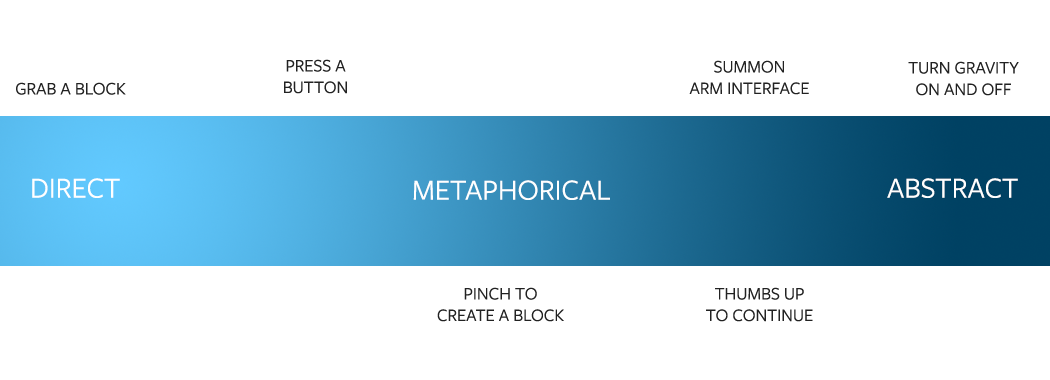

There are three types of interactions, ranging from easy to difficult to learn:

Direct interactions follow the rules of the physical world. They occur in response to the ergonomics and affordances of specific objects. As a result, they are grounded and specific, making them easy to distinguish from other types of hand movements. Once the user understands that these interactions are available, there is little or no extra learning required. (For example, pushing an on/off button in virtual reality.)

Metaphorical interactions are partially abstract but still relate in some way to the real world. For example, pinching the corners of an object and stretching it out. They occupy a middle ground between direct and abstract interactions.

Abstract interactions are totally separate from the real world and have their own logic, which must be learned. Some are already familiar, inherited from desktop and mobile operating systems, while others will be completely new. Abstract interactions should be designed with our ideas about the world in mind. While these ideas may vary widely from person to person, it’s important to understand their impact on meaning to the user. (For example, pointing at oneself when referring to another person would feel strange.)

Direct interactions can be implied and continually reinforced through the use of affordance in physical design. Use them as frequently as possible. Metaphorical and abstract interactions require more careful treatment, and may need to be introduced and reinforced throughout the experience. Used well, all three kinds of interactions can be incredibly powerful.

Immersion and Flow

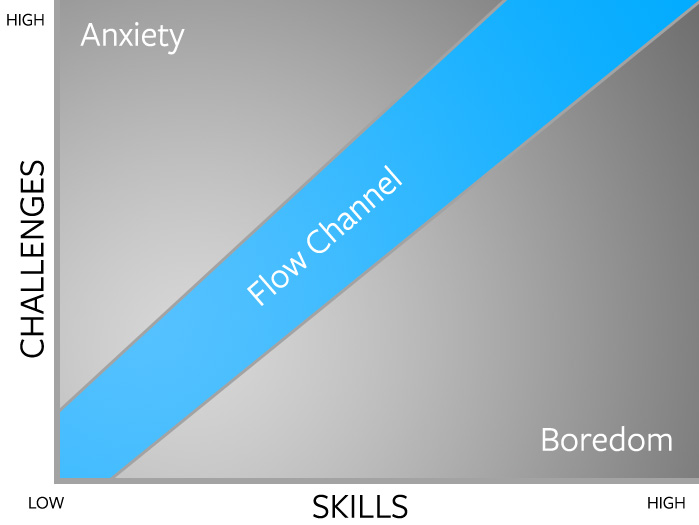

As human beings, we crave immersion and “flow,” a sense of exhilaration when our bodies or minds are stretched to their limits. It’s the feeling of being “in the zone” on the sports field, or becoming immersed in a game. Time stands still and we feel transported into a higher level of reality.

Creating the potential for flow is a complex challenge in game design. For it to be sustained, the player’s skills must meet proportionately complex challenges in a dynamic system. Challenges build as the player’s skill level grows. Too challenging? The game becomes frustrating and players will rage-quit. Not challenging enough? The game is boring and players move on. But when we push our skills to meet each rising challenge, we achieve flow.

To design for flow, start with simple physical interactions that don’t require much physical or mental effort. From there, build on the user’s understanding and elevate to more challenging interactions.
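
One way to reason about this balance is as a ratio of challenge to skill. The sketch below is purely illustrative: the function names, the numeric scales, and the 0.8 to 1.25 "flow band" are assumptions made for the example, not values taken from Blocks or from any published model.

```python
def flow_state(challenge: float, skill: float,
               low: float = 0.8, high: float = 1.25) -> str:
    """Classify the player's likely state from a challenge-to-skill ratio.

    `low` and `high` are hypothetical tuning values bounding the flow band.
    """
    if skill <= 0:
        raise ValueError("skill must be positive")
    ratio = challenge / skill
    if ratio > high:
        return "frustrated"  # challenge outpaces skill
    if ratio < low:
        return "bored"       # skill outpaces challenge
    return "flow"


def next_challenge(skill: float, stretch: float = 1.1) -> float:
    """Pick a challenge slightly above current skill so the player is stretched."""
    return skill * stretch
```

In practice, "skill" is hard to measure directly; a proxy such as recent success rate would have to stand in for it.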

Core Orion Interactions

Leap Motion Orion tracking was designed with simple physical interactions in mind, starting with pinch and grab. The pinch interaction allows for precise physical control of an object, and corresponds with user expectations for stretching or pulling at a small target, such as a small object or part of a larger object. Grab interactions are broader and allow users to interact directly with a larger object.

Towards the more abstract end of the spectrum, we’ve also developed a toolkit for basic hand poses, such as the “thumbs up” gesture. These should be used sparingly and accompanied by tutorials or text cues.

Building Blocks: The User’s Journey

As a VR experience designer, you’ll want to include a warm-up phase where the core interactions and narrative can be learned progressively. Oculus Story Studio’s Henry and Lost begin with dark, quiet scenes that draw your attention – to an adorable hedgehog or whimsical firefly – setting the mood and narrative expectations. (Without the firefly, players of Lost might wonder if they were in danger from the robot in the forest.) Currently, you should give the viewer about 30 seconds to acclimate to the setting, though this time scale is likely to shrink as more people become accustomed to VR.

While Blocks starts with a robot tutorial, most users should be able to learn the core experience without much difficulty. The most basic elements of the experience are either direct or metaphorical, while the abstract elements are optional.

Thumbs up to continue. While abstract gestures are usually dangerous, the “thumbs up” is consistently used around the world to mean “OK.” Just in case, our tutorial robot makes a thumbs up, and encourages you to imitate it.

Pinch with both hands to create a block. This metaphorical interaction can be difficult to describe in words (“bring your hands together, pinch with your thumbs and index fingers, then separate your hands, then release the pinch!”) but the user “gets it” instantly when seeing how the robot does it. The entire interaction is built with visual and sound cues along the way:

- Upon detecting a pinch, a small blue circle appears at your thumb and forefinger.

- A low-pitched sound effect plays, indicating potential.

- When spawning, the block glows red in your hands. It’s not yet solid, but instead appears to be made of energy. (Imagine how unsatisfying it would be if the block appeared grey and fully formed when spawning!)

- Upon release, a higher-pitched sound plays to indicate that the interaction is over. The glow on the block cools as it assumes its final physical shape.
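
The cue sequence above can be thought of as a small state machine. The following is a minimal sketch under stated assumptions, not the actual Blocks implementation: the `BlockSpawner` class, the cue names, and the `min_size` threshold are all hypothetical, and the pinch flags and hand gap are assumed to come from whatever hand tracking layer you use.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Phase(Enum):
    IDLE = auto()
    PINCHING = auto()  # both hands pinching, block not yet stretched out
    SPAWNING = auto()  # hands separating, block shown as glowing "energy"


@dataclass
class BlockSpawner:
    min_size: float = 0.03  # metres between hands; hypothetical threshold
    phase: Phase = Phase.IDLE
    cues: list = field(default_factory=list)  # cue names emitted so far

    def update(self, left_pinch: bool, right_pinch: bool, hand_gap: float) -> None:
        """Advance the interaction by one tracking frame."""
        both = left_pinch and right_pinch
        if self.phase is Phase.IDLE and both:
            self.phase = Phase.PINCHING
            self.cues += ["show_pinch_circles", "play_low_tone"]
        elif self.phase is Phase.PINCHING:
            if not both:
                # Pinch abandoned before a block was stretched out.
                self.phase = Phase.IDLE
                self.cues.append("hide_pinch_circles")
            elif hand_gap >= self.min_size:
                self.phase = Phase.SPAWNING
                self.cues.append("show_glowing_block")
        elif self.phase is Phase.SPAWNING and not both:
            # Release: the block cools into its final solid shape.
            self.phase = Phase.IDLE
            self.cues += ["play_high_tone", "cool_glow_and_solidify"]
```

Keeping the cues as explicit transitions makes it easy to check that every stage of the interaction gives the user some feedback.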

Grab a block. This is as direct and natural as it gets – something we’ve all done since childhood. Reach out, grab with your hand, and the block follows it. Immersive, lifelike interactions like this are actually enormously complicated to build in VR, as digital physics engines were never designed for human hands reaching into them. Blocks achieves this with an early prototype of our Interaction Engine.

Turn gravity on and off. Deactivating gravity is a broad, sweeping interaction for something that massively affects the world around you. The act of raising both hands fits with the “lifting up” of the blocks you’ve created. Similarly, restoring gravity requires the opposite – bringing both of your hands down. While abstract, the action still feels like it makes sense. In both cases, completing the interaction causes the blocks to emit a warm glow. This glow moves outwards in a wave, showing both that (1) you have created the effect, and (2) it specifically affects the blocks and their behavior.
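
As a rough illustration of how a two-handed sweep might be recognized, the sketch below toggles gravity only when both hands move quickly in the same vertical direction. The `update_gravity` function, the velocity inputs, and the 0.8 m/s threshold are assumptions for the example; the source does not describe how Blocks detects the gesture.

```python
def update_gravity(gravity_on: bool, left_vy: float, right_vy: float,
                   speed: float = 0.8) -> bool:
    """Return the new gravity state.

    left_vy / right_vy: each hand's vertical velocity in m/s, positive is up.
    speed: hypothetical minimum sweep speed for the gesture to count.
    """
    if gravity_on and left_vy > speed and right_vy > speed:
        return False  # both hands sweep up: blocks float
    if not gravity_on and left_vy < -speed and right_vy < -speed:
        return True   # both hands sweep down: gravity restored
    return gravity_on  # anything else leaves the world unchanged
```

Requiring both hands keeps ordinary one-handed reaching and grabbing from triggering a change that affects the whole scene.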

Change the block shape. Virtual reality gives us the power to augment our digital selves with capabilities that mirror real-world wearable technologies. We are all cyborgs in VR. For Blocks, we built an arm interface that only appears when the palm of your left hand is facing up. This is a combination of metaphorical and abstract interactions, so the interface has to be very clean and simple. With only three large buttons, spaced far apart, users can play and explore their options without making irreversible changes.
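
A common way to test whether a palm is "facing up" is to compare the palm's normal vector with the world up direction. The sketch below assumes a tracking layer that supplies a palm normal pointing out of the palm; the `palm_facing_up` function and the 40 degree tolerance are hypothetical and not taken from Blocks.

```python
import math


def palm_facing_up(palm_normal, up=(0.0, 1.0, 0.0), max_angle_deg=40.0) -> bool:
    """True when the palm normal is within max_angle_deg of the up vector."""
    dot = sum(n * u for n, u in zip(palm_normal, up))
    norm = (math.sqrt(sum(n * n for n in palm_normal))
            * math.sqrt(sum(u * u for u in up)))
    if norm == 0:
        return False  # degenerate vector: treat as not facing up
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg
```

A real interface would likely use a slightly wider angle for hiding than for showing, so the menu does not flicker when the hand hovers near the threshold.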

Revisiting our three interaction types, we find that the essential interactions are direct or metaphorical, while abstract interactions are optional and can be easily learned:

- Direct: grab a block

- Metaphorical: create a block, press a button

- Abstract: thumbs up to continue, turn gravity on and off, summon the arm interface

From there, players have the ability to create stacks, catapults, chain reactions, and more. Even when you’ve mastered all the interactions in Blocks, it’s still a fun place to revisit.

Text and Tutorial Descriptions

Text and tutorial prompts are often essential elements of interactive design. Be sure to clearly describe intended interactions, as this will greatly affect how the user performs them. Avoid instructions that could be interpreted in many different ways, and be as specific as possible.

Using text in VR can be a design challenge in itself. Due to resolution limitations, only text at the center of your field of view may appear clear, while text along the periphery may seem blurry unless users turn to view it directly.

Another issue arises from lens distortion. As a user’s eyes scan across lines of text, the positions of the pupils will change, which may cause distortion and blurring. Furthermore, if the distance to the text varies – which would be caused, for example, by text on a flat laterally extensive surface close to the user – then the focus of the user’s eyes will change, which can also cause distortion and blurring.

The simplest way to avoid this problem is to limit the angular range of text to be close to the center of the user’s field of view. For example, you can make text appear on a surface only when a user is looking directly at the surface, or when the user gets close enough to that surface. Not only will this significantly improve readability, it makes the environment feel more responsive.
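
The two conditions described here (looking directly at the surface, or standing close to it) can be sketched as a simple visibility test. The `should_show_text` function, the 15 degree cone, and the 0.5 metre proximity radius are assumptions chosen for illustration only.

```python
import math


def should_show_text(head_pos, gaze_dir, text_pos,
                     max_angle_deg=15.0, near_dist=0.5) -> bool:
    """Show text when the user is close to it or looking almost straight at it."""
    to_text = [t - h for t, h in zip(text_pos, head_pos)]
    dist = math.sqrt(sum(c * c for c in to_text))
    if dist <= near_dist:
        return True  # close enough: show regardless of gaze direction
    gaze_len = math.sqrt(sum(g * g for g in gaze_dir))
    if gaze_len == 0:
        return False  # no usable gaze direction
    dot = sum(g * c for g, c in zip(gaze_dir, to_text))
    cos_angle = max(-1.0, min(1.0, dot / (gaze_len * dist)))
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg
```

Fading the text in and out as the angle crosses the threshold, rather than switching it abruptly, would probably feel smoother in a headset.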

Users don’t always read static text within the environment, and tutorial text can clutter up the visual field. For this reason, you may want to design prompts that are attached to the user’s hands, or that appear contextually near objects and interfaces. Audio cues can also be enormously helpful in driving user interactions.

Designing for any platform begins with understanding its unique strengths and pitfalls. Next week, we’ll look at designing for Orion tracking, plus essential tips for user safety and comfort.