When the Leap Motion Controller made its rounds at our office a couple of years ago, it’s safe to say we were blown away. For me, at least, it felt like something from the future: I could physically interact with my computer, moving an object on the screen with the motion of my hands. And that was amazing.

Fast-forward two years, and we’ve found that PubNub has a place in the Internet of Things… a big place. To put it simply, PubNub streams data bidirectionally to control and monitor connected IoT devices. PubNub is the glue that holds any number of connected devices together, making it easy to rapidly build and scale real-time IoT, mobile, and web apps by providing the data stream infrastructure, connections, and key building blocks that developers need for real-time interactivity.
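To make the publish/subscribe idea concrete, here is a minimal in-process sketch of the channel model. It is a toy stand-in, not the PubNub SDK: a real network delivers messages asynchronously over the internet, while this one calls subscribers synchronously. The class name `ToyBroker` and the channel names `leap_to_pi` and `pi_to_leap` are hypothetical. Using one channel per direction is what makes the link bidirectional.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Message = dict
Callback = Callable[[Message], None]


class ToyBroker:
    """In-process stand-in for a hosted pub/sub network (NOT the PubNub SDK).

    Publishers and subscribers share only a channel name; neither holds a
    reference to the other, so devices can join or leave independently.
    """

    def __init__(self) -> None:
        # channel name -> callbacks registered on that channel
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callback) -> None:
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, message: Message) -> int:
        """Deliver message to every subscriber of channel; return how many received it."""
        callbacks = self._subscribers.get(channel, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


if __name__ == "__main__":
    broker = ToyBroker()
    pi_inbox: List[Message] = []
    leap_inbox: List[Message] = []
    # Hypothetical channel names, one per direction.
    broker.subscribe("leap_to_pi", pi_inbox.append)    # the Raspberry Pi listens here
    broker.subscribe("pi_to_leap", leap_inbox.append)  # the Leap Motion host listens here
    broker.publish("leap_to_pi", {"pitch": 0.1})       # control data flows one way...
    broker.publish("pi_to_leap", {"status": "ok"})     # ...and status flows back
    print(pi_inbox, leap_inbox)
```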

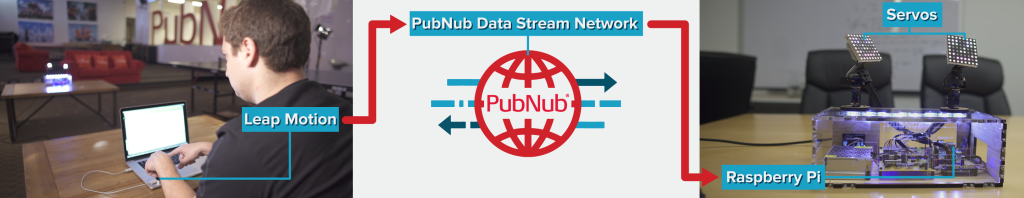

With that in mind, two of our evangelists had the idea to combine the power of Leap Motion with the brains of a Raspberry Pi to create motion-controlled servos. In a nutshell, the application enables a user to control servos using motions from their hands and fingers. Whatever motion their hand makes, the servo mirrors it. And even cooler, because we used PubNub to connect the Leap Motion to the Raspberry Pi, we can control our servos from anywhere on Earth.

In this post, we’ll take a general look at how the integration and interactions work. Be sure to check out the full tutorial on our blog, where we show you how to build the entire project from scratch. The complete code is available in our project GitHub repository and on the Leap Motion Developer Gallery.

Detecting Motion with Leap Motion

We started by setting up the Leap Motion Controller to detect exactly the data we wanted, including the yaw, pitch, and roll of the user’s hands. In our tutorial, we walk through how to stream that data (in this case, hand and finger movements) from the Leap Motion to the Raspberry Pi. To mirror the user’s hands in real time, the Leap Motion side of the app publishes messages via PubNub 20 times per second, each carrying information about both hands and all of their fingers. On the other end, the Raspberry Pi subscribes to the same channel and parses these messages to control the servos and the lights.
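The publishing side comes down to two pieces: serializing a hand’s orientation as JSON, and capping the send rate at 20 messages per second even though the tracker produces frames faster. Below is a minimal sketch under stated assumptions. The field names in `hand_message` are hypothetical (the post does not show its schema), angles are assumed to be in radians, and the simulated 10 ms frame spacing is an assumed tracker rate. Timestamps are integer milliseconds so the interval comparison is exact.

```python
import json
from typing import List, Optional

PUBLISH_HZ = 20                       # the post's rate: 20 messages per second
MIN_INTERVAL_MS = 1000 // PUBLISH_HZ  # 50 ms between published messages


def hand_message(yaw: float, pitch: float, roll: float) -> str:
    """Serialize one hand's orientation (assumed radians) as compact JSON.

    The field names are hypothetical; the post does not show its message schema.
    """
    return json.dumps({"yaw": round(yaw, 3), "pitch": round(pitch, 3), "roll": round(roll, 3)})


class Throttle:
    """Pass at most one message per interval; frames arriving in between are dropped."""

    def __init__(self, interval_ms: int) -> None:
        self.interval_ms = interval_ms
        self._last_sent_ms: Optional[int] = None

    def ready(self, now_ms: int) -> bool:
        if self._last_sent_ms is None or now_ms - self._last_sent_ms >= self.interval_ms:
            self._last_sent_ms = now_ms
            return True
        return False


if __name__ == "__main__":
    throttle = Throttle(MIN_INTERVAL_MS)
    published: List[str] = []
    # Simulate one second of tracking frames arriving every 10 ms (assumed rate).
    for now_ms in range(0, 1000, 10):
        if throttle.ready(now_ms):
            # A real app would publish this payload to its channel here.
            published.append(hand_message(yaw=0.1, pitch=-0.2, roll=0.05))
    print(len(published))  # 20
```

In a live loop, `now_ms` could come from `int(time.monotonic() * 1000)`. Dropping intermediate frames, rather than queuing them, keeps the servos tracking the hand’s latest position instead of replaying stale ones.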

Controlling Servos with Raspberry Pi

In the second part of our tutorial, we walk through how to receive the Leap Motion data with the Raspberry Pi and drive the servos. This part covers how to subscribe to the PubNub data channel, receive the Leap Motion movements, parse the JSON, and drive the servos with the new values. The result? Techno magic.
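On the Pi, each message has to become a servo position. The sketch below parses a JSON message and maps a pitch angle to a PWM value. It rests on several assumptions that are not from the post: the `{"pitch": ...}` schema is hypothetical; pitch in radians over [-π/2, π/2] maps linearly to 0–180°; the servo expects 1000–2000 µs pulses at 50 Hz (a common hobby-servo convention, but check your servo); and the PWM driver has a 12-bit counter (4096 ticks per period), as PCA9685-style boards do. Writing the returned tick count to hardware is left to whichever driver library you use.

```python
import json
import math

SERVO_MIN_US = 1000    # assumed pulse width at 0 degrees
SERVO_MAX_US = 2000    # assumed pulse width at 180 degrees
PWM_FREQ_HZ = 50       # typical hobby-servo frame rate (20 ms period)
PWM_RESOLUTION = 4096  # 12-bit counter, e.g. a PCA9685-style driver (assumption)


def radians_to_degrees_clamped(angle_rad: float) -> float:
    """Map [-pi/2, pi/2] radians onto [0, 180] servo degrees, clamping outliers."""
    deg = math.degrees(angle_rad) + 90.0
    return max(0.0, min(180.0, deg))


def degrees_to_pulse_us(deg: float) -> float:
    """Linearly interpolate the pulse width for a servo angle in degrees."""
    return SERVO_MIN_US + (SERVO_MAX_US - SERVO_MIN_US) * deg / 180.0


def pulse_us_to_ticks(pulse_us: float) -> int:
    """Convert a pulse width to the driver's on-time in counter ticks."""
    period_us = 1_000_000 / PWM_FREQ_HZ  # 20000 us at 50 Hz
    return round(pulse_us / period_us * PWM_RESOLUTION)


def handle_message(raw: str) -> int:
    """Parse one JSON message (hypothetical {"pitch": ...} schema) and return PWM ticks."""
    msg = json.loads(raw)
    deg = radians_to_degrees_clamped(float(msg["pitch"]))
    return pulse_us_to_ticks(degrees_to_pulse_us(deg))


if __name__ == "__main__":
    print(handle_message('{"pitch": 0}'))    # 307 (centered, 1500 us)
    print(handle_message('{"pitch": 10}'))   # 410 (clamped to 180 degrees)
    print(handle_message('{"pitch": -10}'))  # 205 (clamped to 0 degrees)
```

Clamping matters here: a hand can easily report an angle outside the servo’s travel, and sending an out-of-range pulse can stall the servo against its end stop. Yaw and roll could drive additional servos through the same mapping.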

Wrapping Up

We had a ton of fun building this demo, using powerful and affordable technologies to build something really unique. Even better, this tutorial can be repurposed for any case where you want to detect motion with a Leap Motion Controller, stream that data in real time, and carry out an action on the other end. You can open doors, close window shades, dim lights, or even play musical notes (air guitar, anyone?). We hope to see more Leap Motion, PubNub, and Raspberry Pi projects in the future!

It would be much cooler to use the Leap Motion directly on the Raspberry Pi. In the end, the project above is a Raspberry Pi controlling servos (you could easily use an Arduino for that), with the Leap Motion still connected to a PC.

August 25, 2015 at 11:45 pm
We hear you, but unfortunately the Raspberry Pi doesn’t even come close to the minimum requirements of running our core software.

August 26, 2015 at 6:50 am
Of course. However, there have been successful attempts to play with the Oculus on the RPi2, as well as many OpenCV projects with conventional cameras (I know you’re at 200 Hz, etc.), and people have also been using the Kinect / ASUS Xtion on the RPi2 and the ODROID-U. It would simply be cool if Leap Motion had a very basic SDK for Raspbian; I think people would then be creative enough to do fun things with it. You know that a huge part of the Kinect’s success was the robotics community.

August 26, 2015 at 6:57 am
Still, this is pretty cool, as it allows the average person at home to create remotely operated devices that don’t require a keyboard or mouse. I could imagine motion racers (gesture-controlled model car racing) or gesture-controlled robot wars.
