Explorations in VR Design is a journey through the bleeding edge of VR design – from architecting a space, to designing groundbreaking interactions, to making users feel powerful.

What’s the most important rule in VR? Never make your users sick. In this exploration, we’ll review the essentials of avoiding nausea, designing for good ergonomics, and laying out spaces for user safety and comfort.

Explore our latest XR guidelines →

The Oculus Best Practices, Designing for Google Cardboard, and other resources cover this issue in great detail, but no guide to VR design and development would be complete without it. (See also the Cardboard Design Lab demo and A UX designer’s guide to combat VR sickness.)

Above all, it’s important to remember that experiences of sensory conflict can vary a great deal between individuals. Just because it feels fine for you does not mean it will feel fine for everyone – user testing is always essential.

Restrict Motions to Interaction

Simulator sickness is caused by a conflict between different sensory inputs, namely the inner ear, the visual field, and the body’s sense of its own position. Generally, significant movement that the user hasn’t instigated – the whole room moving, rather than a single object – can trigger feelings of nausea. On the other hand, being able to control movement reduces the experience of motion sickness. We have found that hand presence within virtual reality is itself a powerful element that reinforces the user’s sense of space.

Remember when we said there were no hard-and-fast rules for VR design? Consider these to be strongly worded suggestions:

- The display should respond to the user’s movements at all times. Without exception. Even in menus, when the game is paused, or during cutscenes, users should be able to look around.

- Do not instigate any movement without user input (including changing head orientation, translation of view, or field of view). This includes shaking the camera to reflect an explosion, or artificially bobbing the head while the user walks through a scene. There are rare exceptions which we’ll cover in a future exploration on locomotion.

- Avoid rotating or moving the horizon line or other large components of the environment unless it corresponds with the user’s real-world motions.

- Reduce neck strain with experiences that reward (but don’t require) a significant degree of looking around. Try to restrict movement in the periphery.

- Ensure that the virtual cameras rotate and move in a manner consistent with head and body movements.
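
As a rough illustration of the suggestions above, here is a minimal Python sketch of a camera update that only accepts motion the user caused. The `CameraMotion` type, the source labels, and `apply_user_driven_motions` are hypothetical names invented for this example, not part of any particular engine or SDK.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical labels for where a requested camera motion came from.
USER_DRIVEN_SOURCES = frozenset({"head_tracking", "user_locomotion"})


@dataclass(frozen=True)
class CameraMotion:
    source: str        # e.g. "head_tracking", "camera_shake", "head_bob"
    translation: Vec3  # requested change in camera position, in meters


def apply_user_driven_motions(position: Vec3, motions: List[CameraMotion]) -> Vec3:
    """Apply only the motions that the user instigated; drop everything else."""
    x, y, z = position
    for motion in motions:
        if motion.source not in USER_DRIVEN_SOURCES:
            # Effects such as explosion shake or artificial head bob never
            # reach the camera, so the view matches the user's real movement.
            continue
        dx, dy, dz = motion.translation
        x, y, z = x + dx, y + dy, z + dz
    return (x, y, z)
```

In this sketch, head tracking is always passed through, including in menus or while paused, while an effect like an explosion would have to be conveyed some other way (for example, by moving objects in the scene rather than the camera).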

Ergonomics

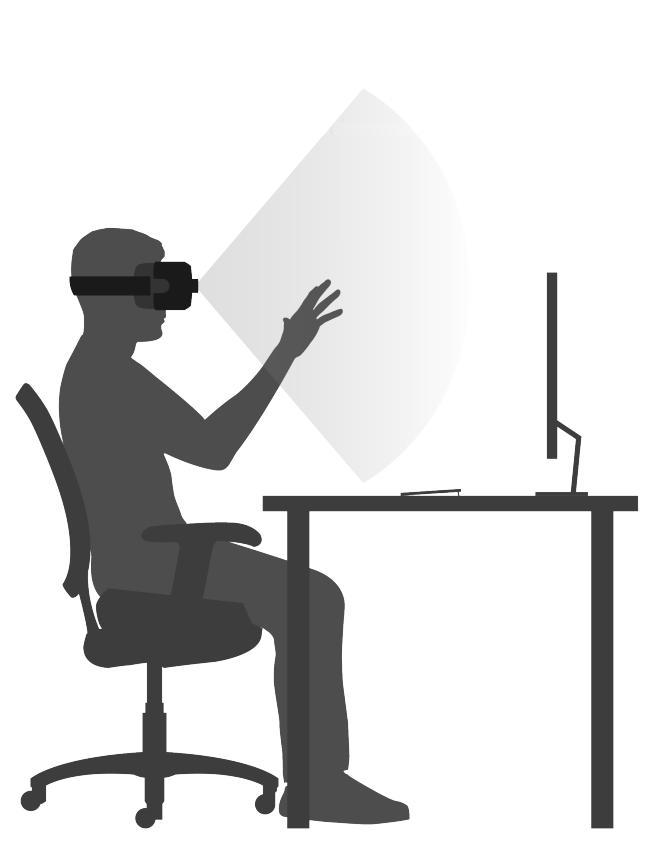

What will the digital experiences of the future look like, and how will you use them? You might imagine the large holographic interfaces in movies from Minority Report and The Matrix Reloaded to The Avengers and Ender’s Game. But what looks awesome on screen doesn’t always translate well to human experience – because fictional interface designers don’t need to worry about ergonomics.

To take the most enduring example, the Minority Report interface fails by forcing users to (1) wave their hands and arms around (2) at shoulder level (3) for extended periods of time. This quickly becomes exhausting.

This isn’t an interface. It’s a workout!

But with a more human-centered design approach, it’s possible to bring science fiction to life in other ways.

One way to avoid user fatigue is to mix up different types of interactions. This variety lets your user interact with the world in different ways and use different muscle groups. More frequent interactions should be brief, simple, and achieved with a minimum of effort, while less frequent interactions can be broader or require more effort. You’ll always want to position frequently used interactive elements within the human comfort zone (see Ideal Height Range below).

No one enjoys feeling cramped or boxed in. Our bodies tend to move in arcs, rather than straight lines, so it’s important to compensate by allowing for arcs in 3D space. That’s why many of our projects feature interfaces that are curved in an arc around the user, resembling a cockpit.
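
To make the cockpit idea concrete, here is a minimal Python sketch that spaces interface elements evenly along an arc centered on the user. It assumes the user stands at the origin facing +Z with +X to the right and +Y up; the function name `arc_layout` and the default radius, arc width, and height are illustrative assumptions, not recommended values.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def arc_layout(count: int, radius: float = 0.5,
               arc_degrees: float = 90.0, height: float = 1.2) -> List[Vec3]:
    """Return positions spaced evenly on a horizontal arc around the user."""
    if count < 1:
        return []
    if count == 1:
        angles = [0.0]  # a single element sits straight ahead
    else:
        step = arc_degrees / (count - 1)
        angles = [-arc_degrees / 2 + i * step for i in range(count)]
    positions = []
    for angle in angles:
        theta = math.radians(angle)
        # Every element is the same distance from the user, so reaching for
        # any of them follows the natural arc of the arm.
        positions.append((radius * math.sin(theta), height, radius * math.cos(theta)))
    return positions
```

Because each element sits at the same distance from the user, a panel at the edge of the arc is as easy to reach as one in the center, which a flat layout cannot offer.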

Human beings are great at gauging position in the horizontal and vertical planes, but terrible at judging depth. This is because our eyes are capable of very fine distinctions within our field of view (X and Y), while judging depth requires additional cognitive effort and is much less precise.

As a result, designing interactions for human beings means that you’ll need to be fairly forgiving of inaccurate motions. For example, a user might make a grabbing gesture near an object, rather than directly grabbing the object. In these cases, you may want to have the object snap into their hand. Similarly, continuous visual feedback is an essential component for touchless interfaces.
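
One simple way to be forgiving is to snap the nearest object within a small radius into the hand when the user grabs. The Python sketch below assumes positions are (x, y, z) tuples in meters; `find_snap_target` and the 10 cm default radius are hypothetical choices for illustration that you would tune through user testing.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def find_snap_target(hand: Vec3, objects: Dict[str, Vec3],
                     snap_radius: float = 0.10) -> Optional[str]:
    """Return the name of the closest object within snap_radius, or None."""
    best_name = None
    best_distance = snap_radius
    for name, position in objects.items():
        distance = math.dist(hand, position)
        if distance <= best_distance:
            # A near miss still counts as a grab; keep the closest candidate.
            best_name, best_distance = name, distance
    return best_name
```

For example, a grab made 5 cm to the side of a cube would still pick up the cube, while a grab far from every object returns `None` and nothing happens.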

Throughout the development process, always keep your user’s comfort in mind, particularly hand, arm, and shoulder fatigue. Again, user testing is essential in identifying possible fatigue and comfort issues.

For more general ergonomics guidelines, be sure to consult our post Taking Motion Control Ergonomics Beyond Minority Report along with our ergonomics and safety guidelines.

Ideal Height Range

Interactive elements within your scene should typically rest in the “Goldilocks zone” between desk height and eye level. For ergonomic reasons, the best place to put user interfaces is typically around the level of the breastbone.

Here’s what you need to consider when placing elements outside the Goldilocks zone:

Desk Height or Below

Be careful about putting interactive elements at desk height or below. For seated experiences, the elements may intersect with a real-world desk, breaking immersion. For standing experiences, users may not be comfortable bending down towards an element.

Eye Level or Above

Interactive objects that are above eye level in a scene can cause neck strain and “gorilla arm.” Users may also occlude the objects with their own hand when they try to use them.
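
The height ranges above can be sketched as a small placement check that you might run over a scene during design reviews. This is a hypothetical Python helper: `classify_height`, its labels, and the idea of passing desk height and eye level as measured inputs are assumptions made for this example.

```python
def classify_height(element_y: float, desk_height: float, eye_level: float) -> str:
    """Classify an interactive element's height relative to the user.

    All values are heights in the same unit (for example, meters from the floor).
    """
    if desk_height >= eye_level:
        raise ValueError("desk_height must be below eye_level")
    if element_y <= desk_height:
        # May intersect a real desk when seated, or force bending when standing.
        return "desk_height_or_below"
    if element_y >= eye_level:
        # Risks neck strain, arm fatigue, and the hand occluding the element.
        return "eye_level_or_above"
    return "goldilocks"
```

An element flagged as outside the zone is not necessarily wrong, but it is worth checking against the considerations above and confirming with user testing.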

Our Interaction Engine is designed to handle incredibly complex object interactions, making them feel simple and fluid. Next week’s exploration dives into the visual, physical, and interactive design of virtual objects.