As humans, we are spatial, physical thinkers. From birth we grow to understand the objects around us by the rules that govern how they move, and how we move them. These rules are so fundamental that we design our digital realities to reflect human expectations about how things work in the real world.

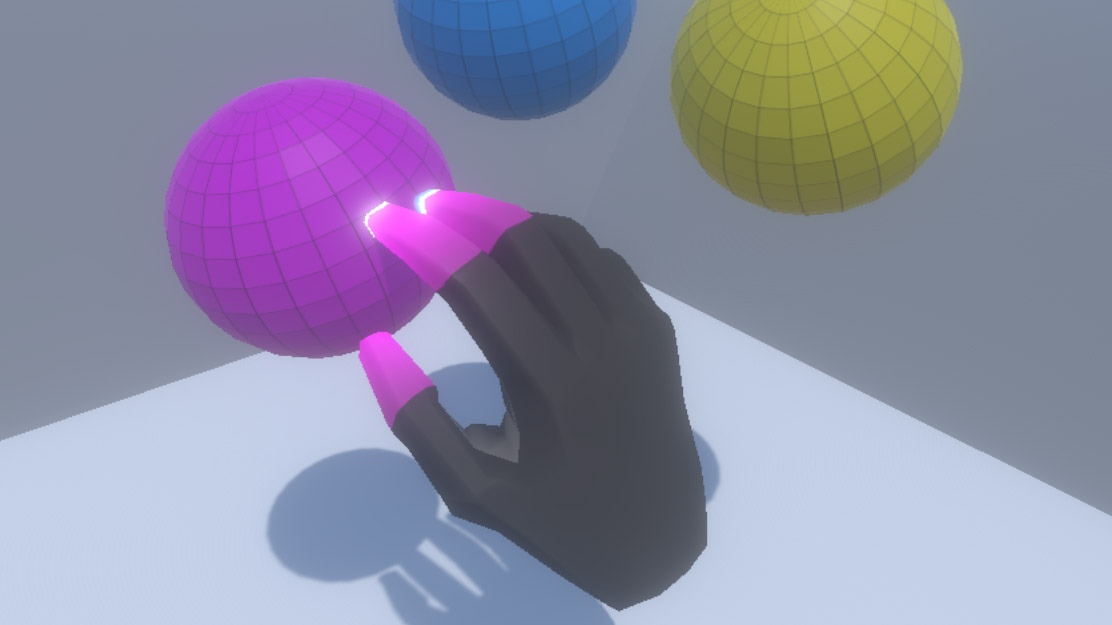

At Leap Motion, our mission is to empower people to interact seamlessly with the digital landscape. This starts with tracking hands and fingers with such speed and precision that the barrier between the digital and physical worlds begins to blur. But hand tracking alone isn’t enough to capture human intention. In the digital world there are no physical constraints. We make the rules. So we asked ourselves: How should virtual objects feel and behave?

We’ve thought deeply about this question, and in the process we’ve created new paradigms for digital-physical interaction. Last year, we released an early access beta of the Leap Motion Interaction Engine, a layer that exists between the Unity game engine and real-world hand physics. Since then, we’ve worked hard to make the Interaction Engine simpler to use – tuning how interactions feel and behave, and creating new tools to make it performant on mobile processors.

Today, we’re excited to release a major upgrade to this toolkit. It updates the engine’s fundamental physics functionality and makes it easy to create the physical user experiences that work best in VR. Because we see the power in extending VR and AR interaction across both hands and tools, we’ve also made it work seamlessly with both hands and PC handheld controllers. We’ve heard from many developers about the challenge of supporting multiple inputs, so this feature makes it easier to support hand tracking alongside Oculus Touch or Vive controllers.

Let’s take a deeper look at some of the new features and functions in the Interaction Engine.
