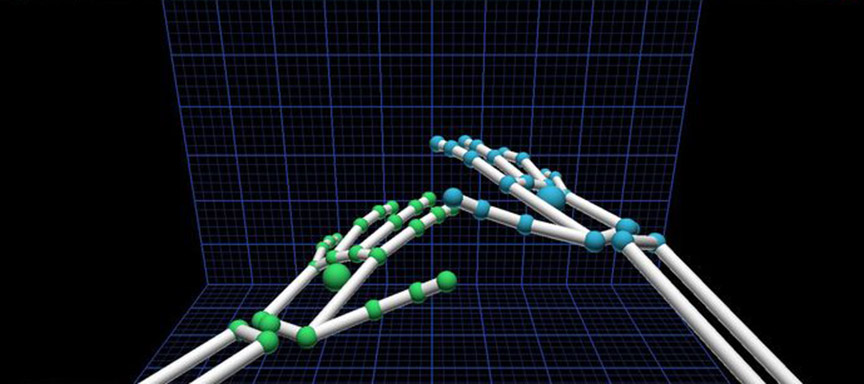

Immersion is everything in a VR experience. Since your hands don’t actually float in space (unless you’re this guy), we created a new Forearm API that tracks your physical arms. This makes it possible to create a more realistic experience with onscreen forearms.

How it works

Although Arm is a separate class, it behaves much like a Bone and shares many of the same functions. It provides direction, width, and elbow and wrist positions: everything you need to create an onscreen forearm.
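To make the shape of that data concrete, here is a minimal sketch in plain Python. It does not call the Leap Motion SDK: `ForearmSample` and its field names are hypothetical stand-ins for one frame of arm data, and millimetre units are an assumption. The sketch only shows how a direction and a length follow from the elbow and wrist positions, which is the geometry you need to place and scale an onscreen forearm.

```python
from dataclasses import dataclass
import math

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class ForearmSample:
    """Hypothetical container for one frame of arm data (not the SDK's Arm class)."""
    elbow: Vec3   # elbow position (assumed millimetres)
    wrist: Vec3   # wrist position (assumed millimetres)
    width: float  # forearm width (assumed millimetres)

    def length(self) -> float:
        """Distance from elbow to wrist."""
        return math.dist(self.elbow, self.wrist)

    def direction(self) -> Vec3:
        """Unit vector pointing from the elbow toward the wrist."""
        n = self.length()
        if n == 0.0:
            raise ValueError("elbow and wrist coincide; direction is undefined")
        return tuple((w - e) / n for e, w in zip(self.elbow, self.wrist))


# Example: a forearm pointing straight along the negative z axis.
arm = ForearmSample(elbow=(0.0, 0.0, 0.0), wrist=(0.0, 0.0, -250.0), width=60.0)
print(arm.length(), arm.direction())
```

A renderer could position a cylinder at the midpoint of `elbow` and `wrist`, orient it along `direction()`, and scale it by `length()` and `width`. In a real application the tracking API would supply these values itself rather than having you derive them.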

While the capability to track arms has been a part of our platform since the beginning, we previously excluded it from the tracking model because it wasn’t very reliable. With our improved beta tracking algorithms, arm tracking is now a viable option. Currently, the arm position is checked against the hand position, but in the future this will work both ways – hand to arm, and arm to hand. Ultimately, we expect that this will improve overall tracking confidence.
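The post does not describe how the arm is checked against the hand, so the following is only an illustrative guess at what a one-way consistency check could look like, not the tracking team's method. The function name `arm_matches_hand`, the choice to compare wrist positions, and the 30 mm tolerance are all assumptions made for this sketch.

```python
import math


def arm_matches_hand(arm_wrist, hand_wrist, tolerance_mm=30.0):
    """Return True if the arm's wrist end lies within tolerance of the hand's wrist.

    Hypothetical check: both arguments are (x, y, z) positions in the same
    coordinate frame, and tolerance_mm is an arbitrary illustrative threshold.
    """
    return math.dist(arm_wrist, hand_wrist) <= tolerance_mm


# An arm estimate whose wrist sits 10 mm from the hand's wrist is accepted;
# one that sits 100 mm away would be rejected as inconsistent.
print(arm_matches_hand((0.0, 0.0, 0.0), (0.0, 10.0, 0.0)))
print(arm_matches_hand((0.0, 0.0, 0.0), (0.0, 100.0, 0.0)))
```

A two-way version, as the post anticipates, would also let a confident arm estimate constrain the hand, rather than only validating the arm against the hand.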

Applications

Beyond VR aesthetics, we also created the Forearm API for developers who want to experiment with multi-modal input. Unlike other motion-tracking platforms, the Leap Motion Controller provides highly precise hand interactions at the submillimeter level. By combining arm tracking with full-body tracking technologies, developers could take advantage of gross and fine motor control.

What do you think?

The Forearm API is still a beta feature, and our tracking team would love to know what you think about it. Is this a useful feature for you? What new functions could we add to the API?

Hi, the precision looks good. I’d like to use it for VR interaction for assembly processes. Some open software interfaces…. (Leap VR).

Wish list:

– less freedom of hand movements (just: hand open, closed and two positions between – for a fast LEAP – VR comm.)

– closed hand as a signal for VR to grab a virtual part

– the hand should look like a hand (like the picture above – tessellated hand)

– higher stability (instead of disappearing – waiting for the next robust scan)

– I want to use it in ICIDO….

Best regards, Franz

July 9, 2014 at 1:16 am

Thanks for your feedback, Franz! Better stability and cleaner grabbing interactions are definitely on the roadmap 😀

July 9, 2014 at 7:50 pm

Coming to this post a bit late, but I’m interested in a bit of a bastardized use of Leap Motion: detecting only the width of an arm, without any hand in view. Is Leap capable of outputting arm width data when no hand is visible? The use case I’m looking at is measuring the diameter of an arm or leg for integration with other data we’re collecting visually.

Thanks!

February 4, 2015 at 11:20 am

Unfortunately, that functionality isn’t currently available with our tracking software.

February 5, 2015 at 3:21 pm