Have you ever received an MRI scan back from the lab and thought to yourself, “I’m not sure how even a medical professional could derive any insightful information from this blast of murky images?” You’re not alone. But what if, instead of settling for your doctor’s opaque interpretation, you could physically walk through your ailment with your doctor in VR, parsing and pointing out the nuances of pain felt within pieces of inflamed tendons or nerves or sections of your brain?

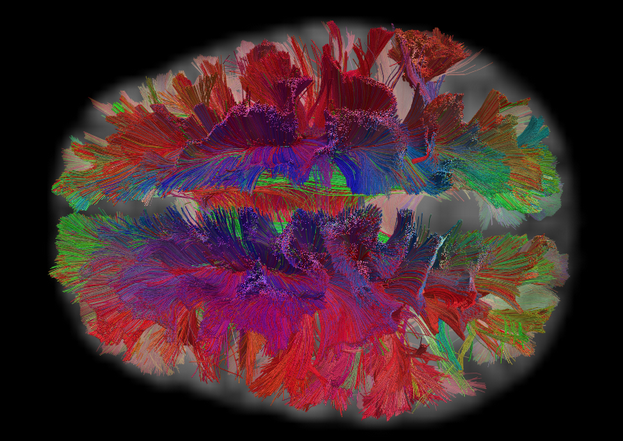

Brain Connectivity, a new example in the Developer Gallery, marks the beginning of a master’s thesis project from biomedical engineering student Filipe Rodrigues. The experiment uses slices of MRI scans and Multimodal Brain Connectivity Analysis to reconstruct a 3D model of the brain. Using tractography images, users can see how regions of the brain are connected to each other. From this information, a matrix of all the connected regions can be drawn.
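
To make the idea of a connectivity matrix a little more concrete, here’s a minimal sketch in Python. It assumes each tractography streamline has already been reduced to the pair of brain regions it links. The region names, the sample streamlines, and the function name `connectivity_matrix` are all hypothetical and chosen for illustration; this is not Filipe’s actual pipeline.

```python
def connectivity_matrix(regions, streamlines):
    """Count the streamlines linking each pair of regions.

    regions: list of region names (hypothetical labels).
    streamlines: list of (region_a, region_b) pairs, one per streamline.
    Returns a symmetric list-of-lists matrix of connection counts.
    """
    index = {name: i for i, name in enumerate(regions)}
    n = len(regions)
    matrix = [[0] * n for _ in range(n)]
    for a, b in streamlines:
        i, j = index[a], index[b]
        if i == j:
            continue  # skip streamlines that start and end in the same region
        # Connectivity is undirected here, so fill both halves of the matrix.
        matrix[i][j] += 1
        matrix[j][i] += 1
    return matrix


# Made-up example data, for illustration only.
regions = ["frontal", "parietal", "temporal", "occipital"]
streamlines = [
    ("frontal", "parietal"),
    ("frontal", "parietal"),
    ("parietal", "occipital"),
    ("temporal", "occipital"),
    ("frontal", "temporal"),
]

matrix = connectivity_matrix(regions, streamlines)
for name, row in zip(regions, matrix):
    print(f"{name:>10}", row)
```

Each cell counts how many streamlines join two regions, so a zero means no connection was found between them in this toy data.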

“Turning a boring 2D [MRI] visualization into a 3D interactive one is a great way of appealing to people who are maybe not from this discipline,” Filipe told us. “It’s a good way to visualize complex concepts and try to make them as appealing as possible. Leap Motion is a great tool for this.”

The project was primarily built in Unity, utilizing our widgets to cue interaction design. The brain model itself consists of Magnetic Resonance Images processed with Freesurfer. The connectivity graphs are computed from Diffusion Tensor Imaging tractography data processed with Diffusion Toolkit / Trackvis and Brain Connectivity Toolbox.

While the visualization portion of the project has proven to be an interesting challenge in and of itself, Filipe hopes to expand his thesis far beyond this demo. “In the beginning, I wanted to develop a haptic glove that I could use with Leap Motion in a virtual reality scenario, allowing me to feel the stuff I touched,” he explained. “For example, when I interrupt brain connectivity, I want to feel the vibrating on my fingers. That was the initial goal for my thesis. I’m still working on it, but I got too involved with the software part of it. I’m loving working with Leap Motion so I’ve postponed the glove component of the project a bit.”

Filipe does, however, have a working prototype. He removed vibration motors from two broken-down cell phones, glued them to a glove, and wired that to an Arduino. From there, he looped in Unity using a handy package called Uniduino. You never know when you’ll strike gold in the Asset Store.

In addition to haptics, Filipe and his team hope to take the project from a purely aesthetic place to a hardcore scientific one. The goal is that every time your hand interrupts a connection on the graph, information pops up about exactly what you’re interacting with. He’d also like to expand the interaction design to make the project as accessible as possible for the general user.

“Ideally,” Filipe said, “we’d like to turn it into two apps. One that’s more focused on the aesthetics and the interaction part of it, and one that’s more focused on the science behind it.”

Want to dig around the experience for yourself? Download it here in the Leap Motion Developer Gallery.
