This week at CES, Mercedes-Benz unveiled their latest concept car with technology plucked from the pages of science fiction – including one of our latest prototypes. Embedded into the console, Leap Motion’s Meadowhawk modules allow drivers to access an experimental natural user interface. Visitors at CES can also see Leap Motion technology featured in a demo by Hyundai, as well as a standalone demo that our team is bringing to the showcase. While these are early concept demos, we’re excited to drive the future of touchless interaction in 2015.

With the rise of more complex navigation, media, and climate controls, the amount of technology available in our cars has exploded over the past several years. But our ability to access these features is fundamentally limited by existing touchscreen interfaces.

This is why we believe that accurate and robust hand tracking will be essential to the next generation of automotive consoles. With Meadowhawk, your hand becomes the interface – quickly, intuitively, and (most importantly) unobtrusively. Instead of reaching for small buttons, you can control the console with hand motions anywhere within Meadowhawk’s range.

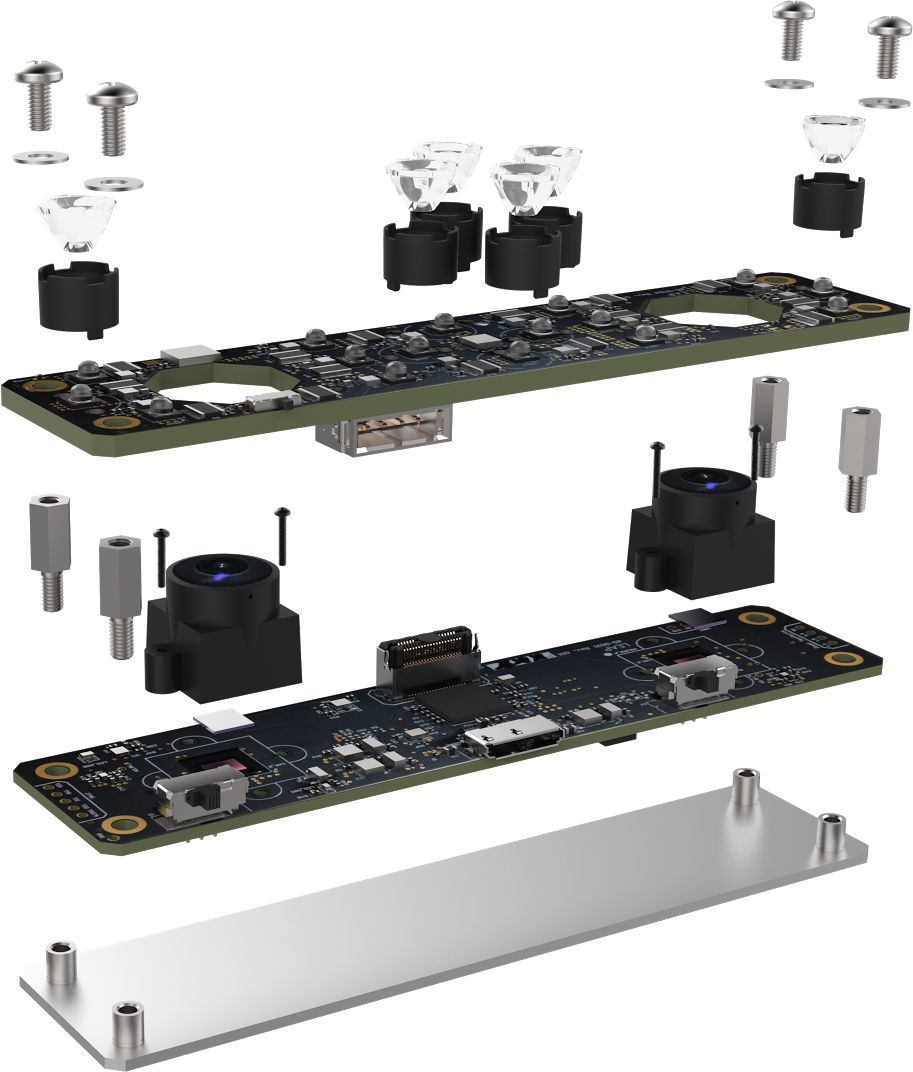

Designed to work in extreme lighting and higher temperature conditions, Meadowhawk is just one of several modules that we’ve been working on over the past year. The module features specialized 720p high-framerate image sensors and 16 high-powered infrared LEDs. Like its cousin Dragonfly, Meadowhawk is designed to be embedded by OEMs.

In the near term, Leap Motion interaction will be able to complement existing buttons and touchscreen controls with quick gestures for audio, navigation, and climate settings. Combined with audio cues and large visuals, this would give you the ability to perform specific tasks without taking your eyes off the road.

This is the idea behind the standalone demo that we’re showcasing this week at CES, which features a single Meadowhawk device along with an experimental user interface. By holding out five fingers and circling your wrist, you can cycle through a menu of options – phone, climate controls, directions, or music.

For example, to listen to your favorite artist, you can quickly rotate to music in the main menu, then show two fingers to bring up the music menu. Next, cycle through different artists, and switch to one finger when you find the one you want. Finally, you can cycle through their tracks, then push your finger forward a few inches to play a song.
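As a rough illustration, the menu flow described above can be modeled as a small state machine. This is a hypothetical sketch, not the code behind the demo: the `GestureMenu` class, the `"circle"` and `"push"` motion labels, and the sample music library are all assumptions made for the example, and a real system would derive the finger count and motion from the tracking data.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical menu contents and music library, for illustration only.
MAIN_MENU = ["phone", "climate", "directions", "music"]
LIBRARY = {"Artist A": ["Track 1", "Track 2"], "Artist B": ["Track 3"]}


@dataclass
class GestureMenu:
    level: str = "main"  # "main" -> "artists" -> "tracks"
    index: int = 0       # highlighted option at the current level
    artist: Optional[str] = None
    now_playing: Optional[str] = None

    def options(self) -> List[str]:
        if self.level == "main":
            return MAIN_MENU
        if self.level == "artists":
            return list(LIBRARY)
        return LIBRARY[self.artist]

    def current(self) -> str:
        return self.options()[self.index]

    def handle(self, fingers: int, motion: Optional[str] = None) -> None:
        """Apply one observation: extended-finger count plus an optional motion."""
        # Each level is navigated with its own hand pose (5, 2, or 1 fingers).
        pose = {"main": 5, "artists": 2, "tracks": 1}[self.level]
        if motion == "circle" and fingers == pose:
            # Circling cycles through the options, wrapping around at the end.
            self.index = (self.index + 1) % len(self.options())
        elif self.level == "main" and fingers == 2 and self.current() == "music":
            self.level, self.index = "artists", 0
        elif self.level == "artists" and fingers == 1:
            self.artist = self.current()
            self.level, self.index = "tracks", 0
        elif self.level == "tracks" and fingers == 1 and motion == "push":
            self.now_playing = self.current()


menu = GestureMenu()
for _ in range(3):
    menu.handle(5, "circle")  # rotate from phone to music
menu.handle(2)                # two fingers: open the music menu
menu.handle(2, "circle")      # cycle to the next artist
menu.handle(1)                # one finger: choose that artist
menu.handle(1, "push")        # push forward: play the highlighted track
print(menu.now_playing)
```

Tying each menu level to a distinct hand pose means a single circling motion can be reused at every level, which is one plausible reading of how the demo keeps the gesture vocabulary small.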

This is a very early concept, but it points toward a new way of thinking about how we can interact with our cars. For their demo, Mercedes has combined Leap Motion technology with eye tracking – allowing drivers to glance at UI elements (such as temperature, fan speed, or a zoomable map) and tap in the air to activate them. From there, drivers would be able to change settings by moving their hand left or right.

In the future, we envision a much more robust integration with the next generation of automotive interfaces – moving beyond small screens and towards interactive heads-up displays (HUDs) built into windshields. Using Leap Motion technology, drivers will be able to interact directly with the HUD without needing to touch the windshield or use cumbersome buttons or trackpads. With self-driving cars already on the horizon, drivers will soon be freed to multi-task and interact with their cars in much more complex ways. We believe that motion controls will play a crucial role as HUDs evolve to meet this growing complexity.

Working with our partners, our team has lots of work ahead, including further hardware development, UX prototyping, and user testing. Beneath it all, our tracking software continues to evolve and advance across multiple platforms – transforming how we interact with our computers, cars, mobile devices, and beyond in ways that are wonderful and profound.

“Like its cousin Dragonfly, Meadowhawk is designed to be embedded by OEMs.”

only OEMs??

January 7, 2015 at 12:16 am

Currently, yes – Meadowhawk is in the very early stages and we have no plans to make it a separate peripheral.

January 7, 2015 at 12:15 pm

fascinating news! looking forward to see what other inventive stuff Leap comes up with in the future.

January 13, 2015 at 9:56 am

Very cool!

January 15, 2015 at 3:49 pm

combining the leap with eye tracking would be the perfect complement for desk top users too.

is this something we can look forward to?

January 16, 2015 at 11:32 am