When you reach out and grab a virtual object or surface, there’s nothing stopping your physical hand in the real world. To make physical interactions in VR feel compelling and natural, we have to rethink some fundamental assumptions about how digital objects should behave. The Leap Motion Interaction Engine handles these scenarios by letting the virtual hand penetrate the geometry of the object or surface, which results in visual clipping.

With our recent interaction sprints, we’ve set out to identify areas of interaction that developers and users often encounter and to set specific design challenges around them. After prototyping possible solutions, we share our results to help you tackle similar challenges in your own projects.

For our latest sprint, we asked ourselves: how can penetration of virtual surfaces be made to feel more coherent and create a greater sense of presence through visual feedback?

To answer this question, we experimented with three approaches to the hand-object boundary – penetrating standard meshes, proximity to different interactive objects, and reactive affordances for unpredictable grabs. But first, a quick look at how the Interaction Engine handles object interactions.

Object Interactions in the Leap Motion Interaction Engine

Earlier we mentioned visual clipping, when your hand simply phases through an object. This kind of clipping always happens when we touch static surfaces like walls, since they don’t move when touched, but it also happens with interactive objects. Two core features of the Leap Motion Interaction Engine, soft contact and grabbing, almost always result in the user’s hand penetrating the geometry of the interaction object.

Similarly, when interacting with physically based UIs – such as our InteractionButtons, which depress in Z-space – fingertips still clip through the geometry a little bit, as the UI element reaches the end of its travel distance.

Now let’s see if we can make these intersections more interesting and intuitive!

Experiment #1: Intersection and Depth Highlights for Any Mesh Penetration

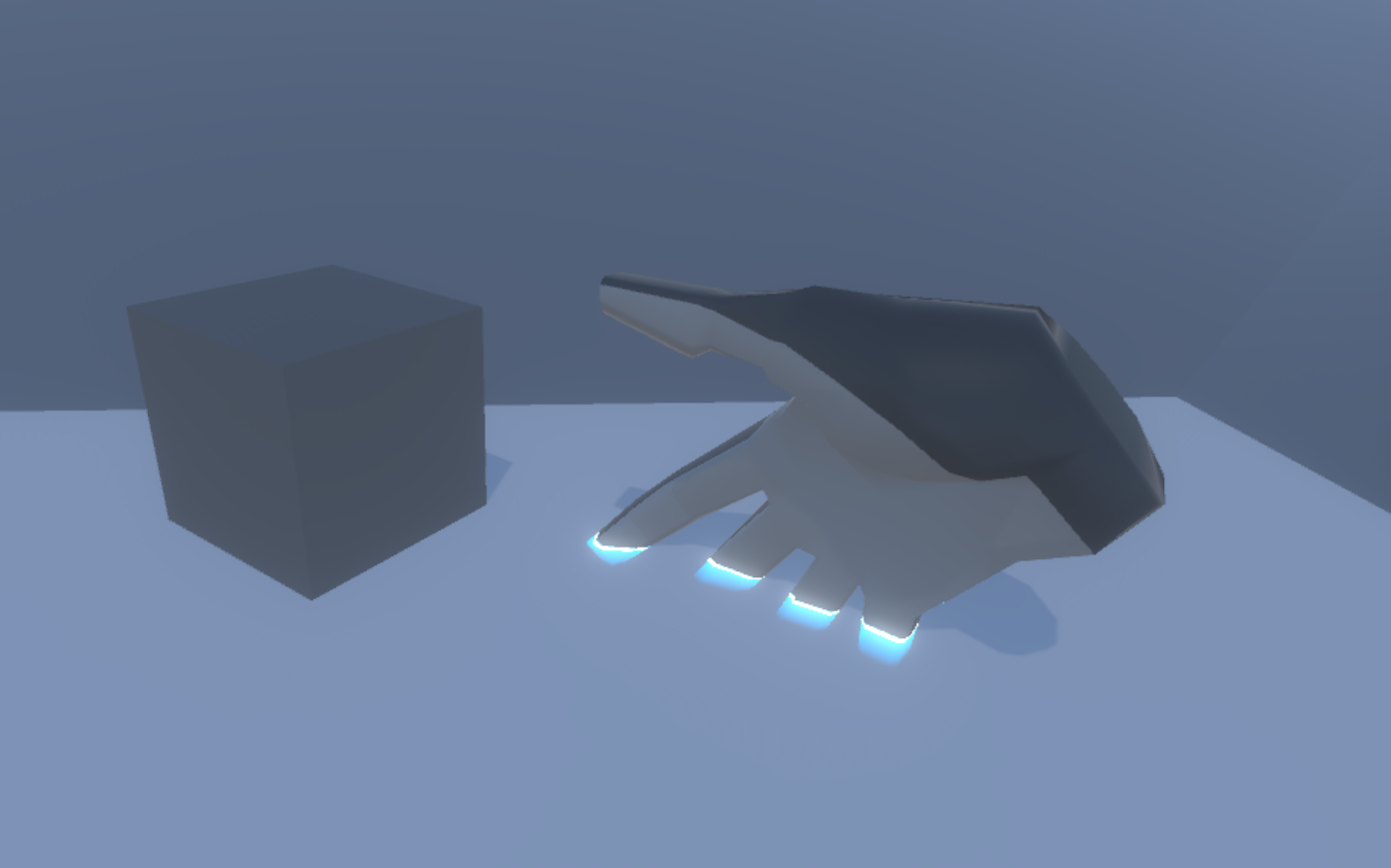

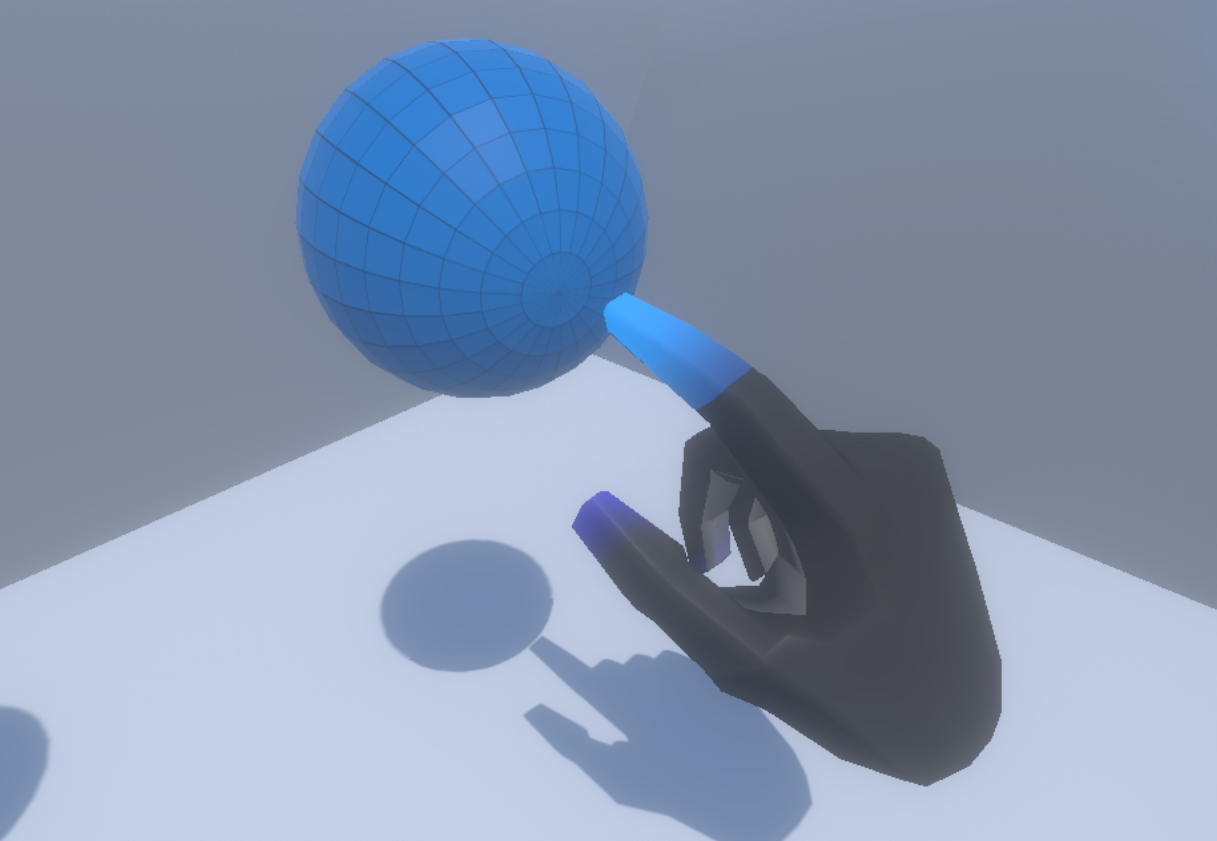

For our first experiment, we proposed that when a hand intersects some other mesh, the intersection should be visually acknowledged. A shallow portion of the occluded hand should still be visible, but it should change color and fade to transparency.

To achieve this, we applied a shader to the hand mesh. For each pixel on the hand, the shader checks how far that pixel is from the camera and compares this to the scene depth, as read from the camera’s depth texture. If the two values are relatively close, we make the pixel glow, and the closer they are, the stronger the glow.
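The per-pixel comparison can be sketched as a small function. This is a minimal Python sketch, not the actual shader. It assumes both depths are linear distances from the camera in meters and that the glow falls off linearly with the depth gap. The names `intersection_glow`, `glow_depth`, and `glow_strength` are hypothetical stand-ins for the shader's tunable parameters.

```python
def saturate(x: float) -> float:
    """Clamp x to [0, 1], like the saturate() intrinsic in shader languages."""
    return max(0.0, min(1.0, x))


def intersection_glow(hand_depth: float, scene_depth: float,
                      glow_depth: float = 0.02, glow_strength: float = 1.0) -> float:
    """Glow intensity for one hand pixel (all parameter names are hypothetical).

    hand_depth:  distance from the camera to this hand pixel.
    scene_depth: distance read from the camera's depth texture at the same pixel.
    glow_depth:  depth gap at which the glow fades out completely (must be > 0).
    """
    if glow_depth <= 0:
        raise ValueError("glow_depth must be positive")
    # How far apart the hand pixel and the scene surface are along the view ray.
    gap = abs(hand_depth - scene_depth)
    # 1.0 where the depths coincide, falling linearly (an assumption) to 0.0 at glow_depth.
    closeness = saturate(1.0 - gap / glow_depth)
    return glow_strength * closeness
```

Lowering `glow_depth` narrows the glowing band and lowering `glow_strength` dims it. Together they give the kind of minimal setting that seemed to work across a whole application.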

This execution felt really good across the board. With the glow strength and depth turned down to a minimum, it seemed like an effect that could be applied universally across an application without being overpowering.

Experiment #2: Fingertip Gradients for Proximity to Interactive Objects and UI Elements

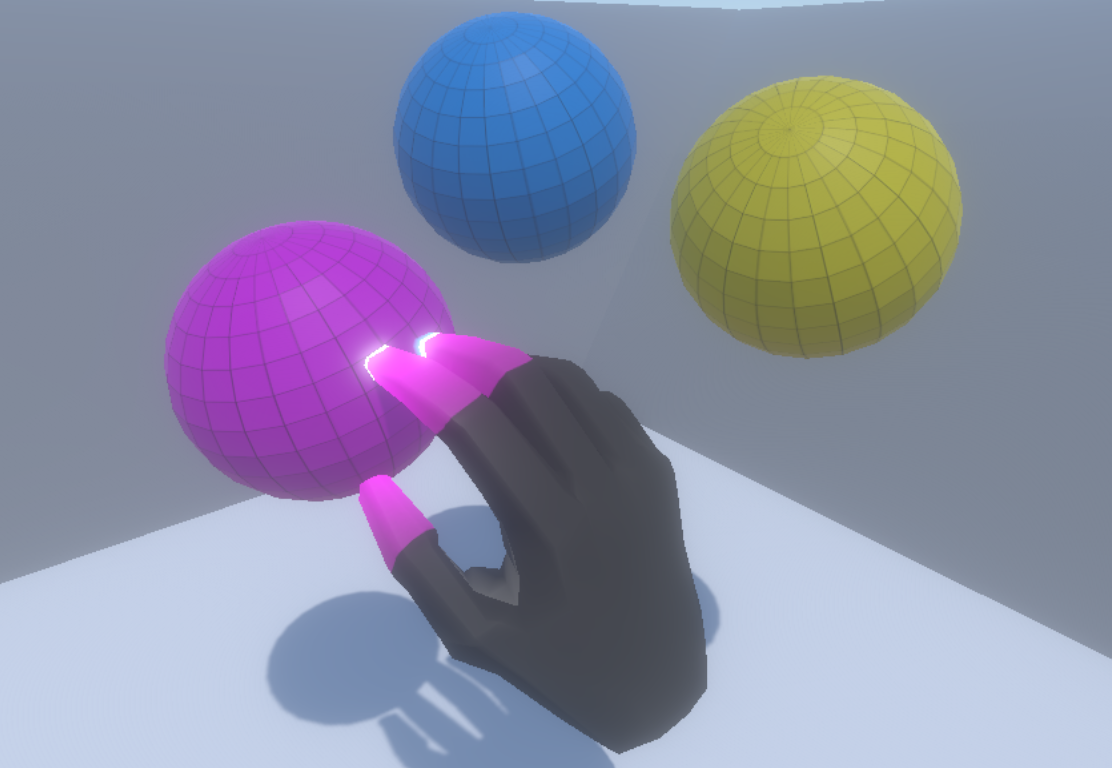

For our second experiment, we decided to make the fingertips change color to match an interactive object’s surface as they get closer to touching it. This might make it easier to judge the distance between fingertip and surface, and make users less likely to overshoot and penetrate the surface. Further, if they do penetrate the mesh, the intersection clipping will appear less abrupt, since the fingertip and surface will be the same color.

Using the Interaction Engine’s OnHover, whenever a hand hovers over an InteractionObject, we check the distance from each fingertip to that object’s surface. We then use this distance to drive a gradient change that affects each fingertip’s color individually.

In ShaderForge, a texture masks out the index finger, and a float variable driven by fingertip distance adds the Glow Color as a gradient to the Diffuse and Emission channels.
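The per-fingertip gradient can be sketched as follows. This Python sketch makes three assumptions:

- The InteractionObject is a sphere, so the distance to its surface has a closed form.
- A hypothetical `hover_range` sets the distance over which the gradient runs.
- Colors are RGB float triples.

`fingertip_colors` and the helper names are illustrative, not Interaction Engine API.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]


def distance_to_sphere_surface(point: Vec3, center: Vec3, radius: float) -> float:
    """Signed distance from point to the sphere surface (negative inside)."""
    return math.dist(point, center) - radius


def lerp_color(a: Color, b: Color, t: float) -> Color:
    """Linearly interpolate from color a (t = 0) to color b (t = 1)."""
    return (a[0] + (b[0] - a[0]) * t,
            a[1] + (b[1] - a[1]) * t,
            a[2] + (b[2] - a[2]) * t)


def fingertip_colors(tips: Dict[str, Vec3], center: Vec3, radius: float,
                     hand_color: Color, object_color: Color,
                     hover_range: float = 0.1) -> Dict[str, Color]:
    """Blend each fingertip toward the object's color as it nears the surface."""
    colors = {}
    for finger, tip in tips.items():
        # Clamp at zero so a penetrating fingertip simply keeps the object color.
        distance = max(0.0, distance_to_sphere_surface(tip, center, radius))
        # t is 0 at the edge of the hover range and 1 at contact.
        t = 1.0 - min(distance / hover_range, 1.0)
        colors[finger] = lerp_color(hand_color, object_color, t)
    return colors
```

Because each fingertip gets its own `t`, one finger can be nearly object-colored while another, still outside the hover range, keeps the hand color.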

This experiment definitely helped us judge the distance between our fingertips and interactive surfaces more accurately. In addition, it made it easier to know which object we were closest to touching. Combining this with the effects from Experiment #1 made the interactive stages (approach, contact, and grasp vs. intersect) even clearer.

Experiment #3: Reactive Affordances for Unpredictable Grabs

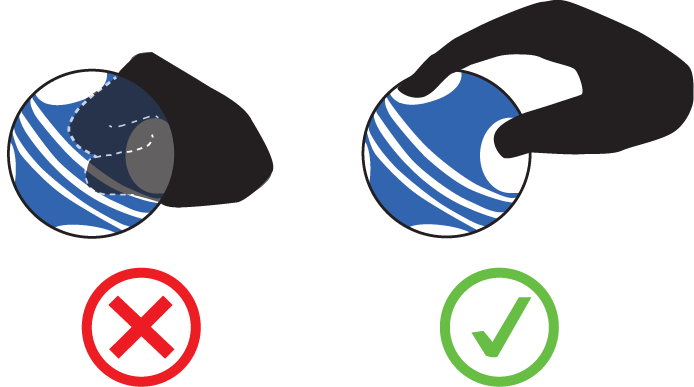

How do you grab a virtual object? You might make a fist around it, pinch it, or clasp it. Previously we’ve experimented with affordances, like handles or hand grips, hoping these would guide users in how to grasp objects.

While these affordances helped many people rediscover how to use their hands in VR, some users still ignore them and clip their fingers through the mesh. So we thought: what if, instead of modeling static affordances, we created reactive affordances that appeared dynamically wherever and however the user chose to grip an object?

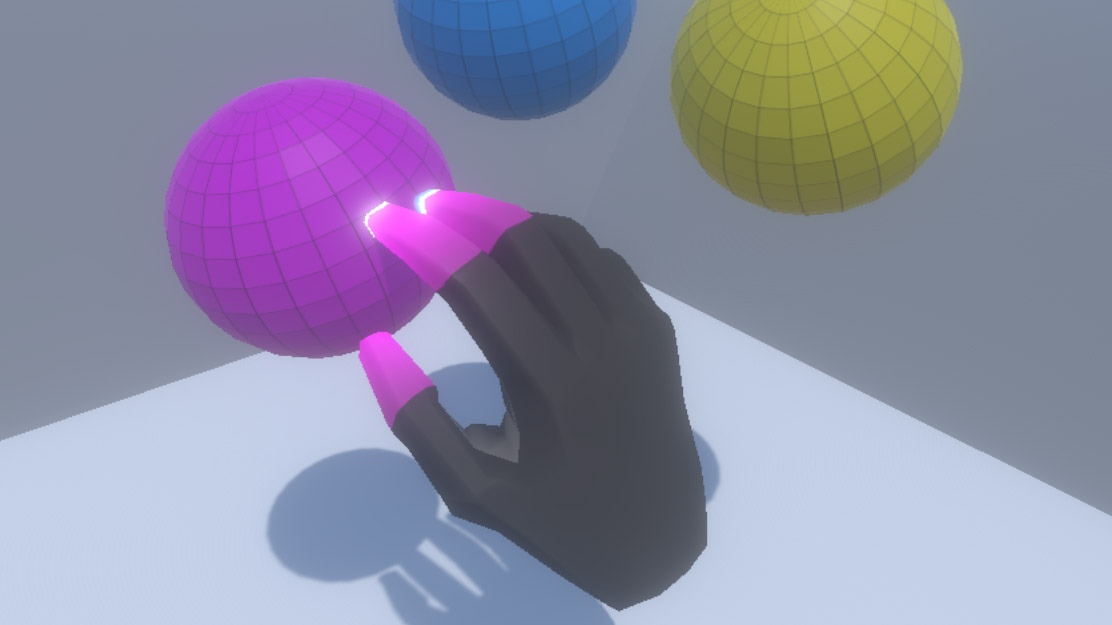

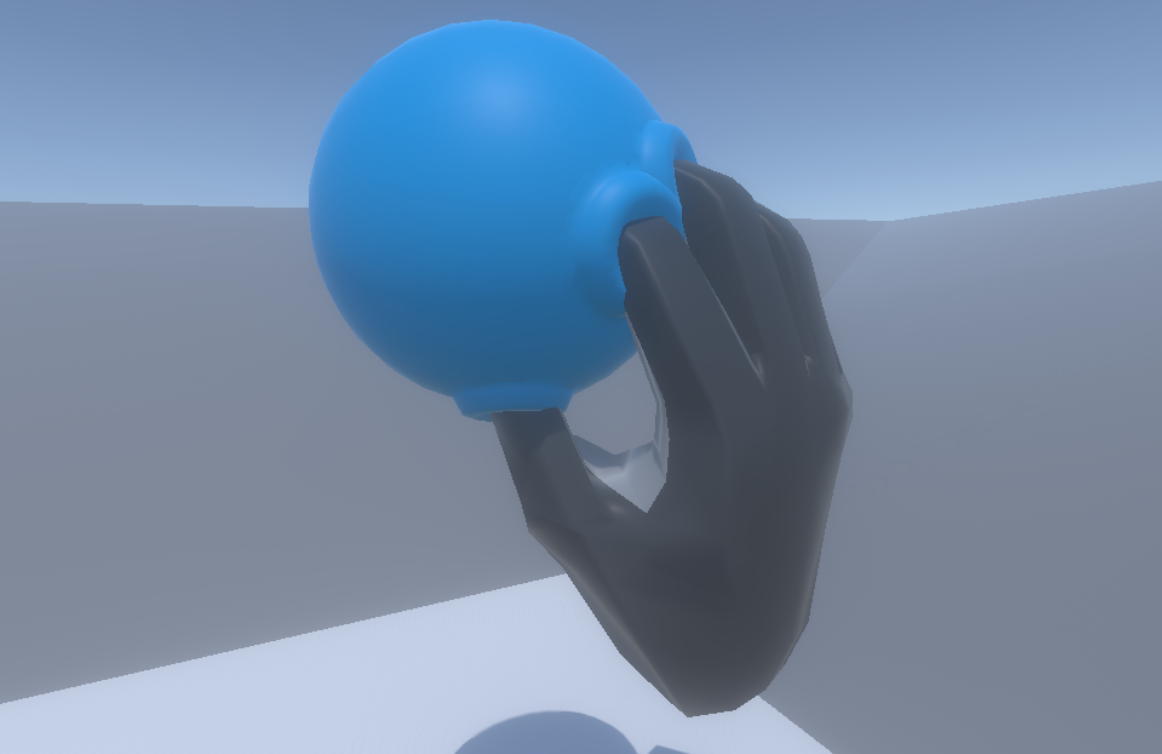

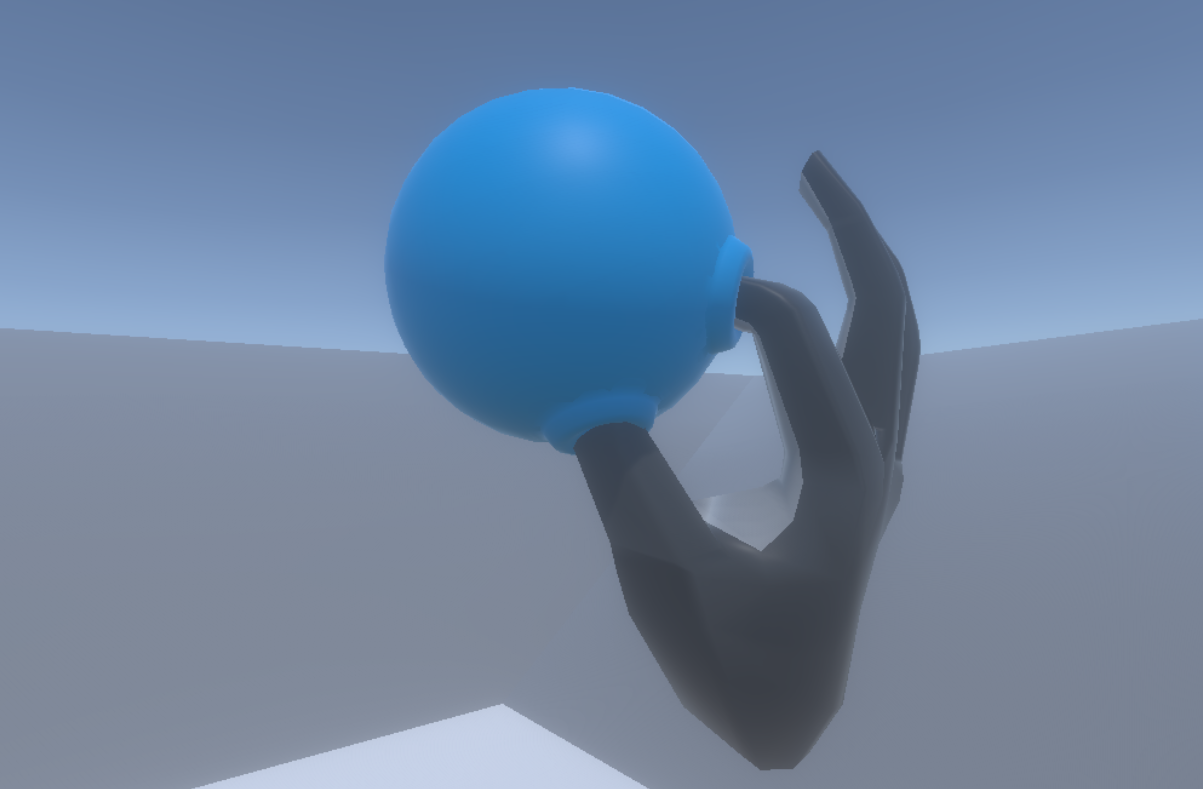

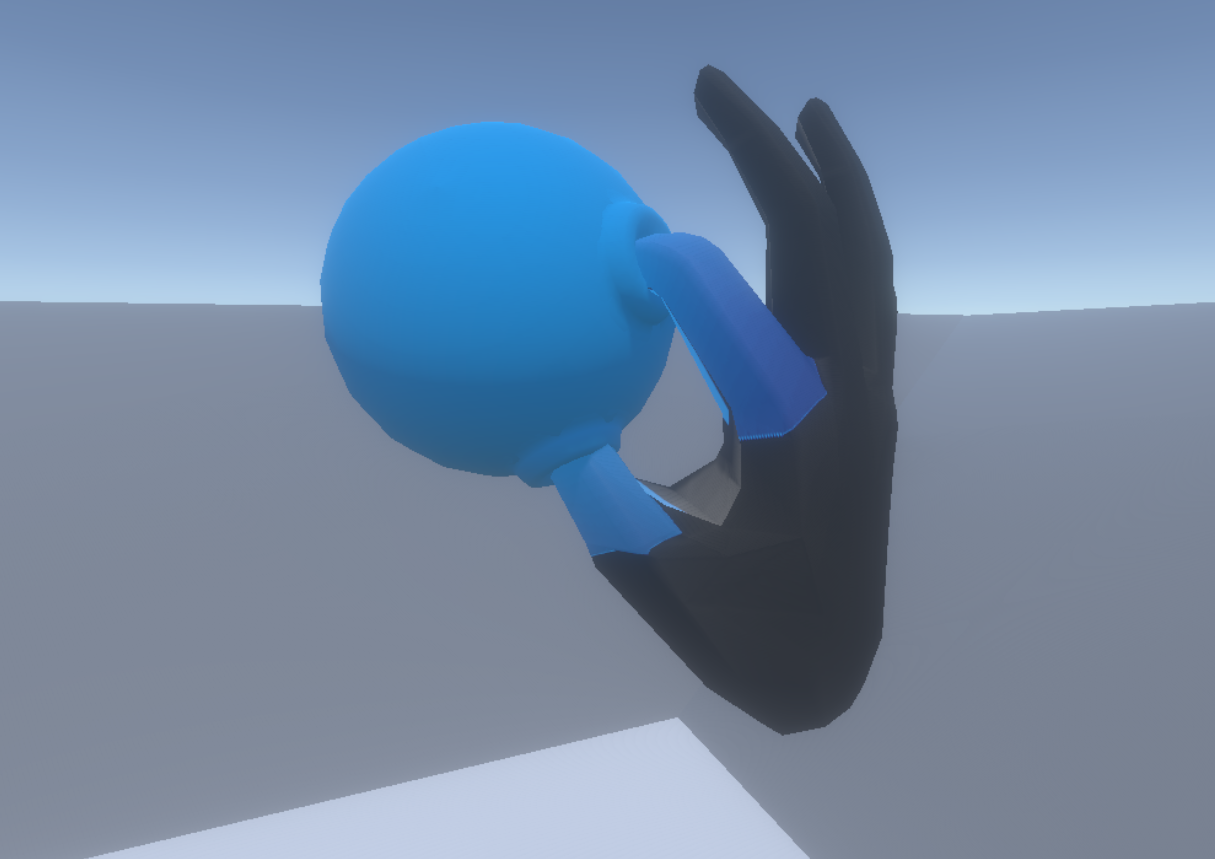

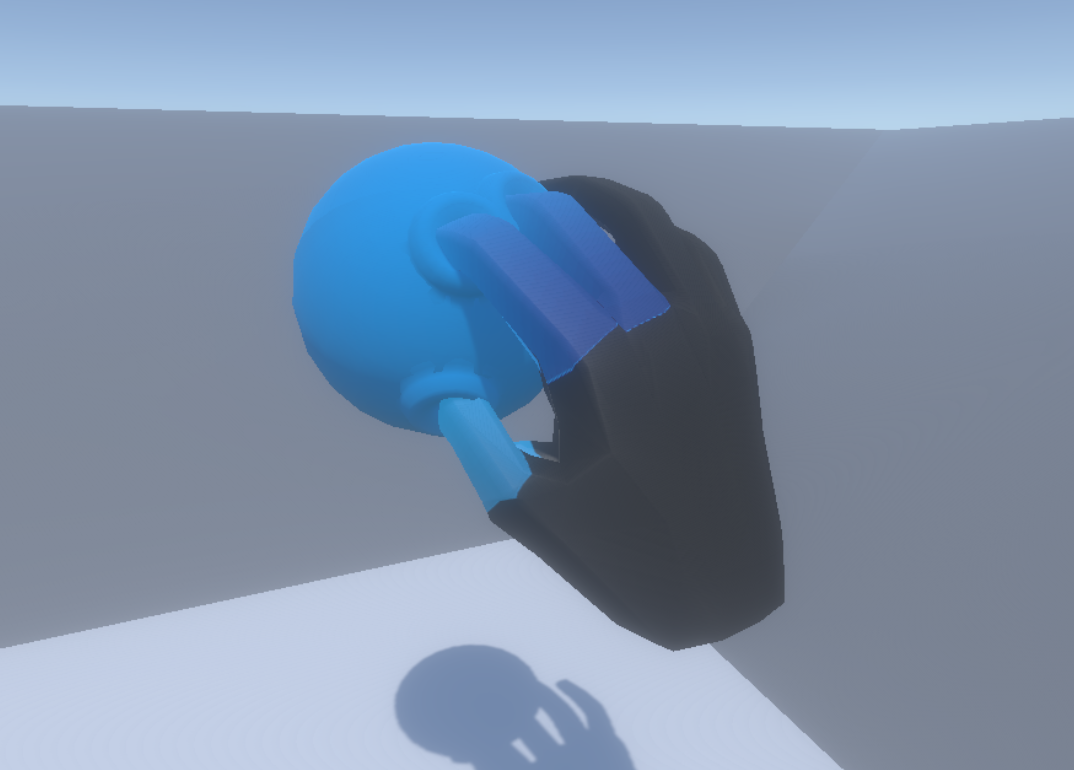

By raycasting through each joint on a per-finger basis and checking for hits on an InteractionObject, we spawn a dimple mesh at the raycast hit point. We align the dimple to the hit point normal and use the raycast hit distance – essentially how deep the finger is inside the object – to drive a blendshape which expands the dimple.
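The per-joint raycast and dimple placement can be sketched against an analytic sphere instead of a physics collider. This hedged Python sketch makes several assumptions:

- Each finger is given as a list of joint positions. Four joints give three rays for a finger, and three joints give two rays for the thumb.
- Each ray is cast from one joint toward the next, over the bone's length.
- The part of the bone beyond the hit point is treated as the penetration depth.

`finger_dimples`, `max_depth`, and the 0-to-1 `expansion` value that would drive the blendshape are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Hit:
    point: Vec3
    normal: Vec3
    distance: float  # distance along the ray from its origin to the hit point


def raycast_sphere(origin: Vec3, direction: Vec3, max_distance: float,
                   center: Vec3, radius: float) -> Optional[Hit]:
    """Nearest hit of a ray on a sphere's outer surface, or None.

    In this sketch, rays starting on or inside the sphere report no hit.
    """
    d = scale(direction, 1.0 / math.sqrt(dot(direction, direction)))
    oc = sub(origin, center)
    c = dot(oc, oc) - radius * radius
    if c <= 0.0:
        return None
    b = dot(oc, d)
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray's line misses the sphere
    t = -b - math.sqrt(disc)  # nearer of the two intersections
    if t < 0.0 or t > max_distance:
        return None  # sphere is behind the origin or beyond this bone
    point = add(origin, scale(d, t))
    normal = scale(sub(point, center), 1.0 / radius)
    return Hit(point, normal, t)


@dataclass
class Dimple:
    position: Vec3
    normal: Vec3
    expansion: float  # 0..1, would drive the dimple's blendshape weight


def finger_dimples(joints: List[Vec3], center: Vec3, radius: float,
                   max_depth: float = 0.1) -> List[Dimple]:
    """Cast one ray per bone (joint to next joint) and place a dimple per hit."""
    dimples = []
    for start, end in zip(joints, joints[1:]):
        bone = sub(end, start)
        length = math.sqrt(dot(bone, bone))
        if length == 0.0:
            continue  # degenerate bone, nothing to cast
        hit = raycast_sphere(start, bone, length, center, radius)
        if hit is None:
            continue
        depth = length - hit.distance  # how far this bone reaches past the surface
        dimples.append(Dimple(hit.point, hit.normal, min(depth / max_depth, 1.0)))
    return dimples
```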

Three raycasts per finger (and two for the thumb) check for hits on the sphere’s collider.

Bloop! By moving the dimple mesh to the hit point position and rotating it to align with the hit point normal, the dimple correctly follows the finger wherever it intersects the sphere.
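Aligning the dimple to the hit normal amounts to finding the rotation that carries the dimple mesh's own outward axis onto the surface normal. This is a minimal Python sketch with two hypothetical conventions: the dimple mesh is modeled with its opening facing local +Y, and rotations are unit quaternions stored as (w, x, y, z). `from_to_rotation`, `rotate`, and `place_dimple` are illustrative names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def from_to_rotation(src: Vec3, dst: Vec3) -> Quat:
    """Shortest-arc unit quaternion rotating direction src onto direction dst."""
    s, d = normalize(src), normalize(dst)
    c = dot(s, d)
    if c < -1.0 + 1e-9:
        # Opposite directions: turn 180 degrees about any axis perpendicular to src.
        axis = cross(s, (1.0, 0.0, 0.0))
        if dot(axis, axis) < 1e-12:
            axis = cross(s, (0.0, 1.0, 0.0))
        x, y, z = normalize(axis)
        return (0.0, x, y, z)
    # (1 + cos a, sin a * axis) is proportional to (cos(a/2), sin(a/2) * axis).
    x, y, z = cross(s, d)
    w = 1.0 + c
    n = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / n, x / n, y / n, z / n)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q: v + 2w(u x v) + 2u x (u x v)."""
    w, u = q[0], (q[1], q[2], q[3])
    uv = cross(u, v)
    uuv = cross(u, uv)
    return (v[0] + 2.0 * (w * uv[0] + uuv[0]),
            v[1] + 2.0 * (w * uv[1] + uuv[1]),
            v[2] + 2.0 * (w * uv[2] + uuv[2]))


DIMPLE_LOCAL_UP: Vec3 = (0.0, 1.0, 0.0)  # hypothetical modeling convention


def place_dimple(hit_point: Vec3, hit_normal: Vec3) -> Tuple[Vec3, Quat]:
    """Position and orientation that make a dimple sit flush on the surface."""
    return hit_point, from_to_rotation(DIMPLE_LOCAL_UP, hit_normal)
```

Re-running `place_dimple` every frame with the latest hit is what lets the dimple follow the finger around the sphere.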

In a variation on this concept, we tried adding a fingertip color gradient. This time, instead of being driven by proximity to an object, the gradient was driven by the finger depth inside the object.

Pushing this concept of reactive affordances even further, we wondered: what if, instead of deforming in response to hand or finger penetration, the object could anticipate your hand and carve out finger holds before you even touched the surface?

Basically, we wanted to create virtual ACME holes.

To do this, we increased the length of the fingertip raycast so that a hit would register well before your finger made contact with the surface. Then we spawned a two-part prefab composed of (1) a round hole mesh and (2) a cylinder mesh with a depth mask, which stops any pixels behind it from being rendered.
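Lengthening the fingertip raycast turns it into a look-ahead probe: the hole is placed as soon as the surface lies within some distance in front of the fingertip, before any contact. This is a hedged Python sketch against an analytic sphere. The following are illustrative assumptions, not details from the original project:

- The function name `anticipate_hole`.
- The `lookahead` distance.
- Casting the ray along the fingertip's pointing direction.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def anticipate_hole(tip: Vec3, pointing: Vec3, center: Vec3, radius: float,
                    lookahead: float = 0.05) -> Optional[Tuple[Vec3, Vec3]]:
    """Return (hole_position, hole_normal) if the sphere surface lies within
    `lookahead` of the fingertip along its pointing direction, else None."""
    n = math.sqrt(sum(p * p for p in pointing))
    d = tuple(p / n for p in pointing)
    oc = tuple(t - c for t, c in zip(tip, center))
    c = sum(x * x for x in oc) - radius * radius
    if c <= 0.0:
        return None  # this sketch only handles fingertips still outside the sphere
    b = sum(x * y for x, y in zip(oc, d))
    disc = b * b - c
    if disc < 0.0:
        return None  # pointing past the sphere
    t = -b - math.sqrt(disc)  # distance to the nearer surface crossing
    if t < 0.0 or t > lookahead:
        return None  # surface is behind the finger or still too far away
    hit = tuple(o + dd * t for o, dd in zip(tip, d))
    normal = tuple((h - cc) / radius for h, cc in zip(hit, center))
    return hit, normal
```

A longer `lookahead` makes the hole open earlier. The returned position and normal are where the hole mesh and its depth-mask cylinder would be moved and aligned.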

By setting up the layers so that the depth mask won’t render the InteractionObject’s mesh, but will render the user’s hand mesh, we create the illusion of a moveable ACME-style hole in the InteractionObject.
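The layer trick can be reasoned about with a deliberately simplified per-pixel model. This Python sketch is not how a GPU or any particular engine implements depth masks or layers, and it ignores the hole's rim mesh. It captures only the rule described above:

- Where the cylinder mask covers a pixel, fragments on masked layers (the InteractionObject) that lie behind the mask are dropped.
- Fragments on unmasked layers (the hand) are unaffected.

The layer names and `shade_pixel` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class Fragment:
    layer: str
    depth: float  # distance from the camera; smaller is nearer
    color: str


def shade_pixel(fragments: Iterable[Fragment], mask_depth: Optional[float],
                masked_layers: Set[str]) -> Optional[str]:
    """Color of the nearest surviving fragment at one pixel, or None if none survive.

    mask_depth is the depth of the cylinder mask at this pixel, or None where
    the mask does not cover the pixel.
    """
    survivors = [
        f for f in fragments
        # Masked layers behind the mask are hidden, as if they failed a depth test.
        if not (mask_depth is not None and f.layer in masked_layers and f.depth > mask_depth)
    ]
    if not survivors:
        return None
    return min(survivors, key=lambda f: f.depth).color


# A fingertip 5 cm inside the sphere, seen through a hole whose mask starts
# just in front of the sphere's surface.
pixel = [Fragment("interaction_object", 1.00, "sphere"), Fragment("hand", 1.05, "finger")]
print(shade_pixel(pixel, None, {"interaction_object"}))  # prints "sphere" (no hole here)
print(shade_pixel(pixel, 0.99, {"interaction_object"}))  # prints "finger" (inside the hole)
```

If the hand's layer were also masked, the pixel inside the hole would show nothing. This is why the layer setup has to exclude the hand from the mask.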

These effects made grabbing an object feel much more coherent, as though our fingers were being invited to intersect the mesh. Clearly, this approach would need a more complex system to handle objects other than spheres, parts of the hand other than the fingers, and merging ACME holes when fingers get very close to each other. Nonetheless, the concept of reactive affordances holds promise for resolving unpredictable grabs.

Hand-centric design for VR is a vast possibility space – from truly 3D user interfaces to virtual object manipulation to locomotion and beyond. As creators, we all have the opportunity to combine the best parts of familiar physical metaphors with the unbounded potential offered by the digital world. Next time, we’ll really bend the laws of physics with the power to magically summon objects at a distance!

An abridged version of this post was originally published on RoadtoVR. A Chinese version is also available.