The frustrating thing about raw Twitter data is that it tends to strip away the very element that makes the platform so interesting in the first place: the nuance of human sentiment. But what if you could take that data back into your own two hands and set it to music?

What began as a project exploring the relationship between architecture and sound at the University of Architecture in Venice, Italy, morphed into something interactive when Electronic Music major Amerigo Piana took the reins. To complete his thesis at the Music Conservatory of Vicenza, he decided to bring Leap Motion technology, sound spatialization, and social data together under one dome.

“I love playing with audio, often in the digital domain, from sound design to experimental electronic productions,” Amerigo told us. “I’m co-founder of a video-mapping firm called ZebraMapping, where we provide stage design, structures, and real-time video. I enjoy mixing different media together, controlled and driven by humans.”

Amerigo had followed Leap Motion since before we launched, intrigued by the possibility of integrating organic 3D motion control into a sound spatialization project. As he began to build, he discovered the power and creativity involved in interaction design, ranging from small, natural hand movements to complex gestures newly imbued with meaning.

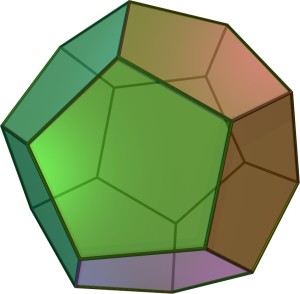

One of the five Platonic solids, the dodecahedron is formed from 12 pentagons.

“I wanted to create an immersive situation to intrigue and charm people, letting the public play with sound spatialization using the Leap Motion Controller. I chose a dodecahedron because its number of usable faces, 10 (excluding the floor and the entrance), matches the maximum number of analog outputs on the audio card.

“The 10 surfaces host the exciters. Pressed against the wooden surfaces, the exciters work like speakers, making the wood vibrate. The wood acts like a bandpass filter that gives the sound a particular timbre: no high frequencies, no low frequencies. The structure is not a mere sound system, but a specific instrument with its own sounding board. Just as a violin has its own particular sound, Dodekaedros has a characteristic range of frequencies and timbre.”

From afar, the user sees a geometric structure with red light spilling out. The spinning soundscapes are also audible from the outside. Once inside the dome, the user is prompted to wave their hand over the Leap Motion Controller. While the user’s right hand controls the sound position and LEDs, their left hand modifies the sound synthesis parameters.

The environment is controlled by a MaxMSP program that Amerigo developed. It generates sound, manages spatialization, and reads Leap Motion data, remapping it to sound synthesis parameters and LED lighting. The Twitter queries are written in Ruby, and their results are passed to MaxMSP, which handles speech synthesis. When a specific set of hashtags is posted on Twitter, the Ruby query picks it up and the structure responds to the user with spoken feedback.
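The article doesn't show Amerigo's MaxMSP patch, so what follows is only a hypothetical sketch, in Python, of the kind of remapping described above: turning the right hand's palm position into gains for the 10 exciters. It assumes the structure rests on one face, that the entrance is one of the lower faces, and a made-up center point above the controller. The names `palm_to_gains`, `exciter_directions`, `CENTER_MM`, and `sharpness` are illustrative, and the panning law (each face weighted by how closely it points toward the hand, normalized to constant power) is a simple stand-in, not necessarily what Dodekaedros uses.

```python
import math

# Face normals of a regular dodecahedron point at the vertices of an icosahedron:
# two poles plus two rings of five at elevation +/- atan(1/2) (about 26.57 degrees),
# with the lower ring rotated 36 degrees relative to the upper one.
RING_ELEVATION = math.atan(0.5)

# Hypothetical center of the interaction volume, in millimeters. Leap Motion reports
# palm positions in mm relative to the controller, with +y pointing up.
CENTER_MM = (0.0, 250.0, 0.0)


def dodecahedron_face_normals():
    """Unit face normals (x, y, z) for a dodecahedron resting on one face, y up."""
    normals = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]  # top face, floor face
    c, s = math.cos(RING_ELEVATION), math.sin(RING_ELEVATION)
    for k in range(5):
        up = math.radians(72 * k)
        down = math.radians(72 * k + 36)
        normals.append((c * math.cos(up), s, c * math.sin(up)))        # upper ring
        normals.append((c * math.cos(down), -s, c * math.sin(down)))   # lower ring
    return normals


def exciter_directions():
    """The 10 faces carrying exciters: drop the floor and one lower face (the entrance).

    Which lower face is the entrance is an assumption made for this sketch.
    """
    floor, entrance = 1, 3  # indices into dodecahedron_face_normals()
    return [n for i, n in enumerate(dodecahedron_face_normals())
            if i not in (floor, entrance)]


def palm_to_gains(palm_mm, sharpness=2.0):
    """Map a right-hand palm position (mm) to 10 constant-power exciter gains.

    Each exciter is weighted by how closely its face points along the direction
    from CENTER_MM to the palm; faces pointing away from the hand get zero.
    """
    speakers = exciter_directions()
    even = [1.0 / math.sqrt(len(speakers))] * len(speakers)
    offset = [p - c for p, c in zip(palm_mm, CENTER_MM)]
    length = math.sqrt(sum(v * v for v in offset))
    if length == 0.0:
        return even  # hand exactly at the center: spread the sound evenly
    d = [v / length for v in offset]
    raw = [max(0.0, sum(a * b for a, b in zip(d, n))) ** sharpness for n in speakers]
    norm = math.sqrt(sum(g * g for g in raw))
    if norm == 0.0:
        return even
    # Normalize so the squared gains sum to 1 (constant total power).
    return [g / norm for g in raw]


if __name__ == "__main__":
    # Hand held straight above the center: the top face should dominate.
    gains = palm_to_gains((0.0, 400.0, 0.0))
    print([round(g, 3) for g in gains])
```

Raising `sharpness` focuses the sound on the faces nearest the hand, while lowering it spreads the sound across more of the structure. The same per-face weights could, in principle, also drive the LEDs on each face, though that pairing is a design choice of this sketch rather than something the article describes.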

Ultimately, Amerigo hopes that people walk away from Dodekaedros with a fresh perspective on our rapidly converging digital and physical universes. “The field of interaction and the implementation of the Internet of Things create a personal communication channel between machines and humans.”