There’s something magical about building in VR. Imagine being able to assemble weightless car engines, arrange dynamic virtual workspaces, or create imaginary castles with infinite bricks. Arranging or assembling virtual objects is a common scenario across a range of experiences, particularly in education, enterprise, and industrial training – not to mention tabletop and real-time strategy […]

// interaction engine

One of the core design philosophies at Leap Motion is that the most intuitive and natural interactions are direct and physical. Manipulating objects with our bare hands lets us leverage a lifetime of physical experience, minimizing the learning curve for users. But there are times when virtual objects will be farther away than arm’s reach, […]

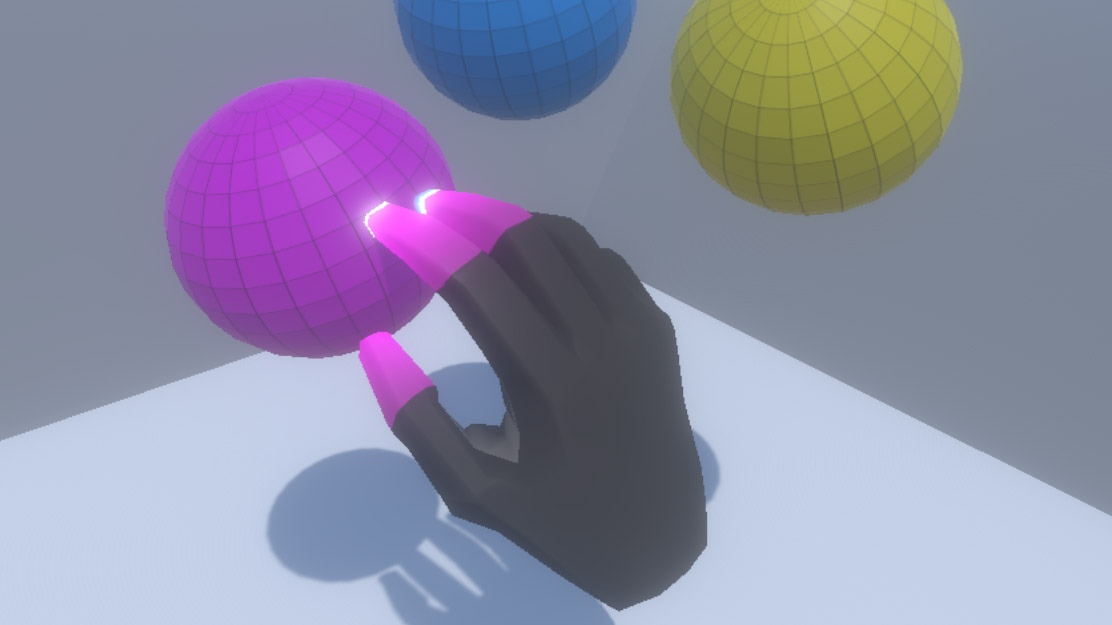

When you reach out and grab a virtual object or surface, there’s nothing stopping your physical hand in the real world. To make physical interactions in VR feel compelling and natural, we have to play with some fundamental assumptions about how digital objects should behave. The Leap Motion Interaction Engine handles these scenarios by having […]

As mainstream VR/AR input continues to evolve – from the early days of gaze-only input to wand-style controllers and fully articulated hand tracking – so too do the virtual user interfaces we interact with. Slowly but surely, we’re moving beyond flat UIs ported over from 2D screens and toward a future filled with spatial interface paradigms that take advantage of depth and volume.

Last time, we looked at how an interactive VR sculpture could be created with the Leap Motion Graphic Renderer as part of an experiment in interaction design. With the sculpture’s shapes rendering, we can now craft and code the layout and control of this 3D shape pool and the reactive behaviors of the individual objects.

By adding the Interaction Engine to our scene and InteractionBehavior components to each object, we have the basis for grasping, touching and other interactions. But for our VR sculpture, we can also use the Interaction Engine’s robust and performant awareness of hand proximity. With this foundation, we can experiment quickly with different reactions to hand presence, pinching, and touching specific objects. Let’s dive in!

With the next generation of mobile VR/AR experiences on the horizon, our team is constantly pushing the boundaries of our VR UX developer toolkit. Recently we created a quick VR sculpture prototype that combines the latest and greatest of these tools.

The Leap Motion Interaction Engine lets developers give their virtual objects the ability to be picked up, thrown, nudged, swatted, smooshed, or poked. With our new Graphic Renderer Module, you also have access to weapons-grade performance optimizations for power-hungry desktop VR and power-ravenous mobile VR.

In this post, we’ll walk through a small project built using these tools. This will provide a technical and workflow overview as one example of what’s possible – plus some VR UX design exploration and performance optimizations along the way.

As humans, we are spatial, physical thinkers. From birth we grow to understand the objects around us by the rules that govern how they move, and how we move them. These rules are so fundamental that we design our digital realities to reflect human expectations about how things work in the real world. At Leap […]

Explorations in VR Design is a journey through the bleeding edge of VR design – from architecting a space, to designing groundbreaking interactions, to making users feel powerful. Virtual reality is a world of specters, filled with sights and sounds that offer no physical resistance when you reach towards them. This means that any interactive […]

Update (6/8/17): Interaction Engine 1.0 is here! Read more in our release announcement: blog.leapmotion.com/interaction-engine

Game physics engines were never designed for human hands. In fact, when you bring your hands into VR, the results can be dramatic. Grabbing an object in your hand or squishing it against the floor, you send it flying as the physics […]

In the physical world, our hands exert force on other objects. When we grasp an object, we apply force through our fingers and palms (using precision, power, or scissor grips), and the object pushes back. Gravity can also help us hold items in the palms of our hands.

But not in the digital world! In the frictionless space of the virtual, anything can happen. Gravity is optional. Magic is feasible.