The Leap Motion Controller offers an expansive landscape of new possibilities for user control of computer applications, and in particular, games. Who didn’t, as a kid, run around with their thumb-and-index “gun” drawn, playing cops and robbers, shooting each other with the unmistakeable “bang, bang, you’re dead” gesture – relying on the unwritten rules of the playground to enforce pretend injury or death?

Those were simpler times. Fortunately, the simplicity of the shooting gesture long-ingrained from childhood can be used as a control mechanism for games (and maybe even more – OS clicking gesture, anyone?). Nostalgic violence can now be augmented through computers!

Now to launch into math-textbook-author mode. Put on your thinking hats and chug the rest of your coffee!

What is a Gesture?

A simple definition, used here, is a motion of a human fingertip whose path has a definite geometric shape or property, well-defined enough to be recognizable to an observer, be it human or computer. A couple of examples of gestures relevant to the Leap Motion Controller are circles (moving a fingertip through a sufficient amount of a circular arc) and swipes (moving a fingertip along a sufficiently long and straight path).

From the human or computer perspective, it’s necessary to have seen enough of the gesture elapse before recognition is possible. In other words, it’s necessary to retain a small amount of historical data in order to perform the recognition. In this post, I’ll describe a simple “timed history” data structure, which handles this in code.

This post will also discuss a couple of “pistol shooting” gestures (imagine kids playing cops and robbers, mimicking the use of guns with thumbs-up, index-finger-barrel “guns”). These could be used in a game setting in which single-shot weapons’ aiming and firing are controlled directly by gesture.

Before we move forward, the “pistol shooting” gesture needs to be defined in a reasonably mathematically rigorous way. Depending on the desired control scheme and interaction accuracy/complexity, different definitions could be used. Two definitions will be explored here:

- pistol “leveling”

- pistol “aiming/kickback”

Pistol “Leveling” Scheme

In this scheme, the gun is considered to fire when it is “leveled”, meaning the moment the index finger direction becomes parallel to the x-z plane. The firing position is simply the fingertip position, and the firing direction is the projection of the index finger direction onto the x-z plane. (Recall the Leap Controller convention: the positive x and y axes point rightward and upward on the screen, and the positive z axis points out of the screen, toward the user.) This could be used to simulate a quick-draw pistol duel, where the gun has to be brought up from the holster.
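As a minimal sketch of the projection step, assuming directions are handled as plain (x, y, z) tuples rather than Leap vector objects (the function name `level_firing_direction` is hypothetical and not part of the Leap API):

```python
import math

def level_firing_direction(direction):
    """Project a finger direction onto the x-z plane and normalize it.

    `direction` is an (x, y, z) tuple in the Leap coordinate convention.
    Returns None when the finger points straight up or down, because the
    projection is then the zero vector and has no direction.
    """
    x, _, z = direction
    length = math.hypot(x, z)
    if length == 0.0:
        return None
    return (x / length, 0.0, z / length)
```

For example, a finger pointing mostly into the screen and tilted slightly upward, such as `(0.0, 0.5, -2.0)`, projects to the unit vector pointing straight into the screen.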

The advantage of this method is simpler control over aiming (the gun always fires parallel to the x-z plane). The main disadvantage is that the user’s finger direction may not pass through horizontal (parallel to the x-z plane) without explicit effort, such as tipping the index finger past the point where the motion would naturally end, so some adaptation may be necessary on the user’s part.

Pistol “Aiming/Kickback” Scheme

Here, firing will be caused by the transition from an initial stillness (aiming the gun) to an upward motion (mimicking the kickback of the weapon). Once the gesture is recognized, the firing position and direction must then be determined by looking at the history – the position and direction of the index finger during the “stillness” phase will be used.

The advantages to this scheme are:

- the firing direction is not limited,

- the gesture is very natural for the user, and

- it will likely allow much higher aiming accuracy.

The disadvantage is that the recognition is somewhat more involved, and may require tuning some of the parameters in order to optimize its performance.

TimedHistory Data Structure

A simple extension of a deque (“double-ended queue”) data structure can be used to store the history of data required to do gesture recognition. For the gestures discussed here, the “data” is the index finger’s tip position and direction. The design criteria for this structure are as follows.

- The structure will hold at least a prespecified amount of history (e.g. based on frame timestamp).

- Data will be prepended to the deque as it is accumulated, while the end of the deque will be trimmed so that the deque holds no more frames than are needed to cover the guaranteed amount of history.

- There must be a way to retrieve data that is “closest to X time-units old” (or “at least/most X time-units old”).

The data should be added to the TimedHistory object as it is received, and then age-based queries can be made. Note that there is no particular upper limit on the number of data frames that must be stored to guarantee that a particular amount of history is available. If this is an issue, an additional design criterion could impose an upper bound on the number of frames, but then the duration of history can no longer be guaranteed. The deque data structure is chosen as a foundation because of its runtime complexity: O(1) for random element access and amortized O(1) for front-push/pop and back-push/pop.
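Here is one way the design criteria could be sketched in Python. It is an illustration under assumptions, not a reference implementation: the method names (`add`, `closest_to_age`, `window`) are made up for this post, timestamps are assumed to be strictly increasing, and ages are measured back from the newest frame.

```python
from collections import deque

class TimedHistory:
    """Keeps samples covering at least `duration` time-units of history."""

    def __init__(self, duration):
        self.duration = duration
        self._frames = deque()  # newest first: (timestamp, data)

    def add(self, timestamp, data):
        """Prepend a new sample, then trim frames that are no longer needed."""
        self._frames.appendleft((timestamp, data))
        # Drop the oldest frame only while the next-oldest frame is itself
        # at least `duration` old, so the guaranteed span is never lost.
        while (len(self._frames) >= 2
               and timestamp - self._frames[-2][0] >= self.duration):
            self._frames.pop()

    def closest_to_age(self, age):
        """Return the data whose age is closest to `age`, or None if empty."""
        if not self._frames:
            return None
        newest = self._frames[0][0]
        return min(self._frames, key=lambda f: abs((newest - f[0]) - age))[1]

    def window(self, min_age, max_age):
        """Return all data with min_age <= age <= max_age, newest first."""
        newest = self._frames[0][0] if self._frames else 0
        return [d for t, d in self._frames if min_age <= newest - t <= max_age]
```

Python’s `collections.deque` only gives O(1) access near its ends, so the queries in this sketch simply scan the frames linearly. With a deque that offers O(1) random access, the age queries could use a binary search instead, since the timestamps are sorted.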

Recognizing the Pistol Leveling Gesture

Using a TimedHistory object with A+B time-units of history (where A and B could be something like 50 milliseconds each), the gesture is divided into the A time-units before firing (call this the “pre-fire candidate history”) and the B time-units after firing (call this the “post-fire candidate history”). These are essentially windows into parts of history: with the current frame at time 0, the pre-fire candidate history is the time range [-A-B,-B] and the post-fire candidate history is the time range [-B,0]. These windows are used to measure the “signature” of the recent motion; if that signature is significant enough, the gesture is considered to have been recognized.
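The two windows can be sketched as a simple partition of timestamped samples. This is only an illustration: `split_windows` is a hypothetical helper, the samples are plain `(timestamp, value)` pairs, and the choice to put a sample that is exactly B old into the post-fire window is an assumption made so the windows do not overlap.

```python
def split_windows(samples, now, a, b):
    """Split (timestamp, value) samples into pre- and post-fire windows.

    Ages are measured back from `now`. The post-fire window covers ages
    0..b (time range [-B, 0]); the pre-fire window covers ages b..a+b
    (time range [-A-B, -B]). A sample exactly `b` old goes to the
    post-fire window only. Samples older than a+b are ignored.
    """
    pre, post = [], []
    for timestamp, value in samples:
        age = now - timestamp
        if 0 <= age <= b:
            post.append(value)
        elif b < age <= a + b:
            pre.append(value)
    return pre, post
```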

The task at hand is then to determine when the pre- and post-fire candidate histories correspond to the index finger direction being entirely on opposite sides of the x-z plane (for example, completely below it before firing and completely above it after).

The calculations described below should be performed every frame, so a gesture may be recognized at any frame. Using a cooldown period after a gesture has been recognized, during which no gestures may be recognized, is recommended to increase stability.
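A cooldown can be as small as remembering the timestamp of the last recognized gesture. The sketch below assumes timestamps in any consistent unit; the class name `Cooldown` and its `try_fire` method are hypothetical.

```python
class Cooldown:
    """Suppresses recognitions for `period` time-units after one fires."""

    def __init__(self, period):
        self.period = period
        self._last_fired = None  # timestamp of the last accepted gesture

    def try_fire(self, timestamp):
        """Return True and start the cooldown if firing is allowed now."""
        if (self._last_fired is not None
                and timestamp - self._last_fired < self.period):
            return False
        self._last_fired = timestamp
        return True
```

Each frame, the recognition test would run first, and its positive result would only count as a shot if `try_fire` also returns True.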

The A and B time units are tuning parameters – the longer they are, the more stability the recognition should have (up to a point), but this comes with the cost of more latency. The figure of 50 milliseconds was chosen because it is reasonably close to the limit of human perception in this regard, but should admit a reasonable number of data frames in the pre- and post-fire candidate histories.

The simple recognition test is to compute:

- (1) the average “pitch” (see the Leap API) for the finger directions in the pre-fire candidate history,

- (2) the same quantity but for the finger directions in the post-fire candidate history,

- (3) the average fingertip position for all historical data, and

- (4) the average “yaw” of finger directions for all historical data.

If quantities (1) and (2) have opposite signs and are both sufficiently far from zero (beyond some threshold, perhaps pi/15 radians; this is another tuning parameter), then the gun is considered to have fired. Quantity (3) can be used as the firing position and quantity (4) as the firing direction (the “yaw” may need to be converted into a vector quantity, depending on what is being done with it). The direction of the gun’s motion (down-to-up or up-to-down) can be determined from the sign of quantity (1): if it is positive, the motion is up-to-down; otherwise it is down-to-up.
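The test on quantities (1) to (4) might look like the following sketch. Several things here are assumptions: samples are plain `(position, direction)` pairs of (x, y, z) tuples, the `pitch` and `yaw` helpers compute `atan2(y, -z)` and `atan2(x, -z)` (which is how I understand the Leap definitions, but check the API documentation), and `detect_leveling` is a hypothetical name.

```python
import math

def pitch(d):
    # Angle between the negative z axis and the projection of d onto
    # the y-z plane (assumed to match the Leap definition).
    return math.atan2(d[1], -d[2])

def yaw(d):
    # Angle between the negative z axis and the projection of d onto
    # the x-z plane (assumed to match the Leap definition).
    return math.atan2(d[0], -d[2])

def mean(values):
    return sum(values) / len(values)

def detect_leveling(pre, post, threshold=math.pi / 15):
    """pre/post: non-empty lists of (position, direction) samples.

    Returns None when no shot is detected, otherwise a dict with the
    firing position, a unit firing direction in the x-z plane, and the
    direction of the gun's motion.
    """
    q1 = mean([pitch(d) for _, d in pre])    # quantity (1)
    q2 = mean([pitch(d) for _, d in post])   # quantity (2)
    if q1 * q2 >= 0 or min(abs(q1), abs(q2)) < threshold:
        return None
    everything = pre + post
    q3 = tuple(mean([p[i] for p, _ in everything]) for i in range(3))
    q4 = mean([yaw(d) for _, d in everything])
    return {
        "position": q3,
        # Convert the average yaw back into a vector in the x-z plane.
        "direction": (math.sin(q4), 0.0, -math.cos(q4)),
        "motion": "up-to-down" if q1 > 0 else "down-to-up",
    }
```

Averaging yaw angles directly is only reasonable while the finger points roughly toward the screen; near the wraparound at plus or minus pi, averaging the direction vectors would be a safer choice.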

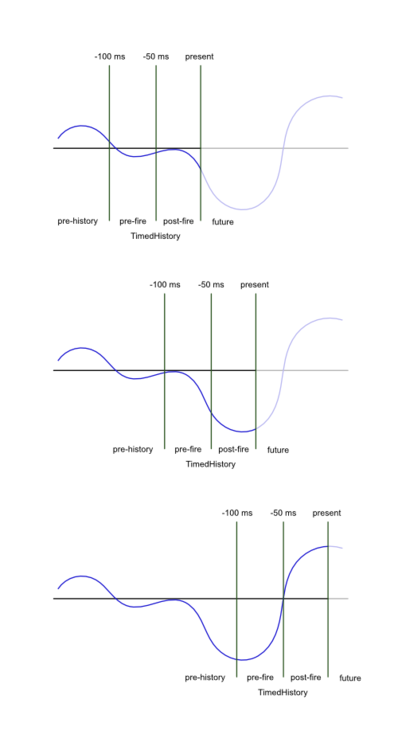

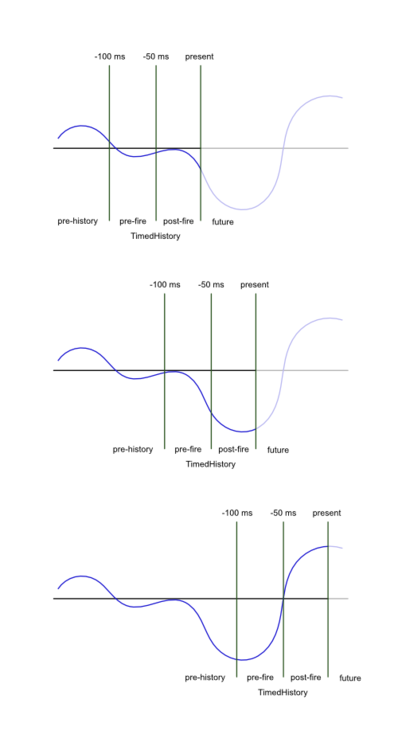

Here is a picture describing use of the TimedHistory object.

These are graphs of the finger pitch as a function of time, with the pre- and post-fire candidate histories overlaid at various times. The “future” is the data that hasn’t been recorded yet. The pre-fire and post-fire windows are the sections of data that are used to calculate the various quantities. Quantities (1) and (2) are defined as the average values of the pitch function in the pre- and post-fire windows respectively. Here are [dummy] values for quantities (1) and (2) for each of the pictures:

- Picture 1: Quantity (1) is -4 and quantity (2) is -3. The firing gesture is not triggered.

- Picture 2: Quantity (1) is -7 and quantity (2) is -21. The firing gesture is not triggered.

- Picture 3: Quantity (1) is -20 and quantity (2) is 17. The firing gesture may be triggered, depending on the other quantities.

Recognizing the Pistol Aiming/Kickback Gesture

Just as in the pistol leveling gesture, a TimedHistory object will be used, as well as the concepts of pre- and post-fire candidate histories. The quantities that should be calculated here are:

- (1) the maximum speed (magnitude of velocity) for the finger in the pre-fire candidate history, and

- (2) the average value of the y component of the finger velocity in the post-fire candidate history.

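If quantity (1) is below some threshold (a tuning parameter, which physically represents what is considered aiming motion of the gun) and quantity (2) is above some threshold (another tuning parameter, which physically represents the magnitude of the kickback necessary to fire the gun), then the gun is considered to have fired. As described earlier, the firing position and direction are then taken from the index finger’s position and direction during the “stillness” phase, that is, from the pre-fire candidate history.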
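A rough sketch of this test is below. The sample layout `(position, direction, velocity)`, the function name `detect_kickback`, and both default thresholds are placeholders chosen for illustration; real values would need tuning. Using the average over the pre-fire window as the firing position and direction is also just one possible reading of “during the stillness phase”.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of a non-empty list of (x, y, z) tuples."""
    n = len(vectors)
    return tuple(sum(v[i] for v in vectors) / n for i in range(3))

def detect_kickback(pre, post, still_speed=50.0, kick_speed=200.0):
    """pre/post: non-empty lists of (position, direction, velocity).

    Fires when the finger was nearly still throughout the pre-fire
    window and moved upward fast enough, on average, in the post-fire
    window. Returns None otherwise.
    """
    # Quantity (1): maximum speed in the pre-fire window.
    q1 = max(math.sqrt(sum(c * c for c in v)) for _, _, v in pre)
    # Quantity (2): average y component of velocity in the post-fire window.
    q2 = sum(v[1] for _, _, v in post) / len(post)
    if q1 >= still_speed or q2 <= kick_speed:
        return None
    return {
        "position": mean_vector([p for p, _, _ in pre]),
        # Mean of the aiming directions; not re-normalized in this sketch.
        "direction": mean_vector([d for _, d, _ in pre]),
    }
```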

Conclusion

There are many refinements and stabilizations that could be made to the gesture recognition techniques described here, but the general idea of the TimedHistory object and how to use it to do gesture recognition is the main point. Hopefully it will inspire some more complex and robust approaches to gesture recognition, as well as ideas in general. Enjoy!

– Victor Dods (photos: Digit Duel by Resn and Fast Iron by Massively Fun)