Each spring, UX aficionados gather in a different part of the world for CHI, an annual conference focused entirely on the human factors in computer interfaces. For creatives, professionals, academics, and developers at the forefront of next-gen UX, it’s a chance to come together and rally around two tough questions.

First, what tools that exist today can help us evolve the way people interact with technology? Second, how can we iterate upon this arsenal to help transform interaction paradigms from pie-in-the-sky sketches into concrete, real-world solutions to better our health, increase our efficiency, and make us feel inspired?

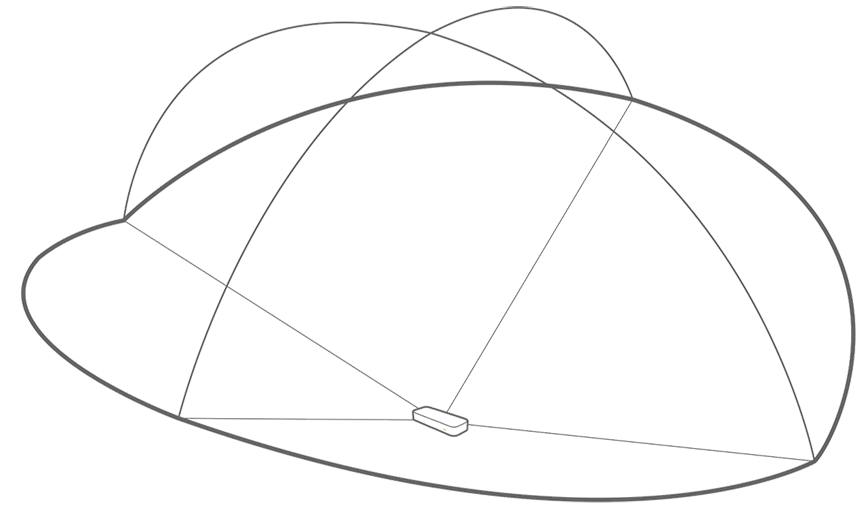

At this year’s conference in Toronto, UX researcher and designer Sheila Christian, a recent graduate of Carnegie Mellon’s Human-Computer Interaction Master’s Program, joined Madeiran students Júlio Alves, André Ferreira, Dinarte Jesus, Rúben Freitas, and Nelson Vieira to build a Leap Motion experience from the ground up for CHI’s student game competition. Their creation was Volcano Salvation, an Aztec-themed god game that combines webcam head tracking with Leap Motion control.

We live in a heavily coded world, where the ability to talk to computers and understand how they “think” is more important than ever. At the same time, however, programming is rarely taught in schools, and it can be hard for young students to get started.