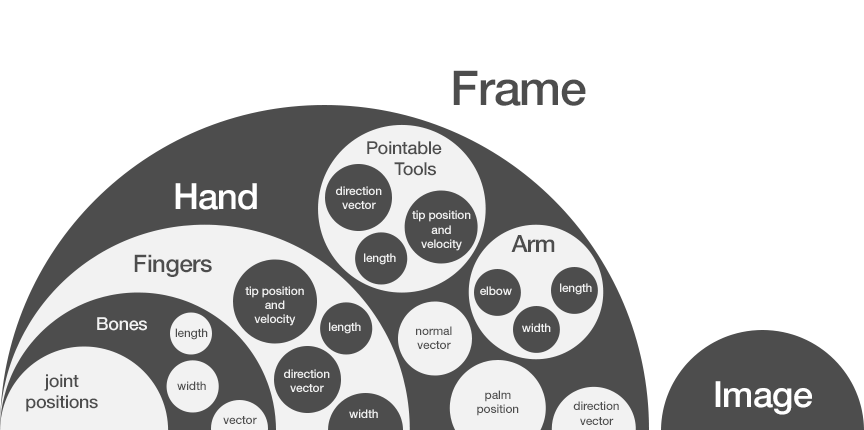

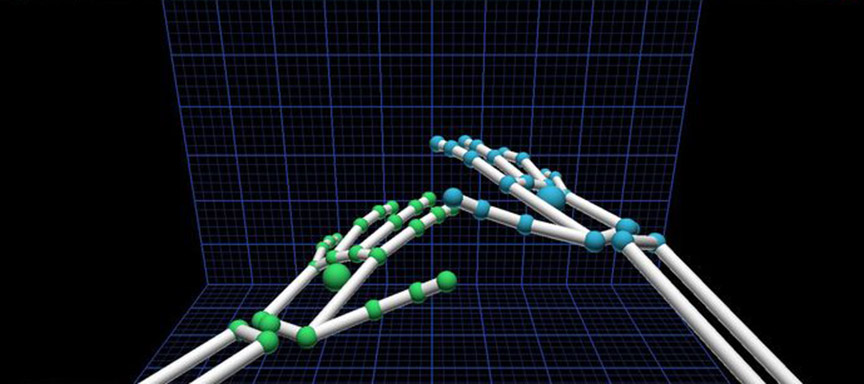

Last week, we took an in-depth look at how the Leap Motion Controller works, from sensor data to the application interface. Today, we’re digging into our API to see how developers can access the data and use it in their own applications. We’ll also review some SDK fundamentals and great resources you can use to get started.

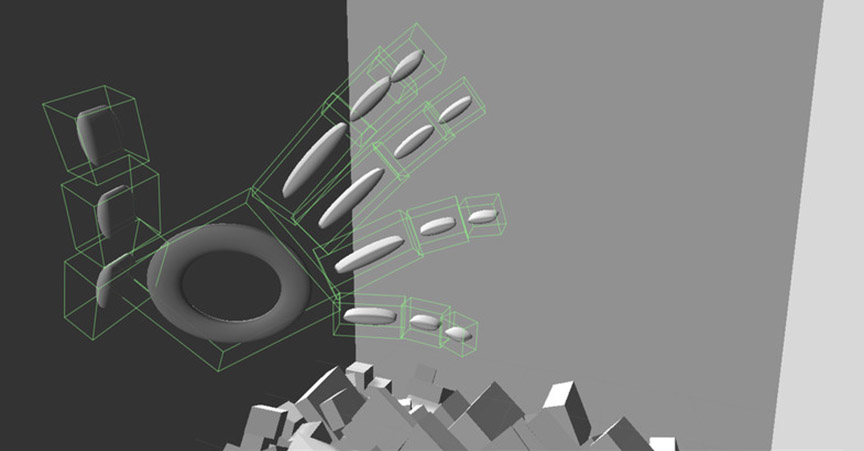

Interacting with your computer is like reaching into another universe – one with an entirely different set of physical laws. Interface design is the art of creating digital rules that sync with our physical intuitions to bring both worlds closer together. We realize that most developers don’t want to spend days fine-tuning hand interactions, so we decided to design a framework that will cut development time and ensure more consistent interactions across apps.

The way we interact with technology is changing, and what we see as resources – wood, water, earth – may one day include digital content. At last week’s API Night at RocketSpace, Leap Motion CTO David Holz discussed our evolution over the past year and what we’re working on. Featured speakers and v2 demos ranged from Unity and creative coding to LeapJS and JavaScript plugins.

Art imitates life, but it doesn’t have to be bound by its rules. While natural interactions always begin with real-world analogues, it’s our job as designers to craft new experiences geared towards virtual platforms. Building on last week’s game design tips, today we’re thinking about how we can break real-world rules and give people superpowers.

What can virtual environments teach us about real-world issues? At last month’s ImagineRIT festival, Team Galacticod’s game Ripple took visitors into an interactive ocean to learn about threats facing coral reefs.

Built in Unity over the course of five weeks, Volcano Salvation lets you change your perspective in the game by turning your head from side to side. This approach to camera controls – facial recognition via webcam – makes it easy for the player to focus on the hand interactions.

In any 3D virtual environment, selecting objects with a mouse becomes difficult when the scene is densely populated and structures are occluded. This is a real problem with anatomy models, where there is no true empty space and organs, vessels, and nerves always sit flush with adjacent structures.

By creating a game that forced his eyes to work together, cross-eye sufferer and game developer James Blaha has been working to overcome his condition and retrain his brain with the power of gamification.