What can virtual environments teach us about real-world issues? At last month’s ImagineRIT festival, Team Galacticod’s game Ripple took visitors into an interactive ocean to learn about threats facing coral reefs.

// Developer Labs

New projects and features, insights on the future of human-computer interaction, and updates on Leap Motion developer communities around the world.

Over the past year, we’ve been iterating on our UX best practices to embrace what we’ve learned from community feedback, internal research, and developer projects. While we’re still exploring what that means for the next generation of Leap Motion apps, today I’d like to highlight four success stories that are currently available in the App Store.

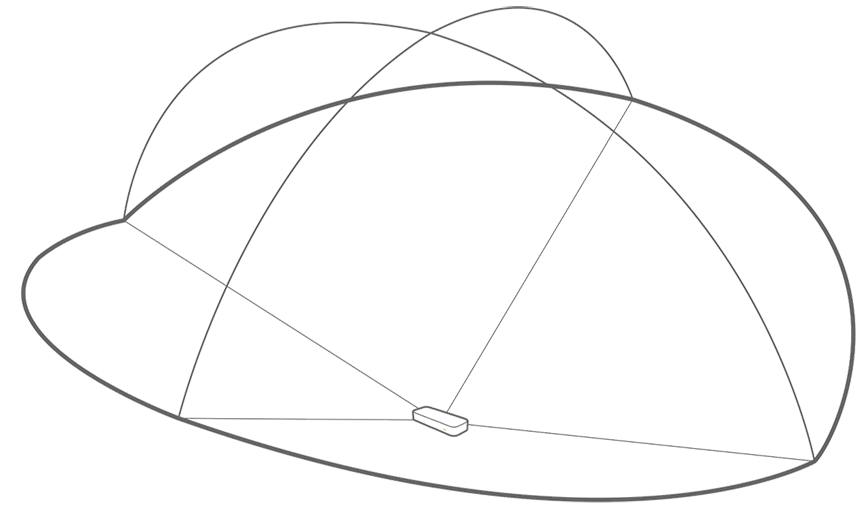

Built in Unity over the course of five weeks, Volcano Salvation lets you change your perspective in the game by turning your head from side to side. This approach to camera controls, which relies on facial recognition via webcam, frees the player to focus on the hand interactions.

For touch-based input technologies, triggering an action is a simple binary question. Touch the device to engage with it. Release it to disengage. Motion control offers a lot more nuance and power, but unlike with mouse clicks or screen taps, your hand doesn’t have the ability to disappear at will. Instead of designing interactions in black and white, we need to start thinking in shades of gray.

In any 3D virtual environment, selecting objects with a mouse becomes difficult once the scene is densely populated and structures occlude one another. This is a real problem with anatomy models, where there is no true empty space and organs, vessels, and nerves always sit flush with adjacent structures.

Mapping legacy interactions like mouse clicks or touchscreen taps to air pokes often results in unhappy users. Let’s think beyond the idea of “touch at a distance” and take a look at what it means when your hand is the interface.

Digital technologies in the operating room can be powerful tools for surgeons, but only as long as they can be controlled without compromising sterile procedures. In the last of our AXLR8R spotlight videos, DriftCoast co-founder Hua (Michael) Chen talks about how he was inspired to use Leap Motion technology to open up new interactive possibilities within the OR. Their […]

By creating a game that forced his eyes to work together, cross-eye sufferer and game developer James Blaha has been working to overcome his condition and retrain his brain with the power of gamification.

While truly smart digital assistants are still on the horizon, recent advances in voice recognition and control technologies are taking us closer to sci-fi dreams like Iron Man’s AI JARVIS or Her’s Samantha.