When combined with auditory and other forms of visual feedback, onscreen hands can create a real sense of physical space, as well as complete the illusion created by VR interfaces like the Oculus Rift. But rigged hands also involve several intriguing challenges.

// UX Design

For touch-based input technologies, triggering an action is a simple binary question. Touch the device to engage with it. Release it to disengage. Motion control offers far more nuance and power, but unlike a mouse click or a screen tap, your hand can’t disappear at will. Instead of designing interactions in black and white, we need to start thinking in shades of gray.
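One way to picture "shades of gray" is to treat engagement as a continuous value rather than a yes/no event. The sketch below is only an illustration under stated assumptions: the function and class names, the millimeter distances, and the on/off thresholds are all hypothetical and do not come from the Leap Motion API. It maps the hand's distance from a target to a level between 0 and 1, then uses two thresholds (hysteresis) so that an always-present hand hovering near the boundary doesn't make the interaction flicker.

```python
from dataclasses import dataclass


def engagement(distance_mm: float, near_mm: float = 20.0, far_mm: float = 120.0) -> float:
    """Map hand-to-target distance to a 0..1 level (hypothetical ranges).

    At or beyond far_mm the level is 0; at or inside near_mm it is 1.
    """
    if far_mm <= near_mm:
        raise ValueError("far_mm must be greater than near_mm")
    t = (far_mm - distance_mm) / (far_mm - near_mm)
    return max(0.0, min(1.0, t))


@dataclass
class HysteresisTrigger:
    """Engage at or above `on`; release only once the level drops below `off`."""
    on: float = 0.8    # assumed engage threshold
    off: float = 0.5   # assumed release threshold, lower than `on`
    active: bool = False

    def update(self, level: float) -> bool:
        if self.active and level < self.off:
            self.active = False
        elif not self.active and level >= self.on:
            self.active = True
        return self.active


if __name__ == "__main__":
    trigger = HysteresisTrigger()
    for d in (110.0, 60.0, 30.0, 65.0, 90.0):  # hand approaches, then retreats
        level = engagement(d)
        print(f"{d:6.1f} mm  level={level:.2f}  active={trigger.update(level)}")
```

The continuous level can drive feedback such as a glow or a sound that grows stronger as the hand approaches, while the trigger decides when an action actually fires. The gap between the two thresholds is a design choice: a wider gap makes the interaction steadier but slower to release.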

In any 3D virtual environment, selecting objects with a mouse gets difficult once the scene is densely populated and structures occlude one another. This is a real problem with anatomy models, where there is no true empty space and organs, vessels, and nerves always sit flush with adjacent structures.

Mapping legacy interactions like mouse clicks or touchscreen taps to air pokes often results in unhappy users. Let’s think beyond the idea of “touch at a distance” and take a look at what it means when your hand is the interface.

When Craig Winslow and Justin Kuzma paint interactive digital media directly onto the physical world, your senses feast in unexpected ways. Last fall, they delivered Growth, an immersive forest of trees you could manipulate and command with your hands in the air. Their most recent Leap Motion installation, ZX, went up this February in Vermont, […]

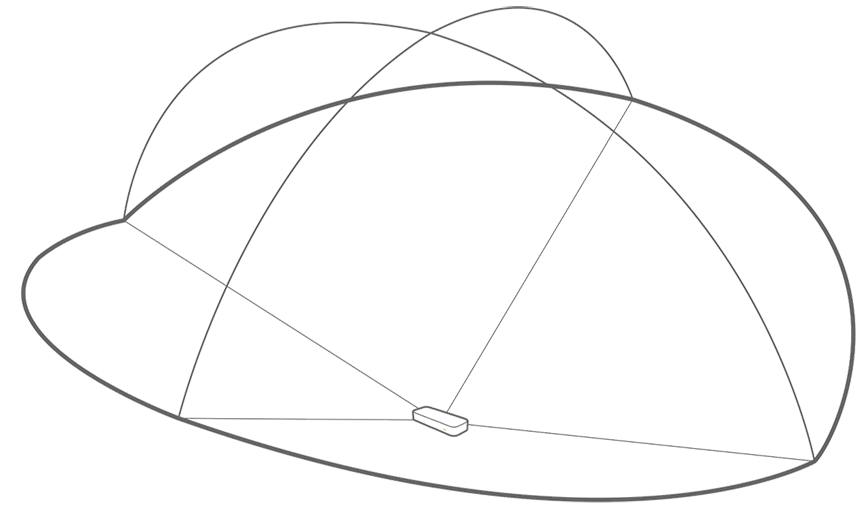

Earlier this year, visitors to Champlain College in Vermont discovered a strange shape emerging from the snowy campus courtyard. Created by Craig Winslow and Justin Kuzma, the duo behind last year’s immersive forest experience Growth, ZX is a 10-foot geometric structure that combines projection mapping with Leap Motion technology. Recently, we caught up with Craig […]

Leapouts is a collaborative 3D model builder, controlled with Leap Motion interaction, that runs inside a Google Hangout. We’ve made a demo available for you to try at leapouts.com. But how did we build it over a weekend without ever having used the Leap Motion API, Firebase, or Three.js before?